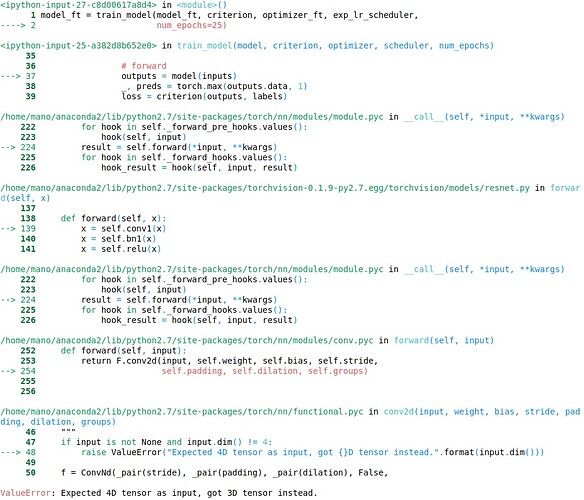

I am using a pre-trained resnet18 for my application, but I’m getting the error shown above. The input image is a [torch.FloatTensor of size 2x75x75]. I have attached the error screenshot. Can anyone point out a possible solution for this problem? Thank you.

PyTorch pretrained models expect 4D inputs: the first dimension is the number of examples you want to process (the batch dimension), followed by the image itself: 3 channels and a size of 224x224.

Hi, thanks for the reply. Is there a way I could use an image of dimension 2x75x75 with this model?

Got it, I used the transforms functionality to rescale the images. Thank you.
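For anyone landing here later, here is a minimal sketch of one way to rescale a 2x75x75 tensor to 224x224. It uses `torch.nn.functional.interpolate` rather than whatever transform the poster actually used, so treat it as an illustration only. The random tensor stands in for the real image, and building a third channel from the mean of the other two is purely an assumption made to reach 3 channels, not something suggested in this thread.

```python
import torch
import torch.nn.functional as F

# Stand-in for the real 2x75x75 image (random data, for illustration only).
img = torch.rand(2, 75, 75)

# interpolate works on batched input, so add a batch dimension first: 1x2x75x75.
batch = img.unsqueeze(0)

# Rescale the spatial dimensions from 75x75 to 224x224.
resized = F.interpolate(batch, size=(224, 224), mode="bilinear", align_corners=False)
print(resized.shape)  # torch.Size([1, 2, 224, 224])

# ASSUMPTION: the model expects 3 channels, so one hypothetical workaround is to
# append a third channel computed as the mean of the existing two.
third = resized.mean(dim=1, keepdim=True)
model_input = torch.cat([resized, third], dim=1)
print(model_input.shape)  # torch.Size([1, 3, 224, 224])
```

Whether a synthetic third channel makes sense depends on what the two channels represent, so that part is a judgment call for your data.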

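PyTorch reads images as (batch_size, channels, height, width), so if you want to feed in one image at a time, add the line

t = t.unsqueeze(0)

Note that unsqueeze() returns a new tensor rather than modifying t in place, so the result has to be assigned. This makes the image 1x2x75x75, i.e. one image with 2 channels of 75x75 each.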
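A quick way to check what unsqueeze does to the shape (the tensor here is random stand-in data, not the poster's image):

```python
import torch

# Stand-in for a single 2-channel 75x75 image.
t = torch.rand(2, 75, 75)

# unsqueeze(0) returns a new tensor with an extra leading dimension;
# the original tensor keeps its shape.
u = t.unsqueeze(0)

print(t.shape)  # torch.Size([2, 75, 75])
print(u.shape)  # torch.Size([1, 2, 75, 75])

# squeeze(0) removes the batch dimension again.
print(u.squeeze(0).shape)  # torch.Size([2, 75, 75])
```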

But the model would only accept images of size 3x224x224. Isn’t that right?

The model will only accept a 4D tensor of the form (batch_size, channels, height, width),

so it will take 1x3x224x224 if you give it one image at a time, or 10x3x224x224 if you give it 10 images at a time (i.e. the batch size is 10).

During training it makes no sense to give it one image at a time, as that will make training insanely slow. If you want to pass one image at a time during inference, you should pass it in as a 4D tensor as I mentioned. For that you can use unsqueeze() to add a dimension.
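To make the batch shapes concrete, here is a rough sketch. The small `model` below is a hypothetical stand-in, not resnet18. It only mimics the fact that the first layer expects 3 input channels, and the images are random data.

```python
import torch
import torch.nn as nn

# ASSUMPTION: a tiny stand-in network, used only to show the input shapes.
# Like resnet18, its first convolution expects 3 input channels.
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(8, 10),
)
model.eval()

# Ten random 3x224x224 "images".
images = [torch.rand(3, 224, 224) for _ in range(10)]

batch = torch.stack(images)       # 10x3x224x224: ten images at once
single = images[0].unsqueeze(0)   # 1x3x224x224: one image with a batch dimension

# no_grad skips gradient tracking, which is not needed for inference.
with torch.no_grad():
    batch_out = model(batch)
    single_out = model(single)

print(batch_out.shape)   # torch.Size([10, 10])
print(single_out.shape)  # torch.Size([1, 10])
```

Passing `images[0]` directly, without the unsqueeze, would give the model a 3D tensor, which is the mismatch discussed in this thread.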