I initialize the nn model with

model = ASRModel(idim_list[0] if args.num_encs == 1 else idim_list, odim, args)

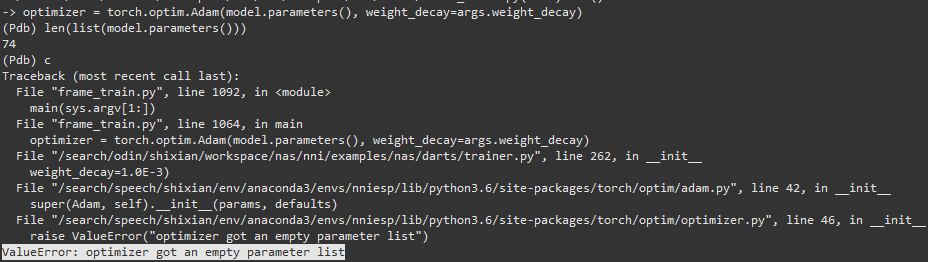

and model.parameters() returns the parameter list correctly. But when I declare an optimizer

optimizer = torch.optim.Adam(model.parameters(), weight_decay=args.weight_decay),

an error occurs.

I found that the `__init__` function of the `Optimizer` base class runs twice, and `len(param_groups)` is 0 the second time. Why?

Are you recreating the optimizer somewhere in your code or changing the parameters of the model?

If you get stuck, could you post an executable code snippet to reproduce this issue, please?
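In case it helps narrow things down: one way to get an empty parameter list on a second construction is passing the same, already consumed generator again, since `model.parameters()` returns a generator and the optimizer materializes it into a list. I can't tell from the snippet whether that is what happens in your code, so treat this as a guess. Below is a minimal pure-Python sketch of the effect. `parameters()` and `ToyOptimizer` are hypothetical stand-ins written for illustration, not PyTorch code.

```python
def parameters():
    """Hypothetical stand-in for model.parameters(): yields items lazily."""
    yield from (0.1, 0.2, 0.3)


class ToyOptimizer:
    """Hypothetical stand-in for an optimizer; NOT torch.optim.Optimizer."""

    def __init__(self, params):
        # Materializing the iterable consumes it if it is a generator.
        self.param_groups = list(params)
        if len(self.param_groups) == 0:
            raise ValueError("optimizer got an empty parameter list")


params = parameters()         # a single generator object
first = ToyOptimizer(params)  # works: the generator is consumed here

try:
    ToyOptimizer(params)      # same generator again, now exhausted
    second_error = None
except ValueError as exc:
    second_error = str(exc)

# Calling parameters() again creates a fresh generator, so this works.
fresh = ToyOptimizer(parameters())
```

If this matches your situation, calling `model.parameters()` again at each place an optimizer is built (or storing `list(model.parameters())` once) should avoid the empty list.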