Hi there @ptrblck, thanks for the answer. I’ve implemented nn.BCEWithLogitsLoss; I actually had it before but wasn’t sure about its effectiveness. I tried applying the threshold as you said, and the accuracy now changes every epoch; the problem is that its values shoot up to ridiculous values in the thousands. I’ve been digging around for information about sigmoid and softmax to see what I should do, but it’s very confusing; maybe I should have explained my situation better. At the moment I have 1 output with 2 possible classes: I want to know whether my patient is healthy or pathological, so it’s basically a binary output like you mentioned. I should also have said that my data is not scaled. Do you have any new advice given this information? Thanks a lot.

Applying torch.sigmoid on the one output logit and using a threshold to get the predictions would still be valid.

If the accuracy is too high, I guess you might be comparing the number of correctly predicted samples to a wrong baseline (e.g. the number of batches instead of the number of samples).
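To make the baseline point concrete, here is a minimal sketch of an accuracy computation for a single-logit binary model. The helper `count_correct` and the two small batches are made up for illustration; they are not taken from the poster's code. Correct predictions are accumulated over batches and divided by the number of samples.

```python
import torch

def count_correct(logits: torch.Tensor, targets: torch.Tensor, threshold: float = 0.5) -> int:
    """Count correct predictions in one batch of single-logit outputs (hypothetical helper)."""
    probs = torch.sigmoid(logits)          # logits -> probabilities in [0, 1]
    preds = (probs > threshold).float()    # probabilities -> hard 0/1 predictions
    return int((preds == targets).sum().item())

# Two made-up batches, each of shape [batch_size, 1]
batches = [
    (torch.tensor([[2.0], [-1.0], [0.5]]), torch.tensor([[1.0], [0.0], [0.0]])),
    (torch.tensor([[-3.0], [4.0]]), torch.tensor([[0.0], [1.0]])),
]

correct, n_samples = 0, 0
for logits, targets in batches:
    correct += count_correct(logits, targets)
    n_samples += targets.size(0)

accuracy = correct / n_samples            # stays within [0, 1]
wrong_baseline = correct / len(batches)   # dividing by the batch count can exceed 1
print(accuracy, wrong_baseline)
```

With realistic batch sizes, the second ratio grows with the batch size, which is one way an "accuracy" can end up far above 1.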

@ptrblck Hi there! I am new to pytorch and have a similar problem. I wonder if you could help me.

There are 762 images in my dataset, each of size (1,160,160). I am trying to build a GAN and the code for Generator and Discriminator class is as follows:

    batch_size = 64

    class Generator(nn.Module):
        def __init__(self, nz):
            super(Generator, self).__init__()
            self.nz = nz
            self.main = nn.Sequential(
                nn.Flatten(),
                nn.Linear(self.nz, 256),
                nn.LeakyReLU(0.2),
                nn.Linear(256, 512),
                nn.LeakyReLU(0.2),
                nn.Linear(512, 1024),
                nn.LeakyReLU(0.2),
                nn.Linear(1024, batch_size),
                nn.Tanh(),
            )

        def forward(self, x):
            return self.main(x).view(-1, 1, 8, 8)

    class Discriminator(nn.Module):
        def __init__(self):
            super(Discriminator, self).__init__()
            self.n_input = batch_size
            self.main = nn.Sequential(
                nn.Flatten(),
                nn.Linear(self.n_input, 1024),
                nn.LeakyReLU(0.2),
                nn.Dropout(0.3),
                nn.Linear(1024, 512),
                nn.LeakyReLU(0.2),
                nn.Dropout(0.3),
                nn.Linear(512, 256),
                nn.LeakyReLU(0.2),
                nn.Dropout(0.3),
                nn.Linear(256, 1),
                nn.Sigmoid(),
            )

        def forward(self, x):
            x = x.view(-1, batch_size)
            return self.main(x)

    generator = Generator(nz).to(device)
    discriminator = Discriminator().to(device)

But when I try to train it, it gives the following error:

Using a target size (torch.Size([64, 1])) that is different to the input size (torch.Size([304800, 1])) is deprecated. Please ensure they have the same size.

I don’t understand where the 304800 came from. Could you help me solve the issue? Thanks!

I don’t quite understand why you are using the batch_size as the out_features of the linear layer in the Generator and are also reshaping the activation such that the batch_size is in dim1.

PyTorch layers expect (in the majority of layers) the batch dimension to be in dim0. Also, layer features (input and output features) do not depend on the batch size.
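As a rough sketch of what this means for the generator above: the last linear layer can produce one value per pixel (channels x height x width), and only the feature dimension is reshaped while the batch stays in dim0. The latent size `nz = 100`, the reduced layer stack, and the `img_shape` argument are assumptions for illustration, not the poster's final model.

```python
import torch
import torch.nn as nn

class Generator(nn.Module):
    def __init__(self, nz: int, img_shape=(1, 160, 160)):
        super().__init__()
        self.img_shape = img_shape
        n_out = img_shape[0] * img_shape[1] * img_shape[2]  # 1 * 160 * 160 = 25600
        self.main = nn.Sequential(
            nn.Linear(nz, 256),
            nn.LeakyReLU(0.2),
            nn.Linear(256, n_out),  # features per sample, independent of the batch size
            nn.Tanh(),
        )

    def forward(self, z):
        # Keep the batch in dim0 and reshape only the features into an image.
        return self.main(z).view(z.size(0), *self.img_shape)

nz = 100  # assumed latent size
fake = Generator(nz)(torch.randn(64, nz))
print(fake.shape)  # torch.Size([64, 1, 160, 160])

# Flattening for a linear discriminator also keeps the batch in dim0.
disc_in = fake.view(fake.size(0), -1)
print(disc_in.shape)  # torch.Size([64, 25600])

# One possible reading of the 304800 in the error (an inference, not confirmed
# in the thread): 762 images of 160*160 pixels reshaped into rows of 64 values.
rows = 762 * 1 * 160 * 160 // 64
print(rows)  # 304800
```

The arithmetic in the last lines is consistent with all 762 images reaching `x.view(-1, batch_size)` at once, which would turn the pixel data into 304800 rows of 64 values.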

My mistake. I suppose the output should be channel x height x width of the image. But then my images are huge, at (1,160,160). So I guess I should first reduce them to a smaller size and then use this reduced size as the out_features of the linear layer in the Generator.

Hello everyone,

I’m developing a project about reading X-ray images of COVID-19 patients.

It’s my first time developing a Deep Learning project.

I’m adapting the original code that my professors supplied to me.

The task I’m currently stuck is on the training of a CNN with a lungs detector.

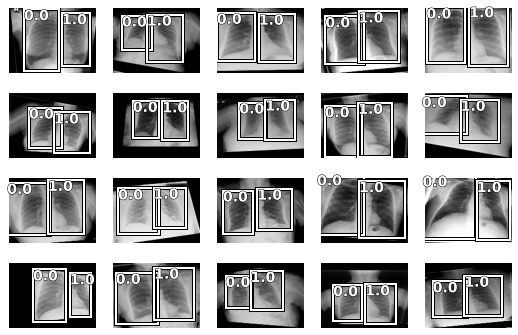

Previously I’ve been able to create a DataBlock() object and show a batch from the dataset with the lungs of all images inside bounding boxes and with their appropiate labels as you can see in the next image (label 0.0 for bbox left & label 1.0 for bbox right):

The issue I have is when I run the cell of the function learn.lr_find() I get the following error:

RuntimeError: The size of tensor a (64) must match the size of tensor b (2) at non-singleton dimension 1.

I also get the following warning:

Python\Python37\lib\site-packages\torch\_tensor.py:1051: UserWarning: Using a target size (torch.Size([64, 2, 4])) that is different to the input size (torch.Size([64, 4])). This will likely lead to incorrect results due to broadcasting. Please ensure they have the same size.

I found this topic while searching for information about the error and I’ve read it, but I don’t know what I have to change to make the code run successfully.

The key parts of my code are:

Creating the dataset

    data = DataBlock(
        blocks=(ImageBlock, BBoxBlock, BBoxLblBlock),  # ImageBlock means type of inputs are images; BBoxBlock & BBoxLblBlock = type of targets are BBoxes & their labels
        get_items=get_image_files,
        n_inp=1,  # number of inputs; it's 1 because the only inputs are the rx images (ImageBlock)
        get_y=get_y,  # get_y = targets [bboxes, labels]; get_x = inputs
        splitter=RandomSplitter(0.1),  # split training/validation; parameter 0.1 means there will be 10% of validation images
        batch_tfms=[*aug_transforms(do_flip=False, size=(120, 160)), Normalize.from_stats(*imagenet_stats)]
    )

    dls = data.dataloaders(path_dl, path=path_dl, bs=64)  # NOTE: what is the meaning of the bs argument
    dls.show_batch(max_n=20, figsize=(9, 6))

Train the model

    def intersection(preds, targs):
        # preds and targs are of shape (bs, 4), pascal_voc format
        max_xy = torch.min(preds[:, 2:], targs[:, 2:])
        min_xy = torch.max(preds[:, :2], targs[:, :2])
        inter = torch.clamp((max_xy - min_xy), min=0)
        return inter[:, 0] * inter[:, 1]

    def area(boxes):
        return ((boxes[:, 2]-boxes[:, 0]) * (boxes[:, 3]-boxes[:, 1]))

    def union(preds, targs):
        return area(preds) + area(targs) - intersection(preds, targs)

    def IoU(preds, targs):
        return intersection(preds, targs) / union(preds, targs)

    SMOOTH = 1e-6

    def iou_rm(outputs: torch.Tensor, labels: torch.Tensor):
        # You can comment out this line if you are passing tensors of equal shape
        # But if you are passing output from UNet or something it will most probably
        # be with the BATCH x 1 x H x W shape
        # rm error not being byte
        outputs = outputs > 0.5
        labels = labels.byte()  # Tensor.byte(); torch.Tensor has no .Byte() method
        outputs = outputs.squeeze(1)  # BATCH x 1 x H x W => BATCH x H x W
        intersection = (outputs & labels).float().sum((1, 2))  # Will be zero if Truth=0 or Prediction=0
        union = (outputs | labels).float().sum((1, 2))  # Will be zero if both are 0
        iou = (intersection + SMOOTH) / (union + SMOOTH)  # We smooth our division to avoid 0/0
        thresholded = torch.clamp(20 * (iou - 0.5), 0, 10).ceil() / 10  # This is equal to comparing with thresholds
        return thresholded  # Or thresholded.mean() if you are interested in average across the batch

    # Numpy version
    # Well, it's the same function, so I'm going to omit the comments
    def iou_np(outputs: np.array, labels: np.array):
        outputs = outputs.squeeze(1)
        intersection = (outputs & labels).sum((1, 2))
        union = (outputs | labels).sum((1, 2))
        iou = (intersection + SMOOTH) / (union + SMOOTH)
        thresholded = np.ceil(np.clip(20 * (iou - 0.5), 0, 10)) / 10
        return thresholded  # Or thresholded.mean()

    class LungDetector(nn.Module):
        def __init__(self, arch=models.resnet18):  # was 18
            super().__init__()
            self.cnn = create_body(arch)
            print(num_features_model(self.cnn))
            self.head = create_head(num_features_model(self.cnn), 4)

        def forward(self, im):
            x = self.cnn(im)
            x = self.head(x)
            return 2 * (x.sigmoid_() - 0.5)

    def loss_fn(preds, targs, class_idxs):
        return L1Loss()(preds, targs.squeeze())

    learn = Learner(dls, LungDetector(arch=models.resnet50), loss_func=loss_fn)
    learn.metrics = [lambda preds, targs, _: IoU(preds, targs.squeeze()).mean()]
    learn._split([learn.model.cnn[:6], learn.model.cnn[6:], learn.model.head])  # NOTE: why :6 or 6: ?
    learn.freeze_to(-1)

    # To run this cell it's necessary to have a Nvidia GPU
    # I tried running it on a PC with a different GPU and the cell didn't end the execution
    learn.lr_find()
    learn.recorder.plot()

The stack trace error is:

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

<ipython-input-63-606127485fbd> in <module>

2 # I tried running it on a PC with a different GPU and the cell didn't end the execution

3

----> 4 learn.lr_find()

5 learn.recorder.plot()

~\AppData\Local\Programs\Python\Python37\lib\site-packages\fastai\callback\schedule.py in lr_find(self, start_lr, end_lr, num_it, stop_div, show_plot, suggest_funcs)

283 n_epoch = num_it//len(self.dls.train) + 1

284 cb=LRFinder(start_lr=start_lr, end_lr=end_lr, num_it=num_it, stop_div=stop_div)

--> 285 with self.no_logging(): self.fit(n_epoch, cbs=cb)

286 if suggest_funcs is not None:

287 lrs, losses = tensor(self.recorder.lrs[num_it//10:-5]), tensor(self.recorder.losses[num_it//10:-5])

~\AppData\Local\Programs\Python\Python37\lib\site-packages\fastai\learner.py in fit(self, n_epoch, lr, wd, cbs, reset_opt)

219 self.opt.set_hypers(lr=self.lr if lr is None else lr)

220 self.n_epoch = n_epoch

--> 221 self._with_events(self._do_fit, 'fit', CancelFitException, self._end_cleanup)

222

223 def _end_cleanup(self): self.dl,self.xb,self.yb,self.pred,self.loss = None,(None,),(None,),None,None

~\AppData\Local\Programs\Python\Python37\lib\site-packages\fastai\learner.py in _with_events(self, f, event_type, ex, final)

161

162 def _with_events(self, f, event_type, ex, final=noop):

--> 163 try: self(f'before_{event_type}'); f()

164 except ex: self(f'after_cancel_{event_type}')

165 self(f'after_{event_type}'); final()

~\AppData\Local\Programs\Python\Python37\lib\site-packages\fastai\learner.py in _do_fit(self)

210 for epoch in range(self.n_epoch):

211 self.epoch=epoch

--> 212 self._with_events(self._do_epoch, 'epoch', CancelEpochException)

213

214 def fit(self, n_epoch, lr=None, wd=None, cbs=None, reset_opt=False):

~\AppData\Local\Programs\Python\Python37\lib\site-packages\fastai\learner.py in _with_events(self, f, event_type, ex, final)

161

162 def _with_events(self, f, event_type, ex, final=noop):

--> 163 try: self(f'before_{event_type}'); f()

164 except ex: self(f'after_cancel_{event_type}')

165 self(f'after_{event_type}'); final()

~\AppData\Local\Programs\Python\Python37\lib\site-packages\fastai\learner.py in _do_epoch(self)

204

205 def _do_epoch(self):

--> 206 self._do_epoch_train()

207 self._do_epoch_validate()

208

~\AppData\Local\Programs\Python\Python37\lib\site-packages\fastai\learner.py in _do_epoch_train(self)

196 def _do_epoch_train(self):

197 self.dl = self.dls.train

--> 198 self._with_events(self.all_batches, 'train', CancelTrainException)

199

200 def _do_epoch_validate(self, ds_idx=1, dl=None):

~\AppData\Local\Programs\Python\Python37\lib\site-packages\fastai\learner.py in _with_events(self, f, event_type, ex, final)

161

162 def _with_events(self, f, event_type, ex, final=noop):

--> 163 try: self(f'before_{event_type}'); f()

164 except ex: self(f'after_cancel_{event_type}')

165 self(f'after_{event_type}'); final()

~\AppData\Local\Programs\Python\Python37\lib\site-packages\fastai\learner.py in all_batches(self)

167 def all_batches(self):

168 self.n_iter = len(self.dl)

--> 169 for o in enumerate(self.dl): self.one_batch(*o)

170

171 def _do_one_batch(self):

~\AppData\Local\Programs\Python\Python37\lib\site-packages\fastai\learner.py in one_batch(self, i, b)

192 b = self._set_device(b)

193 self._split(b)

--> 194 self._with_events(self._do_one_batch, 'batch', CancelBatchException)

195

196 def _do_epoch_train(self):

~\AppData\Local\Programs\Python\Python37\lib\site-packages\fastai\learner.py in _with_events(self, f, event_type, ex, final)

161

162 def _with_events(self, f, event_type, ex, final=noop):

--> 163 try: self(f'before_{event_type}'); f()

164 except ex: self(f'after_cancel_{event_type}')

165 self(f'after_{event_type}'); final()

~\AppData\Local\Programs\Python\Python37\lib\site-packages\fastai\learner.py in _do_one_batch(self)

173 self('after_pred')

174 if len(self.yb):

--> 175 self.loss_grad = self.loss_func(self.pred, *self.yb)

176 self.loss = self.loss_grad.clone()

177 self('after_loss')

<ipython-input-59-bf592cbdc4b7> in loss_fn(preds, targs, class_idxs)

1 def loss_fn(preds, targs, class_idxs):

----> 2 return L1Loss()(preds, targs.squeeze())

~\AppData\Local\Programs\Python\Python37\lib\site-packages\torch\nn\modules\module.py in _call_impl(self, *input, **kwargs)

1100 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks

1101 or _global_forward_hooks or _global_forward_pre_hooks):

-> 1102 return forward_call(*input, **kwargs)

1103 # Do not call functions when jit is used

1104 full_backward_hooks, non_full_backward_hooks = [], []

~\AppData\Local\Programs\Python\Python37\lib\site-packages\torch\nn\modules\loss.py in forward(self, input, target)

94

95 def forward(self, input: Tensor, target: Tensor) -> Tensor:

---> 96 return F.l1_loss(input, target, reduction=self.reduction)

97

98

~\AppData\Local\Programs\Python\Python37\lib\site-packages\torch\nn\functional.py in l1_loss(input, target, size_average, reduce, reduction)

3066 if has_torch_function_variadic(input, target):

3067 return handle_torch_function(

-> 3068 l1_loss, (input, target), input, target, size_average=size_average, reduce=reduce, reduction=reduction

3069 )

3070 if not (target.size() == input.size()):

~\AppData\Local\Programs\Python\Python37\lib\site-packages\torch\overrides.py in handle_torch_function(public_api, relevant_args, *args, **kwargs)

1353 # Use `public_api` instead of `implementation` so __torch_function__

1354 # implementations can do equality/identity comparisons.

-> 1355 result = torch_func_method(public_api, types, args, kwargs)

1356

1357 if result is not NotImplemented:

~\AppData\Local\Programs\Python\Python37\lib\site-packages\fastai\torch_core.py in __torch_function__(self, func, types, args, kwargs)

338 convert=False

339 if _torch_handled(args, self._opt, func): convert,types = type(self),(torch.Tensor,)

--> 340 res = super().__torch_function__(func, types, args=args, kwargs=kwargs)

341 if convert: res = convert(res)

342 if isinstance(res, TensorBase): res.set_meta(self, as_copy=True)

~\AppData\Local\Programs\Python\Python37\lib\site-packages\torch\_tensor.py in __torch_function__(cls, func, types, args, kwargs)

1049

1050 with _C.DisableTorchFunction():

-> 1051 ret = func(*args, **kwargs)

1052 if func in get_default_nowrap_functions():

1053 return ret

~\AppData\Local\Programs\Python\Python37\lib\site-packages\torch\nn\functional.py in l1_loss(input, target, size_average, reduce, reduction)

3078 reduction = _Reduction.legacy_get_string(size_average, reduce)

3079

-> 3080 expanded_input, expanded_target = torch.broadcast_tensors(input, target)

3081 return torch._C._nn.l1_loss(expanded_input, expanded_target, _Reduction.get_enum(reduction))

3082

~\AppData\Local\Programs\Python\Python37\lib\site-packages\torch\functional.py in broadcast_tensors(*tensors)

70 if has_torch_function(tensors):

71 return handle_torch_function(broadcast_tensors, tensors, *tensors)

---> 72 return _VF.broadcast_tensors(tensors) # type: ignore[attr-defined]

73

74

RuntimeError: The size of tensor a (64) must match the size of tensor b (2) at non-singleton dimension 1

I would greatly appreciate it if anyone could give me a clue about how to solve the error described above.

Thank you.

Marc

I would recommend fixing the warning first:

UserWarning: Using a target size (torch.Size([64, 2, 4])) that is different to the input size (torch.Size([64, 4])). This will likely lead to incorrect results due to broadcasting. Please ensure they have the same size.

by checking the model output shape and the target shape.

As described in the warning, the shape mismatch will broadcast your tensors, which would yield wrong results.

Since you are using L1Loss make sure the output and targets have the same shape.

Once this is solved, check if you are reshaping the activation tensors inside your forward method, as it seems that the other shape mismatch error is raised after the batch size of one tensor was changed.
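A small sketch of the shapes involved may help here. It uses dummy tensors with the sizes from the warning (no fastai objects) and shows that `squeeze()` leaves the `[64, 2, 4]` target unchanged and that broadcasting it against `[64, 4]` fails at dim 1, matching the reported RuntimeError.

```python
import torch

preds = torch.zeros(64, 4)     # model output: 4 coordinates per image
targs = torch.zeros(64, 2, 4)  # targets: 2 boxes with 4 coordinates per image

# squeeze() only removes dimensions of size 1, so nothing changes here.
squeezed_shape = tuple(targs.squeeze().shape)
print(squeezed_shape)  # (64, 2, 4)

# Broadcasting aligns shapes from the right: (64, 4) vs (64, 2, 4).
# The last dims match (4 vs 4), but the next pair is 64 vs 2, which is invalid.
try:
    torch.broadcast_tensors(preds, targs)
    broadcast_error = None
except RuntimeError as err:
    broadcast_error = str(err)
print(broadcast_error)
```

So the warning and the error share one cause: the loss receives a prediction with one box per image and a target with two.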

Thank you so much for your suggestions.

Yesterday I had a meeting with my professors and they told me to focus first on making the notebook they implemented run successfully and on correcting the functions that are outdated.

On the original notebook, for each image of the dataset there is only one bounding box that encapsulates the 2 lungs.

Once I’ve run the whole original notebook successfully, I’ll focus on understanding its code completely with the help of, for example, a book I found this week called “Deep learning for coders with fastai and pytorch”.

Finally, when I’ve understood all the functions of the original notebook, especially the ones in the training part, I’ll come back to my version of the notebook and, with the knowledge from the book and from my own research, I’ll try to solve the error I described in this topic, taking your suggestions into account.

I’ll let you know if I solved the error and how I solved it.

That sounds like a great idea! Keep us updated how it goes.

Hi @ptrblck,

I wanted to let you know that I’m currently trying to fix the same error I posted about in this topic more than 2 weeks ago.

As I said I would, I’ve read and taken notes on the chapters of the book “Deep learning for coders with fastai and pytorch” that I found most relevant for my project.

After reading the book I focused on getting the original notebook working, which generates 1 bbox for each image of the dataset. I managed to get it working last week, and since last Friday I’m back on the modified version of the notebook, which generates 2 bboxes for each image: 1 for the left lung and another for the right lung.

If you can help me find a solution to the error, I’d appreciate it if you could take a look at the topic where I described my problem in more detail (the most important messages are #7 & #8). Topic’s link: Multi Object Detection - Error lr_find() fastai v2 - fastai users - Deep Learning Course Forums.

You can reply to me on this topic or on the fast.ai topic.

Hi @ptrblck,

I wanted to let you know that the error I asked you for help with has been solved.

I managed to solve it by following the advice of a user on the fast.ai topic where I described my problem.

I gave the target tensor a size of (8,), containing the coordinates of both lungs. It has to have this size because the prediction tensor has size (8,), since in the create_head() call of the custom model I set the number of outputs to 8.

I had to reshape the targets tensor before loading it into the DataBlock, because otherwise it would be shaped as (2,4) and its size wouldn’t match the size of the predictions tensor.

Plus, I removed the labels (0 - left lung, 1 - right lung) because I noticed I don’t need them; my task is just about detecting the lungs, not classifying them. Using labels would be a problem because the size of the labels list (2,) wouldn’t match the size of the targets and predictions (8,); there would be more labels than expected.
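The shape logic of this fix can be sketched with dummy tensors. Note the assumption: the post reshapes the targets before they enter the DataBlock, whereas this sketch flattens them right before the loss, which is only one alternative place to do it. The batch size of 64 is taken from the earlier warning.

```python
import torch
import torch.nn as nn

bs = 64
preds = torch.rand(bs, 8)     # head with 8 outputs: two boxes x 4 coordinates
targs = torch.rand(bs, 2, 4)  # targets as two boxes per image

# Flatten the two boxes into one vector per image: [64, 2, 4] -> [64, 8]
targs_flat = targs.view(bs, -1)

loss = nn.L1Loss()(preds, targs_flat)  # shapes match, so no broadcasting occurs
print(targs_flat.shape, loss.item())

# If a per-box metric such as IoU is needed, the predictions can be viewed
# back as [batch, 2 boxes, 4 coordinates] without copying data.
pred_boxes = preds.view(bs, 2, 4)
```

Either way, the point is that prediction and target carry the same number of values per image in the same order.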

Regards,

Marc

Hi, you are a nice guy.

I ran into the same issue when I ran my code; it happens when execution reaches before_train = criterion(y_pred.squeeze(), test_output)

```python
#!/usr/bin/env python
# coding: utf-8

import pandas as pd

file_path = "data/shunfenbu1_huice_from_mongo.csv"
df = pd.read_csv(file_path)

columns = ["lunci", "home_rank", "away_rank", "wangji_win", "wangji_draw", "wangji_loss", "home_win", "home_draw",
           "home_loss", "away_win", "away_draw", "away_loss", "start_win_sp", "start_draw_sp", "start_loss_sp",
           "end_win_sp", "end_draw_sp", "end_loss_sp", "score", ]

df_new = df.loc[:, columns]


def add_column_with_value_according_spec_col(source):
    target = source.copy()  # make a copy from source
    target['result'] = 3  # create a new column with any value
    for i, rows in target.iterrows():
        home_score, away_score = rows['score'].split("-")
        if home_score == away_score:
            rows['result'] = 1
        if int(home_score) > int(away_score):
            rows['result'] = 3
        if int(home_score) < int(away_score):
            rows['result'] = 0
    return target  # return all rows, the last column


df_after_dealed = add_column_with_value_according_spec_col(df_new)
df_after_dealed.drop('score', axis=1, inplace=True)

training = df_after_dealed.iloc[:-10]  # select all the rows except the last 10
test = df_after_dealed.iloc[-10:]  # select the last 10 rows

print(training[:3])  # print to check
print(test[:3])  # print to test

# normalize the data between 0 and 1
for e in range(len(training.columns)):  # iterate for each column
    num = max(training.iloc[:, e].max(), test.iloc[:, e].max())  # check the maximum value in each column
    if num < 10:
        training.iloc[:, e] /= 10
        test.iloc[:, e] /= 10
    elif num < 100:
        training.iloc[:, e] /= 100
        test.iloc[:, e] /= 100
    elif num < 1000:
        training.iloc[:, e] /= 1000
        test.iloc[:, e] /= 1000
    else:
        print("Error in normalization! Please check!")

print(training[:3])  # print to check
print(test[:3])  # print to check

training = training.sample(frac=1)  # shuffle the training data
test = test.sample(frac=1)  # shuffle the test data

print(training[:3])  # print to check
print(test[:3])  # print to check

# all rows, all columns except for the last column
training_input = training.iloc[:, :-1]
# all rows, the last column
training_output = training.iloc[:, -1:]
print(training_output)

# all rows, all columns except for the last column
test_input = test.iloc[:, :-1]
# all rows, the last column
test_output = test.iloc[:, -1:]

print(test_input)  # print to check
print(test_output)  # print to check

import torch


class Net(torch.nn.Module):
    def __init__(self, input_size, hidden_size):
        super(Net, self).__init__()
        self.input_size = input_size
        self.hidden_size = hidden_size
        self.fc1 = torch.nn.Linear(self.input_size, self.hidden_size)
        self.relu = torch.nn.ReLU()
        self.fc2 = torch.nn.Linear(self.hidden_size, 1)
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        hidden = self.fc1(x)
        relu = self.relu(hidden)
        output = self.fc2(relu)
        output = self.sigmoid(output)
        output = output.squeeze(-1)  # added this line following https://blog.csdn.net/weixin_44482737/article/details/125636441
        return output


print(training_input.values)

training_input = torch.FloatTensor(training_input.values)
training_output = torch.FloatTensor(training_output.values)
test_input = torch.FloatTensor(test_input.values)
test_output = torch.FloatTensor(test_output.values)

# print(input_size, hidden_size)
input_size = training_input.size()[1]  # number of features selected
hidden_size = 30  # number of nodes/neurons in the hidden layer; I don't understand why this is 30

model = Net(input_size, hidden_size)  # create the model
criterion = torch.nn.BCELoss(reduction='mean')  # works for binary classification

# without momentum parameter
optimizer = torch.optim.SGD(model.parameters(), lr=0.9)
# with momentum parameter
optimizer = torch.optim.SGD(model.parameters(), lr=0.9, momentum=0.2)

model.eval()
y_pred = model(test_input)
before_train = criterion(y_pred.squeeze(), test_output)
print('Test loss before training', before_train.item())

print(y_pred.size())
test_output.size()

model.train()
epochs = 5000
errors = []
for epoch in range(epochs):
    optimizer.zero_grad()
    # Forward pass
    y_pred = model(training_input)
    # Compute Loss
    loss = criterion(y_pred, training_output)
    errors.append(loss.item())
    print('Epoch {}: train loss: {}'.format(epoch, loss.item()))
    # Backward pass
    loss.backward()
    optimizer.step()

model.eval()
y_pred = model(test_input)
after_train = criterion(y_pred, test_output)

import matplotlib.pyplot as plt
import numpy as np


def plotcharts(errors):
    errors = np.array(errors)
    plt.figure(figsize=(12, 5))
    graf02 = plt.subplot(1, 2, 1)  # nrows, ncols, index
    graf02.set_title('Errors')
    plt.plot(errors, '-')
    plt.xlabel('Epochs')
    graf03 = plt.subplot(1, 2, 2)
    graf03.set_title('Tests')
    a = plt.plot(test_output.numpy(), 'yo', label='Real')
    plt.setp(a, markersize=10)
    a = plt.plot(y_pred.detach().numpy(), 'b+', label='Predicted')
    plt.setp(a, markersize=10)
    plt.legend(loc=7)
    plt.show()


plotcharts(errors)
```

I guess the squeeze operation might create the shape mismatch error.

Did you check the shape of y_pred and test_output and could you explain why you are squeezing y_pred?
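To illustrate the kind of check meant here, the sketch below uses dummy tensors with the shapes the posted code appears to produce: the model already applies `squeeze(-1)`, giving `[10]`, while a target built from `df.iloc[:, -1:]` keeps a trailing dimension, giving `[10, 1]`. These shapes are inferred from the post, not confirmed by the poster. In recent PyTorch versions `nn.BCELoss` raises a `ValueError` for such a mismatch.

```python
import torch

criterion = torch.nn.BCELoss()

y_pred = torch.full((10,), 0.5)  # like the model output after squeeze(-1): [10]
target = torch.zeros(10, 1)      # like a tensor built from iloc[:, -1:]: [10, 1]
print(y_pred.shape, target.shape)

try:
    criterion(y_pred, target)    # shapes differ, so the loss refuses to run
    mismatch_error = None
except ValueError as err:
    mismatch_error = str(err)
print(mismatch_error)

# Giving both tensors the same shape resolves it; here the target is squeezed.
loss = criterion(y_pred, target.squeeze(1))
print(loss.item())  # about 0.6931, i.e. -log(0.5)
```

Selecting the column with `iloc[:, -1]` instead of `iloc[:, -1:]` would be another way to obtain a 1-D target, under the same assumption about the data layout.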

Please, can you help with this problem? This is the error: ValueError: Using a target size (torch.Size([4])) that is different to the input size (torch.Size([4, 3])) is deprecated. Please ensure they have the same size.

And this is my code

    class Data(Dataset):  # class header missing from the post; name taken from Data() below, base class assumed
        def __init__(self):
            self.x = torch.arange(-2, 2, 0.1).view(-1, 1)
            self.y = torch.zeros(self.x.shape[0])
            self.y[(self.x > -1.0)[:, 0] * (self.x < 1.0)[:, 0]] = 1
            self.y[(self.x >= 1.0)[:, 0]] = 2
            self.y = self.y.type(torch.LongTensor)
            self.len = self.x.shape[0]

        # Getter
        def __getitem__(self, index):
            return self.x[index], self.y[index]

        # Get Length
        def __len__(self):
            return self.len

    dataset = Data()
    data = DataLoader(dataset, batch_size = 4)

    class classifier(nn.Module):
        def __init__(self):
            super().__init__()
            self.layer_1 = nn.Linear(in_features = 1, out_features = 3)

        def forward(self,x):
            x = self.layer_1(x)
            return x

    model = classifier()
    criterion = nn.BCELoss()
    optimizer = torch.optim.Adam(model.parameters(), lr = 0.01)
    manual_seed = 20
    epochs = 100

    for x,y in data:
        for epoch in range(epochs):
            y_hat = model(x)
            loss = criterion(y_hat, y)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

nn.BCELoss expects model outputs and targets in the same shape as indicated in the error message and explained in the docs.

Based on the error message it seems you are working on a multi-label classification use case where each sample can contain zero, one, or multiple classes (up to 3), as indicated by the model output shape of [4, 3]. However, the target is expected to have the same shape, indicating which classes are active.

Your target tensor is missing the feature dimension, so use target = target.unsqueeze(1) to unsqueeze dim1 and the warning should disappear.
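For the posted shapes specifically, a short sketch of two options follows. Unsqueezing a `[4]` target gives `[4, 1]`, which lines up with a single-output model; with three outputs per sample the target needs either three columns or a loss that accepts class indices. The posted dataset stores class indices 0, 1 and 2 in a `LongTensor`, which suggests (as an assumption about the intent) a single-label multi-class problem. The tensors here are dummies, not the poster's data.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

logits = torch.randn(4, 3)           # raw model output: [batch, classes]
target = torch.tensor([0, 1, 2, 1])  # class indices: [batch], dtype long

# Option 1, single-label multi-class: CrossEntropyLoss takes [N, C] logits
# together with [N] class indices, so no reshaping of the target is needed.
ce_loss = nn.CrossEntropyLoss()(logits, target)

# Option 2, multi-label: the target must be a float tensor shaped like the
# output, with one 0/1 entry per class. BCEWithLogitsLoss applies the sigmoid
# internally, so it is fed raw logits.
multi_hot = F.one_hot(target, num_classes=3).float()  # [4, 3]
bce_loss = nn.BCEWithLogitsLoss()(logits, multi_hot)

print(ce_loss.item(), bce_loss.item())
```

Note that plain `nn.BCELoss` additionally expects probabilities in [0, 1], so it would need a sigmoid on the model output.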

can you explain it more? I don’t understand what should I do?

You would need to unsqueeze dim1 as shown in my example in y before the loss calculation:

loss = criterion(y_val_pred, y)

I did this but I still get this warning:

C:\Users\seyed\anaconda3\lib\site-packages\torch\nn\modules\loss.py:536: UserWarning: Using a target size (torch.Size([128])) that is different to the input size (torch.Size([128, 1])). This will likely lead to incorrect results due to broadcasting. Please ensure they have the same size.

return F.mse_loss(input, target, reduction=self.reduction)

C:\Users\seyed\anaconda3\lib\site-packages\torch\nn\modules\loss.py:536: UserWarning: Using a target size (torch.Size([92])) that is different to the input size (torch.Size([92, 1])). This will likely lead to incorrect results due to broadcasting. Please ensure they have the same size.

return F.mse_loss(input, target, reduction=self.reduction)

I also have another question: my dataset is in CSV format and has 47 columns. If I want to choose the input dim for my LSTM network, what should I choose? 46 (if 46 columns are features and one is the target)? @ptrblck
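A hedged sketch touching both points: `input_size` of `nn.LSTM` is the number of features per time step, so 46 would fit if 46 of the 47 columns are features. For the warning, `unsqueeze` is not in-place, so its result has to be assigned or passed on directly; forgetting that is one possible reason the warning persists. The window length, hidden size and final linear layer below are made-up values for illustration.

```python
import torch
import torch.nn as nn

n_features = 46  # 47 CSV columns minus 1 target column (assumed layout)
seq_len = 10     # hypothetical window length
hidden = 32      # hypothetical hidden size

lstm = nn.LSTM(input_size=n_features, hidden_size=hidden, batch_first=True)
head = nn.Linear(hidden, 1)

x = torch.randn(128, seq_len, n_features)  # [batch, seq, features]
y = torch.randn(128)                       # targets as loaded: [batch]

out, _ = lstm(x)          # out: [128, seq_len, hidden]
pred = head(out[:, -1])   # use the last time step -> [128, 1]

# unsqueeze returns a new tensor; y itself keeps the shape [128].
y_matched = y.unsqueeze(1)                 # [128, 1]
loss = nn.MSELoss()(pred, y_matched)       # same shapes, so no broadcasting warning
print(pred.shape, y_matched.shape, loss.item())
```

Squeezing the prediction instead (`pred.squeeze(1)` against `y`) would work equally well; what matters is that both arguments of the loss have the same shape.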