# from __future__ import print_function

# #%matplotlib inline

# import argparse

import os

import PIL

from PIL import Image

# import glob

# import xml.etree.ElementTree as ET

import random

import torch

import torch.nn as nn

# import torch.nn.parallel

# import torch.backends.cudnn as cudnn

import torch.optim as optim

import torch.utils.data

import torchvision.datasets as dset

import torchvision.transforms as transforms

import torchvision.transforms.functional as tf

import torchvision.utils as vutils

import numpy as np

import matplotlib.pyplot as plt

# import matplotlib.animation as animation

# import seaborn as sns

# from IPython.display import HTML

from torchvision.utils import save_image

# from torch.optim.lr_scheduler import StepLR, ReduceLROnPlateau, CosineAnnealingLR

# from tqdm import tqdm_notebook as tqdm

# from IPython.display import clear_output

# from scipy.stats import truncnorm

%matplotlib inline

# plt.rcParams['image.interpolation'] = 'nearest'

dataloader = torch.utils.data.DataLoader(dataset, batch_size=batch_size,shuffle=True, num_workers=workers)

# Always good to check if gpu support available or not

# Decide which device we want to run on

device = torch.device("cuda:0" if (torch.cuda.is_available() and ngpu > 0) else "cpu")

print(device)

# visualization batch image

real_batch = iter(dataloader).next()

plt.figure(figsize=(25,15)) #img size 25x15(hxw)

plt.axis("off")

plt.title("Training Images")

image = np.transpose(vutils.make_grid(real_batch[0].to(device)[:4], normalize=True).cpu(),axes=(1,2,0)) #4 img show

plt.imshow(image)

# image = np.transpose(vutils.make_grid(real_batch[0].to(device), normalize=True).cpu(),axes=(1,2,0))

# plt.imshow(image)

def weights_init(m):

classname = m.__class__.__name__

if classname.find('Conv') != -1:

nn.init.normal_(m.weight.data, 0.0, 0.02)

elif classname.find('BatchNorm') != -1:

nn.init.normal_(m.weight.data, 1.0, 0.02)

nn.init.constant_(m.bias.data, 0)

class Generator(nn.Module):

def __init__(self, ngpu, nz=nz, ngf=ngf, nc=nc, n_class=n_class):

super(Generator, self).__init__()

self.ngpu = ngpu

self.ReLU = nn.ReLU(True)

self.Tanh = nn.Tanh()

self.conv1 = nn.ConvTranspose2d(nz+n_class, ngf * 8, 4, 1, 0, bias=False)

self.BatchNorm1 = nn.BatchNorm2d(ngf * 8)

self.conv2 = nn.ConvTranspose2d(ngf * 8, ngf * 4, 4, 2, 1, bias=False)

self.BatchNorm2 = nn.BatchNorm2d(ngf * 4)

self.conv3 = nn.ConvTranspose2d(ngf * 4, ngf * 2, 4, 2, 1, bias=False)

self.BatchNorm3 = nn.BatchNorm2d(ngf * 2)

self.conv4 = nn.ConvTranspose2d(ngf * 2, ngf * 1, 4, 2, 1, bias=False)

self.BatchNorm4 = nn.BatchNorm2d(ngf * 1)

self.conv5 = nn.ConvTranspose2d(ngf * 1, nc, 4, 2, 1, bias=False)

self.apply(weights_init)

def forward(self, input):

x = self.conv1(input)

x = self.BatchNorm1(x)

x = self.ReLU(x)

x = self.conv2(x)

x = self.BatchNorm2(x)

x = self.ReLU(x)

x = self.conv3(x)

x = self.BatchNorm3(x)

x = self.ReLU(x)

x = self.conv4(x)

x = self.BatchNorm4(x)

x = self.ReLU(x)

x = self.conv5(x)

output = self.Tanh(x)

return output

# Create the generator

netG = Generator(ngpu).to(device)

# Apply the weights_init function to randomly initialize all weights

netG.apply(weights_init)

# Print the model

print(netG)

class Discriminator(nn.Module):

def __init__(self, ngpu, ndf=ndf, nc=nc, n_class=n_class):

super(Discriminator, self).__init__()

self.LeakyReLU = nn.LeakyReLU(0.2, inplace=True)

self.conv1 = nn.Conv2d(nc, ndf, 4, 2, 1, bias=False)

self.conv2 = nn.Conv2d(ndf, ndf * 2, 4, 2, 1, bias=False)

self.BatchNorm2 = nn.BatchNorm2d(ndf * 2)

self.conv3 = nn.Conv2d(ndf * 2, ndf * 4, 4, 2, 1, bias=False)

self.BatchNorm3 = nn.BatchNorm2d(ndf * 4)

self.conv4 = nn.Conv2d(ndf * 4, ndf * 8, 4, 2, 1, bias=False)

self.BatchNorm4 = nn.BatchNorm2d(ndf * 8)

self.conv5 = nn.Conv2d(ndf * 8, ndf * 1, 4, 1, 0, bias=False)

self.disc_linear = nn.Linear(ndf * 1, 1)

self.aux_linear = nn.Linear(ndf * 1, n_class)

self.softmax = nn.Softmax()

self.sigmoid = nn.Sigmoid()

self.ndf = ndf

self.apply(weights_init)

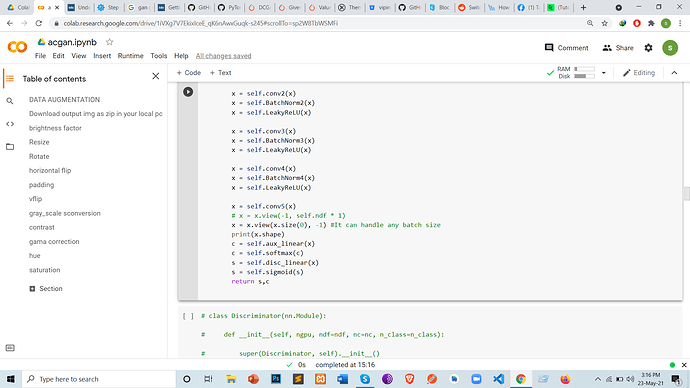

def forward(self, input):

x = self.conv1(input)

x = self.LeakyReLU(x)

x = self.conv2(x)

x = self.BatchNorm2(x)

x = self.LeakyReLU(x)

x = self.conv3(x)

x = self.BatchNorm3(x)

x = self.LeakyReLU(x)

x = self.conv4(x)

x = self.BatchNorm4(x)

x = self.LeakyReLU(x)

x = self.conv5(x)

# x = x.view(-1, self.ndf * 1)

x = x.view(x.size(0), -1) #It can handle any batch size

c = self.aux_linear(x)

c = self.softmax(c)

s = self.disc_linear(x)

s = self.sigmoid(s)

return s,c

# Create the Discriminator

netD = Discriminator(ngpu).to(device)

# Apply the weights_init function to randomly initialize all weights

netD.apply(weights_init)

# Print the model

print(netD)

# Setup Adam optimizers for both G and D

optimizerD = optim.Adam(netD.parameters(), lr=lr, betas=(beta1, 0.999))

optimizerG = optim.Adam(netG.parameters(), lr=lr, betas=(beta1, 0.999))

def onehot_encode(label, device, n_class=n_class):

eye = torch.eye(n_class, device=device)

return eye[label].view(-1, n_class, 1, 1)

def concat_image_label(image, label, device, n_class=n_class):

B, C, H, W = image.shape

oh_label = onehot_encode(label, device=device)

oh_label = oh_label.expand(B, n_class, H, W)

return torch.cat((image, oh_label), dim=1)

def concat_noise_label(noise, label, device):

oh_label = onehot_encode(label, device=device)

return torch.cat((noise, oh_label), dim=1)

def show_generated_img(num_show):

gen_images = []

for _ in range(num_show):

noise = torch.randn(1, nz, 1, 1, device=device) #4

covid_label = torch.randint(0, n_class, (1, ), device=device)

gen_image = concat_noise_label(noise, covid_label, device)

gen_image = netG(gen_image).to("cpu").clone().detach().squeeze(0)

# gen_image = gen_image.numpy().transpose(0, 2, 3, 1)

gen_image = gen_image.numpy().transpose(1, 2, 0)

gen_images.append(gen_image)

fig = plt.figure(figsize=(10, 5))

# fig = plt.figure(figsize=(299,299))

for i, gen_image in enumerate(gen_images):

ax = fig.add_subplot(1, num_show, i + 1, xticks=[], yticks=[])

plt.imshow(gen_image + 1 / 2)

print(gen_image.shape)

# plt.imshow(gen_image + 1 / 2, cmap="gray_r")

plt.show()

# Training Loop

# Lists to keep track of progress

G_losses = []

D_losses = []

fake_img_list=[]

iters = 0

print("Starting Training Loop...")

for epoch in range(num_epochs):

for i, data in enumerate(dataloader,0):

# prepare real image and label

real_label = data[1].to(device)

real_image = data[0].to(device)

b_size = real_label.size(0)

# prepare fake image and label

fake_label = torch.randint(n_class, (b_size,), dtype=torch.long, device=device)

noise = torch.randn(b_size, nz, 1, 1, device=device).squeeze(0)

noise = concat_noise_label(noise, real_label, device)

fake_image = netG(noise)

# target

real_target = torch.full((b_size,), r_label, device=device, dtype=torch.float)

fake_target = torch.full((b_size,), f_label, device=device, dtype=torch.float)

real_target = real_target.view(-1,1)

fake_target = fake_target.view(-1,1)

#-----------------------

# Update Discriminator

#-----------------------

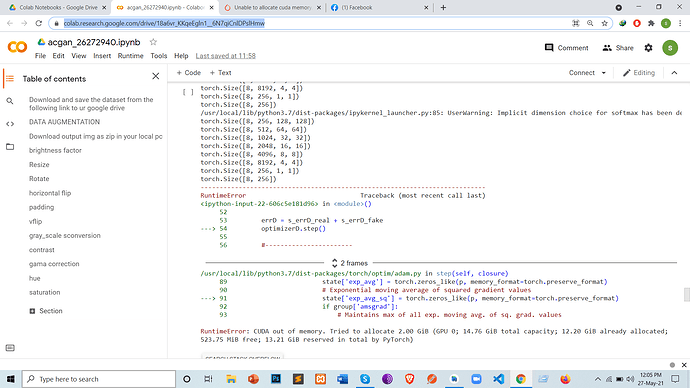

netD.zero_grad()

# train with real

s_output, c_output = netD(real_image)

s_errD_real = s_criterion(s_output, real_target)

c_errD_real = c_criterion(c_output, real_label)

errD_real = s_errD_real + c_errD_real

errD_real.backward()

D_x = s_output.data.mean()

# train with fake

s_output,c_output = netD(fake_image.detach())

s_errD_fake = s_criterion(s_output, fake_target) # realfake

c_errD_fake = c_criterion(c_output, real_label) # class

errD_fake = s_errD_fake + c_errD_fake

errD_fake.backward()

D_G_z1 = s_output.data.mean()

errD = s_errD_real + s_errD_fake

optimizerD.step()

#-----------------------

# Update Generator

#-----------------------

netG.zero_grad()

s_output,c_output = netD(fake_image)

s_errG = s_criterion(s_output, real_target) # realfake

c_errG = c_criterion(c_output, real_label) # class

errG = s_errG + c_errG

errG.backward()

D_G_z2 = s_output.data.mean()

optimizerG.step()

# Save Losses for plotting later

G_losses.append(errG.item())

D_losses.append(errD.item())

iters += 1

# scheduler.step(errD.item())

print('[%d/%d][%d/%d]\nLoss_D: %.4f\tLoss_G: %.4f\nD(x): %.4f\tD(G(z)): %.4f / %.4f'

% (epoch+1, num_epochs, i+1, len(dataloader),

errD.item(), errG.item(), D_x, D_G_z1, D_G_z2))

show_generated_img(num_show)

# --------- save fake image ----------

fake_image = netG(fixed_noise_label)

vutils.save_image(fake_image.detach(), '{}/fake_samples_epoch_{:03d}.png'.format(outf, epoch + 1),

normalize=True, nrow=5)

fake_img_list.append(fake_image)

I have provided you the full code as well the drive link to access dataset.would you kindly check it?i am new in this platform as well in data science so i am having too many trouble to deal with these.sorry for taking your times and thank you so much for your every response.