Hi!

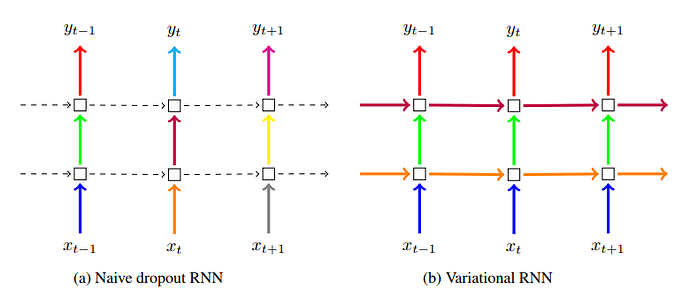

I’ve searched the forums a bit and found this answer, "Dropout in LSTM - #3 by Nick_Young", which says that the dropout applied between the layers of an LSTM uses a different mask at each timestep. Is there an LSTM implementation, or a code snippet, that applies the same mask at every timestep between layers? This is needed to satisfy the theoretical conditions in this paper: https://arxiv.org/pdf/1512.05287.pdf.

In the image above, the squares are LSTM cells. I need the connection between cells (the green arrow) to be masked by the same values at every timestep!
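To make the request concrete, here is a rough sketch of the behaviour I have in mind, not a confirmed or official implementation. It assumes batch-first tensors of shape `(batch, seq_len, features)` and stacks single-layer `nn.LSTM` modules so that a custom dropout can sit between them. The names `LockedDropout` and `StackedLSTM` are my own placeholders, and the sketch only masks the between-layer outputs, not the recurrent hidden-to-hidden connections.

```python
import torch
import torch.nn as nn


class LockedDropout(nn.Module):
    """Dropout that samples one mask per sequence and reuses it at every timestep.

    Hypothetical helper for illustration; assumes input of shape
    (batch, seq_len, features).
    """

    def __init__(self, p: float = 0.5):
        super().__init__()
        if not 0.0 <= p < 1.0:
            raise ValueError("p must be in [0, 1)")
        self.p = p

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # No dropout at evaluation time or when p is zero.
        if not self.training or self.p == 0.0:
            return x
        keep = 1.0 - self.p
        # The mask has size 1 on the time axis, so broadcasting applies
        # the same dropped units to every timestep of a given sequence.
        probs = torch.full(
            (x.size(0), 1, x.size(2)), keep, dtype=x.dtype, device=x.device
        )
        mask = torch.bernoulli(probs)
        # Inverted dropout: rescale so the expected activation is unchanged.
        return x * mask / keep


class StackedLSTM(nn.Module):
    """Single-layer LSTMs stacked manually, with LockedDropout between layers."""

    def __init__(self, input_size: int, hidden_size: int,
                 num_layers: int, dropout: float = 0.5):
        super().__init__()
        self.layers = nn.ModuleList([
            nn.LSTM(input_size if i == 0 else hidden_size,
                    hidden_size, batch_first=True)
            for i in range(num_layers)
        ])
        self.drop = LockedDropout(dropout)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        for i, lstm in enumerate(self.layers):
            x, _ = lstm(x)
            # Mask only the connections between layers, not the final output.
            if i < len(self.layers) - 1:
                x = self.drop(x)
        return x
```

With something like `StackedLSTM(10, 16, num_layers=3)` in training mode, each call to `forward` would draw a fresh mask per sequence and per layer boundary, but that mask stays fixed along the time axis.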

Thanks!