I have encountered a very strange error with datatypes. I am very new to PyTorch, so I apologize if this is a simple mistake.

A minimal version that reproduces the error is:

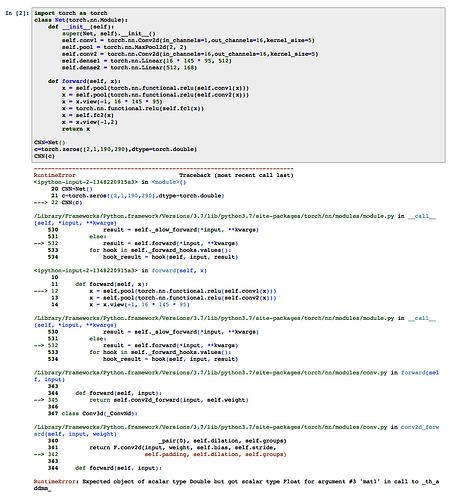

    import torch

    class Net(torch.nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.conv1 = torch.nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5)
            self.pool = torch.nn.MaxPool2d(2, 2)
            self.conv2 = torch.nn.Conv2d(in_channels=16, out_channels=16, kernel_size=5)
            self.dense1 = torch.nn.Linear(16 * 145 * 95, 512)
            self.dense2 = torch.nn.Linear(512, 168)

        def forward(self, x):
            # Two conv -> ReLU -> max-pool stages, then two dense layers
            x = self.pool(torch.nn.functional.relu(self.conv1(x)))
            x = self.pool(torch.nn.functional.relu(self.conv2(x)))
            x = x.view(-1, 16 * 145 * 95)
            x = torch.nn.functional.relu(self.dense1(x))
            x = self.dense2(x)
            x = x.view(-1, 2)
            return x

    CNN = Net()
    # Double-precision (float64) input
    c = torch.zeros((2, 1, 190, 290), dtype=torch.double)
    CNN(c)

I have tried several variations: adding .float() to the network, adding .double() to the inputs, etc. None of them changed the error message.
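
For reference, here is a minimal sketch of what the two casts look like when the result is actually used. It assumes the mismatch is between a float64 input and float32 parameters (the PyTorch default); the single `Conv2d` layer is a hypothetical stand-in for the full network, not the code from my training script. One thing to watch is that `Tensor.float()` returns a new tensor rather than changing the original, so the result has to be reassigned.

```python
import torch

# Hypothetical stand-in for the first layer of the network above.
net = torch.nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5)
x = torch.zeros((2, 1, 190, 290), dtype=torch.double)

# Option A: cast the input to float32 to match the default parameter dtype.
# Tensor.float() returns a NEW tensor, so the result must be kept and used.
x_float = x.float()
out_a = net(x_float)

# Option B: cast the module's parameters to float64 to match the input.
# Module.double() converts the parameters in place and returns the module.
net_double = torch.nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5).double()
out_b = net_double(x)

print(out_a.dtype, out_b.dtype)
```

Either option should make the input and the weights agree; which one is appropriate depends on whether double precision is actually needed.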

The full error message is displayed below.

Thank you so much in advance.

-Core Park

    ===== HYPERPARAMETERS =====
    batch_size= 32
    epochs= 5
    learning_rate= 0.001
    torch.DoubleTensor

    RuntimeError                              Traceback (most recent call last)
    in ()
          1 CNN = Net()
    ----> 2 trainNet(CNN, batch_size=32, n_epochs=5, learning_rate=0.001)

    in trainNet(net, batch_size, n_epochs, learning_rate)
         38 #Forward pass, backward pass, optimize
         39 print(inputs.type())
    ---> 40 outputs = net(inputs)
         41 loss_size = loss(outputs, labels)
         42 loss_size.backward()

    /Library/Frameworks/Python.framework/Versions/3.7/lib/python3.7/site-packages/torch/nn/modules/module.py in __call__(self, *input, **kwargs)
        530 result = self._slow_forward(*input, **kwargs)
        531 else:
    --> 532 result = self.forward(*input, **kwargs)
        533 for hook in self._forward_hooks.values():
        534 hook_result = hook(self, input, result)

    in forward(self, x)
          9
         10 def forward(self, x):
    ---> 11 x = self.pool(torch.nn.functional.relu(self.conv1(x)))
         12 x = self.pool(torch.nn.functional.relu(self.conv2(x)))
         13 x = x.view(-1, 16 * 145 * 95)

    /Library/Frameworks/Python.framework/Versions/3.7/lib/python3.7/site-packages/torch/nn/modules/module.py in __call__(self, *input, **kwargs)
        530 result = self._slow_forward(*input, **kwargs)
        531 else:
    --> 532 result = self.forward(*input, **kwargs)
        533 for hook in self._forward_hooks.values():
        534 hook_result = hook(self, input, result)

    /Library/Frameworks/Python.framework/Versions/3.7/lib/python3.7/site-packages/torch/nn/modules/conv.py in forward(self, input)
        343
        344 def forward(self, input):
    --> 345 return self.conv2d_forward(input, self.weight)
        346
        347 class Conv3d(_ConvNd):

    /Library/Frameworks/Python.framework/Versions/3.7/lib/python3.7/site-packages/torch/nn/modules/conv.py in conv2d_forward(self, input, weight)
        340 _pair(0), self.dilation, self.groups)
        341 return F.conv2d(input, weight, self.bias, self.stride,
    --> 342 self.padding, self.dilation, self.groups)
        343
        344 def forward(self, input):

    RuntimeError: Expected object of scalar type Double but got scalar type Float for argument #3 'mat1' in call to th_addmm
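
In case it helps anyone reproduce this, below is a small sketch for checking which side of the call is Double and which is Float. It assumes the default dtype has not been changed with `torch.set_default_dtype`, and it uses a hypothetical single `Conv2d` layer with a small input rather than my full network. The exact wording of the error message may differ between PyTorch versions.

```python
import torch

# Hypothetical single layer; its parameters use the default dtype (float32).
conv = torch.nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5)
x = torch.zeros((2, 1, 32, 32), dtype=torch.double)

# Compare the dtype of the input with the dtype of the layer's weights.
print("input dtype: ", x.dtype)
print("weight dtype:", conv.weight.dtype)

# Feeding a float64 input to float32 weights raises a RuntimeError.
try:
    conv(x)
    mismatch_raised = False
except RuntimeError as err:
    mismatch_raised = True
    print("RuntimeError:", err)
```

Printing both dtypes right before the failing call shows whether a cast applied earlier actually took effect.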