I will post a link to the minimal reproduction code, but the env is really simple.

(link: Minimal PPO training environment (gist.github.com))

A flat 2D world [0,1]×[0,1] has an agent and a target destination it needs to get to.

Thus the observation space is continuous with shape (4,): {x0, y0, x1, y1}, the coordinates of the two positions.

The action space is essentially a direction to step in, and how large of a step to make.

Neither action compounds with previous actions; each step stands on its own.

Both action components are in the range [-1,1], so the action space is continuous with shape (2,).

Location is clipped at the world boundaries to be within [0,1] as well.
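
To make the dynamics described above concrete, here is a rough sketch of a single position update. It is not the code from the gist. The mapping from the two action components to an angle and a step length, the `MAX_STEP` value, and the helper names `clip01` and `move` are all assumptions made for illustration.

```python
import math

MAX_STEP = 0.05  # hypothetical maximum step length; the post does not give one


def clip01(v):
    """Clamp a coordinate to the world bounds [0, 1]."""
    return min(1.0, max(0.0, v))


def move(pos, action):
    """Apply one action to the agent position and return the new position.

    action[0] in [-1, 1] is mapped to an angle in [-pi, pi] (assumed mapping).
    action[1] in [-1, 1] is mapped to a length in [0, MAX_STEP] (assumed mapping).
    The result depends only on the current position and this action,
    so nothing carries over from earlier steps.
    """
    angle = action[0] * math.pi
    length = (action[1] + 1.0) / 2.0 * MAX_STEP
    x = clip01(pos[0] + length * math.cos(angle))
    y = clip01(pos[1] + length * math.sin(angle))
    return (x, y)
```

Under these assumptions, an agent pressed against a wall that keeps choosing an outward direction stays exactly where it is, which matches the "stuck at the border" behaviour described further down.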

I’ve tried running the exact same PPO method on the BipedalWalker-v3 gym env, and it performs extremely well, steadily improving the average reward until the environment is essentially solved.

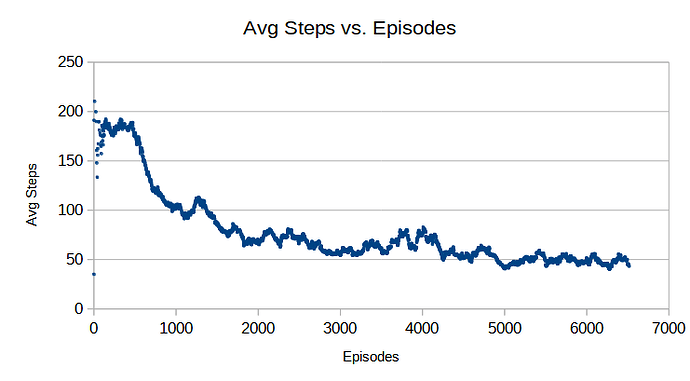

However, switching over to my environment, it performs abysmally.

After 1 million time steps (much longer than BipedalWalker needs for good results), it reaches the destination less than half the time, and even then, most of those successes look accidental.

The rest of the time it just buries itself in a corner, or keeps trying to go through the world border, even though its position is clipped and it doesn’t get anywhere.

I’d think my environment is dramatically simpler and easier to learn, so why does it not work at all?

I’ve tested that it can move in all directions and can always reach its target well within the step limit if it wanted to. The episode is truncated after 500 time steps with a -10 reward; reaching the target gives +10; otherwise, the reward is just -dist, where dist is the distance from the agent to the target.
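
The reward scheme just described could be written roughly as follows. This is a sketch, not the gist's code: `REACH_RADIUS` (how close counts as "reached"), the function name `reward_and_done`, and the choice to check for success before the step limit are all assumptions.

```python
import math

REACH_RADIUS = 0.05  # hypothetical; the post does not say how close counts as "reached"
MAX_STEPS = 500      # step limit stated in the post


def reward_and_done(agent, target, step_count):
    """Return (reward, terminated, truncated) for the current state."""
    dist = math.hypot(agent[0] - target[0], agent[1] - target[1])
    if dist <= REACH_RADIUS:
        # Target reached: +10 and the episode terminates.
        return 10.0, True, False
    if step_count >= MAX_STEPS:
        # Step limit hit without reaching the target: -10 and truncation.
        return -10.0, False, True
    # Otherwise the per-step reward is the negative distance to the target.
    return -dist, False, False
```

Since the world is the unit square, dist never exceeds sqrt(2), so every non-final step contributes a reward between about -1.41 and 0.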

Even a random sample (random walk) reaches the target relatively often.

What is wrong with my environment / approach?