I am comparing the performance of two image classification models, a ResNet-9 and a VGG-16. Their accuracies on the same test set are:

ResNet-9 = 99.25% accuracy

VGG-16 = 97.90% accuracy

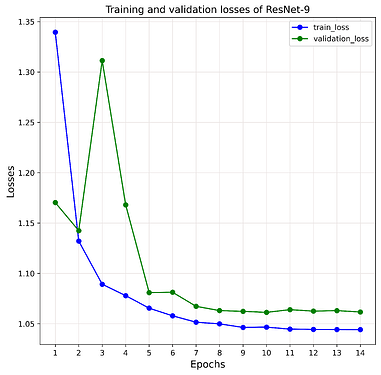

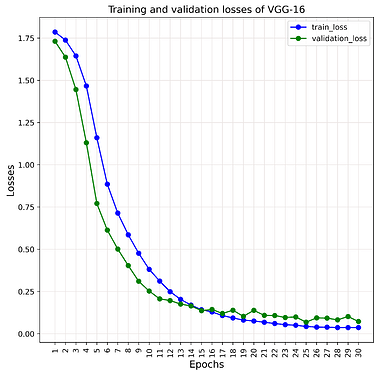

However, the training loss curves shown in the images above show that VGG-16 reaches a lower loss than ResNet-9.

I am using `torch.nn.CrossEntropyLoss()` for both VGG-16 and ResNet-9. I would have expected ResNet-9 to have the lower loss, because it performs better on the test set, but this is not the case.
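To make the question concrete, here is a toy sketch of how mean cross-entropy and accuracy can rank two classifiers differently. The logits are made up for illustration and are not outputs from my actual models. The `cross_entropy` and `accuracy` helpers are hypothetical pure-Python stand-ins; `cross_entropy` computes the mean negative log-softmax probability of the true class, which is what `torch.nn.CrossEntropyLoss()` reports with its default mean reduction.

```python
import math

def cross_entropy(logits, labels):
    """Mean of -log(softmax(logits)[label]) over all samples."""
    total = 0.0
    for row, label in zip(logits, labels):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[label]
    return total / len(labels)

def accuracy(logits, labels):
    """Fraction of samples whose highest logit is the true class."""
    correct = sum(
        1 for row, label in zip(logits, labels) if row.index(max(row)) == label
    )
    return correct / len(labels)

# Made-up two-class example with four samples.
labels = [0, 1, 0, 1]

# Always right, but with a small margin between the two logits.
cautious_logits = [[0.2, 0.0], [0.0, 0.2], [0.2, 0.0], [0.0, 0.2]]

# Right with a large margin on three samples, wrong on the last one.
confident_logits = [[5.0, 0.0], [0.0, 5.0], [5.0, 0.0], [1.0, 0.0]]

for name, logits in [("cautious", cautious_logits), ("confident", confident_logits)]:
    print(name, "accuracy:", accuracy(logits, labels),
          "loss:", round(cross_entropy(logits, labels), 4))
```

In this toy case the "cautious" classifier has the higher accuracy and also the higher loss, because cross-entropy rewards confident correct predictions while accuracy only counts which class wins. This only shows that the two metrics can disagree on the same data. My curves additionally compare training loss with test accuracy, so I am not sure whether this is the explanation.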

Is it normal for the model with the higher test accuracy to also have the higher training loss?