I came across this paper, and I am wondering how to use it with code like that in the PyTorch transfer learning tutorial.

How can I apply this kind of attention mechanism to it, given that I don't have access to the full network and am using a model pretrained on ImageNet?

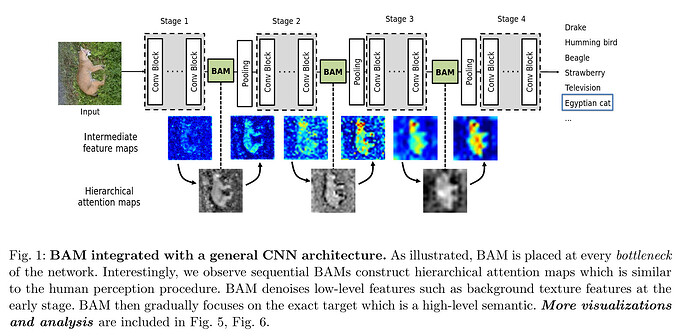

The figure above is from Park, Jongchan, Sanghyun Woo, Joon-Young Lee, and In So Kweon. "A simple and light-weight attention module for convolutional neural networks." International Journal of Computer Vision 128, no. 4 (2020): 783-798.
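
Here is a rough sketch of what I have in mind: wrap existing stages of the pretrained torchvision model instead of rebuilding the network. This is my own approximation of BAM from the paper's description (a channel branch plus a dilated-conv spatial branch, combined as F + F ⊗ σ(M_c(F) + M_s(F))), not the authors' code; the class names, the `add_bam_to_resnet` helper, the reduction ratio 16 and dilation 4 are assumptions on my part.

```python
import torch
import torch.nn as nn


class ChannelGate(nn.Module):
    """Channel branch: global average pool -> bottleneck MLP -> one logit per channel."""

    def __init__(self, channels: int, reduction: int = 16):
        super().__init__()
        hidden = max(channels // reduction, 1)
        self.mlp = nn.Sequential(
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
            nn.Linear(channels, hidden),
            nn.BatchNorm1d(hidden),  # needs batch size > 1 in training mode
            nn.ReLU(inplace=True),
            nn.Linear(hidden, channels),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.mlp(x)[:, :, None, None]  # (N, C, 1, 1), broadcasts over H, W


class SpatialGate(nn.Module):
    """Spatial branch: 1x1 reduce -> two dilated 3x3 convs -> one logit per pixel."""

    def __init__(self, channels: int, reduction: int = 16, dilation: int = 4):
        super().__init__()
        hidden = max(channels // reduction, 1)
        layers = [nn.Conv2d(channels, hidden, 1), nn.BatchNorm2d(hidden), nn.ReLU(inplace=True)]
        for _ in range(2):
            # padding == dilation keeps H and W unchanged for a 3x3 kernel
            layers += [nn.Conv2d(hidden, hidden, 3, padding=dilation, dilation=dilation),
                       nn.BatchNorm2d(hidden), nn.ReLU(inplace=True)]
        layers.append(nn.Conv2d(hidden, 1, 1))
        self.body = nn.Sequential(*layers)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.body(x)  # (N, 1, H, W), broadcasts over C


class BAM(nn.Module):
    """F' = F + F * sigmoid(M_c(F) + M_s(F))"""

    def __init__(self, channels: int, reduction: int = 16, dilation: int = 4):
        super().__init__()
        self.channel = ChannelGate(channels, reduction)
        self.spatial = SpatialGate(channels, reduction, dilation)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        attn = torch.sigmoid(self.channel(x) + self.spatial(x))  # (N, C, H, W)
        return x + x * attn


def add_bam_to_resnet(resnet: nn.Module, channels=(64, 128, 256)) -> nn.Module:
    """Hypothetical helper: append a BAM after layer1, layer2 and layer3, in place.

    `channels` must match each stage's output width: (64, 128, 256) for
    resnet18/34, (256, 512, 1024) for resnet50/101/152.
    """
    for name, c in zip(("layer1", "layer2", "layer3"), channels):
        setattr(resnet, name, nn.Sequential(getattr(resnet, name), BAM(c)))
    return resnet
```

With torchvision this would be something like `model = models.resnet18(weights=models.ResNet18_Weights.IMAGENET1K_V1)`, then `add_bam_to_resnet(model)` and replacing `model.fc` as in the tutorial. The BAM layers start untrained, and since x + x·σ(·) rescales the features the later pretrained stages expect, I assume those stages would need fine-tuning too.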

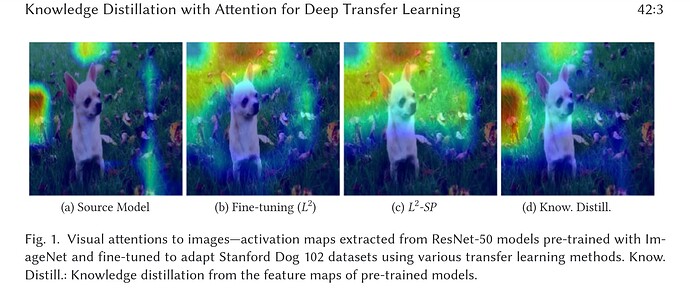

Also, Li, Xingjian, Haoyi Xiong, Zeyu Chen, Jun Huan, Ji Liu, Cheng-Zhong Xu, and Dejing Dou. "Knowledge Distillation with Attention for Deep Transfer Learning of Convolutional Networks." ACM Transactions on Knowledge Discovery from Data (TKDD) 16, no. 3 (2021): 1-20, is another example of what I am interested in: transfer learning with a pretrained model.
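
I am not sure exactly how Li et al. define their attention, so as a stand-in here is a sketch of the closely related attention-transfer loss (Zagoruyko and Komodakis, "Paying More Attention to Attention"), with the frozen ImageNet model as teacher and the fine-tuned copy as student. `FeatureTap`, `distillation_step`, the tapped layers and the `beta` weight are my own placeholders, not part of either paper's code.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def attention_map(feat: torch.Tensor, p: int = 2) -> torch.Tensor:
    """Channel-wise mean of |feat|^p, flattened and L2-normalised per sample."""
    am = feat.abs().pow(p).mean(dim=1)        # (N, H, W)
    return F.normalize(am.flatten(1), dim=1)  # (N, H*W)


def attention_transfer_loss(student_feats, teacher_feats, p: int = 2):
    """Sum over tapped layers of the L2 distance between normalised attention maps."""
    return sum(
        (attention_map(s, p) - attention_map(t, p)).norm(dim=1).mean()
        for s, t in zip(student_feats, teacher_feats)
    )


class FeatureTap:
    """Record the outputs of named submodules with forward hooks."""

    def __init__(self, model: nn.Module, layer_names):
        self.layer_names = list(layer_names)
        self.feats = {}
        modules = dict(model.named_modules())
        self.handles = [modules[n].register_forward_hook(self._save(n)) for n in self.layer_names]

    def _save(self, name):
        def hook(module, inputs, output):
            self.feats[name] = output
        return hook

    def get(self):
        return [self.feats[n] for n in self.layer_names]

    def remove(self):
        for h in self.handles:
            h.remove()


def distillation_step(student, teacher, s_tap, t_tap, images, labels, optimizer, beta=1.0):
    """One step: task loss on the new head + attention matching against the frozen teacher."""
    teacher.eval()
    with torch.no_grad():
        teacher(images)  # only needed to fill t_tap
    logits = student(images)
    at = attention_transfer_loss(s_tap.get(), t_tap.get())
    loss = F.cross_entropy(logits, labels) + beta / 2 * at  # beta is a hyperparameter to tune
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    return loss.item()
```

With torchvision ResNets I would tap something like `["layer2", "layer3", "layer4"]` on both models, using the pretrained model as `teacher` and a `copy.deepcopy` of it with a new `fc` as `student`.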

I am open to any method, such as Grad-CAM or RISE, that can be used to visualize the saliency/attention map (as in Fig. 1d) in the transfer learning setting. It would be really great if you could point me to any existing starter notebooks.
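
As a starting point for the visualization part, a minimal Grad-CAM sketch using only a forward hook plus a tensor gradient hook; it assumes a classifier that returns logits, and `grad_cam` is a hypothetical helper, not a library function. Cloning the input with `requires_grad_(True)` is meant to keep gradients flowing even when the backbone is frozen, as in the tutorial's fixed-feature-extractor variant.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def grad_cam(model: nn.Module, target_layer: nn.Module, image: torch.Tensor, class_idx=None):
    """Return an (H, W) Grad-CAM heatmap in [0, 1] for one image of shape (1, C, H, W)."""
    store = {}

    def fwd_hook(module, inputs, output):
        store["act"] = output
        # capture d(score)/d(activation) when backward reaches this tensor
        output.register_hook(lambda grad: store.__setitem__("grad", grad))

    handle = target_layer.register_forward_hook(fwd_hook)
    try:
        model.eval()  # note: leaves the model in eval mode
        image = image.clone().requires_grad_(True)  # grads flow even if weights are frozen
        logits = model(image)
        if class_idx is None:
            class_idx = int(logits.argmax(dim=1))
        model.zero_grad()
        logits[0, class_idx].backward()
    finally:
        handle.remove()

    act, grad = store["act"], store["grad"]              # (1, K, h, w)
    weights = grad.mean(dim=(2, 3), keepdim=True)        # alpha_k: pooled gradients
    cam = F.relu((weights * act).sum(dim=1, keepdim=True))
    cam = F.interpolate(cam, size=image.shape[-2:], mode="bilinear", align_corners=False)
    cam = cam[0, 0] - cam.min()
    return (cam / (cam.max() + 1e-8)).detach()
```

For a torchvision ResNet, `target_layer` would typically be `model.layer4`, and the returned map can be overlaid on the un-normalized input image with matplotlib.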