I would like to know how this can be done in PyTorch.

    import torch.nn as nn

    ############ [Convolution] -> [Batch Normalization] -> [ReLU] ############
    def Conv_block2d(in_channels, out_channels, *args, **kwargs):
        # The conv bias is disabled because BatchNorm2d adds its own learnable shift
        return nn.Sequential(
            nn.Conv2d(in_channels, out_channels, *args, bias=False, **kwargs),
            nn.BatchNorm2d(out_channels, eps=0.001),
            nn.ReLU(inplace=True),
        )

    ############################## Main Model ##############################
    class MainBlock(nn.Module):
        """Two stacked [Convolution] -> [Batch Normalization] -> [ReLU] -> [MaxPool] stages."""

        def __init__(self):
            super(MainBlock, self).__init__()
            """
            size = [3,15,33,3,3]
            for in_c,out_c,k in zip(size[:2],size[1:3],size[3:]):
                print("in channel",in_c,"out channel",out_c,"kernel size",k)

            [Convolution] -> [Batch Normalization] -> [ReLU] -> [MaxPool]

            in channel 3 out channel 15 kernel size 3
            (256x256x3) * (3x3x15) = (254x254x15) -> pool (2x2, stride 2) = (127x127x15)

            in channel 15 out channel 33 kernel size 3
            (127x127x15) * (3x3x33) = (125x125x33) -> pool (2x2, stride 2) = (62x62x33)
            """
            # [in_channels, mid_channels, out_channels, kernel_1, kernel_2]
            self.block1_size = [3, 15, 33, 3, 3]
            self.block1_out = [
                nn.Sequential(*Conv_block2d(in_c, out_c, kernel_size=k), nn.MaxPool2d(2, 2))
                for in_c, out_c, k in zip(self.block1_size[:2], self.block1_size[1:3], self.block1_size[3:])
            ]
            # Wrapping the list in nn.Sequential registers the sub-modules with the model
            self.block1_output = nn.Sequential(*self.block1_out)

        def forward(self, x):
            # (62x62x33) for a 256x256x3 input
            x = self.block1_output(x)
            return x
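
To double-check the shape arithmetic in the docstring above, here is a small sketch in plain Python (no PyTorch needed). `conv_out` is a helper name made up for illustration. It assumes a 256x256 input, convolutions with stride 1 and no padding, and 2x2 max pooling with stride 2, which is what the code above uses by default.

```python
def conv_out(n, kernel, stride=1, padding=0):
    """Output length of a conv/pool layer along one spatial dimension."""
    return (n + 2 * padding - kernel) // stride + 1

size = 256  # assumed input height/width
for out_c in (15, 33):
    after_conv = conv_out(size, kernel=3)            # 3x3 convolution, stride 1, no padding
    size = conv_out(after_conv, kernel=2, stride=2)  # 2x2 max pooling, stride 2
    print(f"conv -> {after_conv}x{after_conv}x{out_c}, pool -> {size}x{size}x{out_c}")

# conv -> 254x254x15, pool -> 127x127x15
# conv -> 125x125x33, pool -> 62x62x33
```

So the block output should be 62x62 spatially with 33 channels for a 256x256 RGB input.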

After training the neural network:

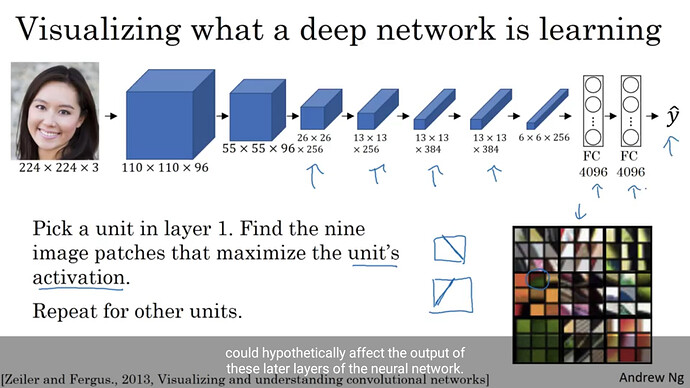

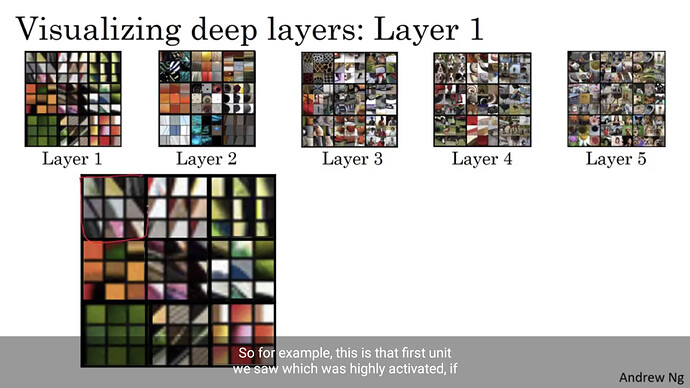

How do I detect and store the image patches that maximize a unit's activation in a specific layer, as shown in the images above?
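
One possible way to approach this, sketched under assumptions rather than as a definitive answer: capture the layer's output with a forward hook (`register_forward_hook` on the module), pick the unit with the largest activation in a channel, and map its position back to the input pixels it can see (its receptive field). The sketch below covers only the last two steps in plain Python, on a feature map given as a nested list (for a tensor, something like `output[n, c].tolist()`). `LAYERS`, `argmax_2d`, `input_span` and `patch_box` are hypothetical names, and the layer list assumes the two conv/pool stages of `MainBlock` with no padding.

```python
# (kernel, stride, padding) of each spatial layer between the input and the
# feature map, in forward order: conv3x3, maxpool2x2, conv3x3, maxpool2x2.
# BatchNorm and ReLU act per position and do not change the receptive field.
LAYERS = [(3, 1, 0), (2, 2, 0), (3, 1, 0), (2, 2, 0)]

def argmax_2d(fmap):
    """Return (row, col) of the largest value in a 2D list of activations."""
    positions = ((r, c) for r in range(len(fmap)) for c in range(len(fmap[0])))
    return max(positions, key=lambda rc: fmap[rc[0]][rc[1]])

def input_span(index, layers=LAYERS):
    """Map one output index to the inclusive (first, last) input index it sees."""
    first = last = index
    for kernel, stride, padding in reversed(layers):
        first = first * stride - padding
        last = last * stride - padding + kernel - 1
    # With padding > 0 the span can fall outside the image and would need clamping.
    return first, last

def patch_box(fmap, layers=LAYERS):
    """Inclusive (top, bottom, left, right) input box of the strongest unit."""
    row, col = argmax_2d(fmap)
    top, bottom = input_span(row, layers)
    left, right = input_span(col, layers)
    return top, bottom, left, right

# Toy 62x62 feature map with a single peak at row 10, column 20
fmap = [[0.0] * 62 for _ in range(62)]
fmap[10][20] = 1.0
box = patch_box(fmap)
print(box)  # (40, 49, 80, 89)
```

With these layers every output unit sees a 10x10 input patch, and neighbouring units are 4 pixels apart. For a CxHxW image tensor the patch could then be cropped with `image[:, top:bottom + 1, left:right + 1]` and saved, repeating over the dataset and keeping the top-scoring patches per channel.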