I’m trying to plot the bounding boxes of a few sample images from the VOC Dataset. The bounding boxes are in the form (x_mid, y_mid, width, height) and they are all fractional (fraction of the original image width and height).

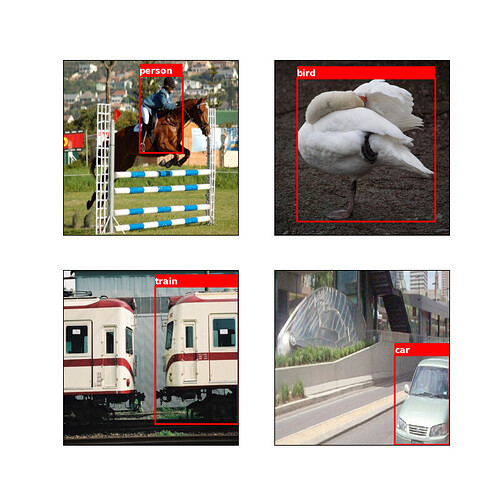

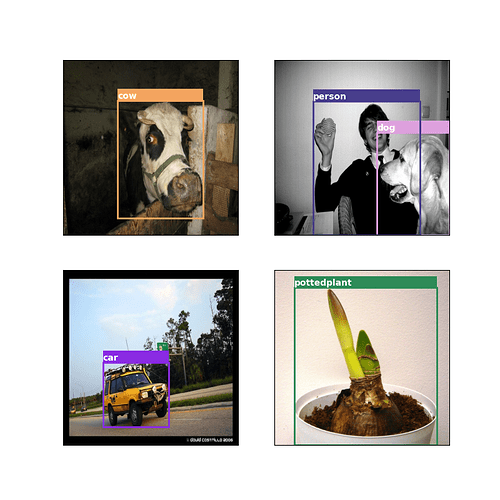

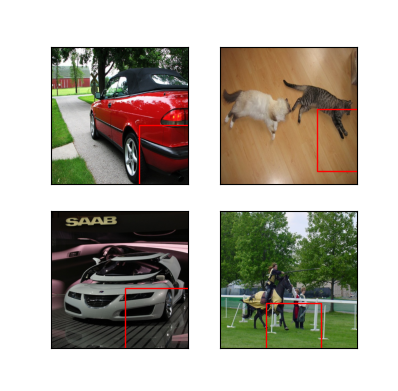

My plot currently looks like this and I don’t know why. It could be because I need to adjust the labels (since the images are being resized), but my understanding is that I don’t, since they are fractions and not absolute values. But I’m not sure.

A few notes:

- The csv file is a list of (img_file_name.jpg, labels_file_name.txt) entries

- The labels are of the form (class, x_mid, y_mid, width, height)

- There could be multiple labels / bounding boxes. My script just looks at the very first bounding box of each data point.

And here’s the code

import torch

import matplotlib.pyplot as plt

import matplotlib.patches as patches

from torch.utils.data import Dataset, DataLoader

from PIL import Image

from torchvision import transforms

import os

import pandas as pd

class VOCDataset(Dataset):

"""Some Information about VOCDataset"""

def __init__(self, annotations_path, img_dir, label_dir, transforms, reshape_height, reshape_width):

self.annotations = pd.read_csv(annotations_path)

self.img_dir = img_dir

self.label_dir = label_dir

self.transforms = transforms

self.new_height = reshape_height

self.new_width = reshape_width

super(VOCDataset, self).__init__()

def __getitem__(self, index):

img_file_name = self.annotations.iloc[index][0];

img_file_path = os.path.join(self.img_dir, img_file_name)

img = Image.open(img_file_path)

# original_height, original_width = img.size

# HEIGHT_RATIO, WIDTH_RATIO = self.new_height / original_height, self.new_width / original_width

# print("Old shape: ", original_height, original_width)

# print("New shape: ", self.new_height, self.new_width)

# print(HEIGHT_RATIO, WIDTH_RATIO)

tensor_img = self.transforms(img)

label_file_name = self.annotations.iloc[index][1];

label_file_path = os.path.join(self.label_dir, label_file_name)

label = list()

with open(label_file_path, "r") as f:

line = f.readlines()[0]

label = [int(float(i)) if int(float(i)) == float(i) else float(i) for i in line.split(" ")]

# label[1] *= WIDTH_RATIO

# label[2] *= HEIGHT_RATIO

# label[3] *= WIDTH_RATIO

# label[4] *= HEIGHT_RATIO

# print(labels[0])

return tensor_img, torch.tensor(label)

def __len__(self):

return len(self.annotations)

def visualize_imgs(imgs, label, rows, cols):

imgs = imgs.detach().numpy().transpose(0, 2, 3, 1)

label = label.detach().numpy()

fig = plt.figure(figsize=(5, 5))

for i in range(imgs.shape[0]):

ax = fig.add_subplot(rows, cols, i + 1, xticks=[], yticks=[])

x_mid, y_mid, width, height = [448 * i for i in label[i][1:]]

rect = patches.Rectangle((x_mid, y_mid), width, height, edgecolor='r', facecolor='none')

ax.imshow(imgs[i])

ax.add_patch(rect)

plt.show()

if __name__ == '__main__':

IMG_DIR = "G:/dl_projects/yolov3_aladdin/PASCAL_VOC/images"

LABEL_DIR = "G:/dl_projects/yolov3_aladdin/PASCAL_VOC/labels"

BATCH_SIZE = 4

NUM_WORKERS = 1

PIN_MEMORY = False

TRANSFORMS = transforms.Compose([

transforms.Resize((448, 448)),

transforms.ToTensor(),

])

train_dataset = VOCDataset(

"G:/dl_projects/yolov3_aladdin/PASCAL_VOC/8examples.csv",

img_dir=IMG_DIR,

label_dir=LABEL_DIR,

transforms=TRANSFORMS,

reshape_height=448,

reshape_width=448

)

train_loader = DataLoader(

dataset=train_dataset,

batch_size=BATCH_SIZE,

num_workers=NUM_WORKERS,

pin_memory=PIN_MEMORY,

shuffle=True,

drop_last=True,

)

it = iter(train_loader)

imgs, labels = next(it)

visualize_imgs(imgs, labels, rows=2, cols=2)