Hi, I’m trying to warp an image using a flow map (calculated with FlowNet2). Other similar posts in the forum suggest using torch.nn.functional.grid_sample. I’ve never used this function before, so I based my attempt on how PWC-Net uses it (PWC-Net/PyTorch/models/PWCNet.py at master · NVlabs/PWC-Net · GitHub).

I modified it slightly to fit my data layout (B, H, W, C), but it is otherwise the same implementation.

    def warp(x,flo):
        B,H,W,C = x.size()
        # mesh grid
        xx = torch.arange(0,W).view(1,-1).repeat(H,1)
        yy = torch.arange(0,H).view(-1,1).repeat(1,W)
        xx = xx.view(1,H,W,1).repeat(B,1,1,1)
        yy = yy.view(1,H,W,1).repeat(B,1,1,1)
        grid = torch.cat((xx,yy),3).float()
        if x.is_cuda:
            grid = grid.cuda()
        vgrid = Variable(grid) + flo
        ## scale grid to [-1,1]
        vgrid[:,:,:,0] = 2.0*vgrid[:,:,:,0].clone()/max(W-1,1)-1.0
        vgrid[:,:,:,1] = 2.0*vgrid[:,:,:,1].clone()/max(H-1,1)-1.0
        x = x.permute(0,3,1,2)
        output = torch.nn.functional.grid_sample(x,vgrid)
        mask = torch.autograd.Variable(torch.ones(x.size()))
        mask = torch.nn.functional.grid_sample(mask,vgrid)
        mask[mask<0.9999]=0
        mask[mask>0]=1
        return output*mask
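
For comparison, here is a minimal sketch of the same warp written against a newer PyTorch API, mainly as a way to sanity-check the function in isolation. It assumes PyTorch 1.3 or later, where grid_sample accepts align_corners; dividing by W-1 and H-1 as above corresponds to align_corners=True, while newer versions default to False. The name warp_sketch is hypothetical, and unlike the function above it expects the image already in (B, C, H, W) layout, with the flow in pixels as (B, H, W, 2).

```python
import torch
import torch.nn.functional as F


def warp_sketch(img, flow):
    """Backward-warp img (B, C, H, W) with a pixel-unit flow (B, H, W, 2).

    flow[..., 0] is the x displacement, flow[..., 1] the y displacement.
    Hypothetical helper for illustration, not the PWC-Net code itself.
    """
    B, C, H, W = img.shape
    # Pixel-coordinate mesh grid on the same device and dtype as the image.
    xs = torch.arange(W, device=img.device, dtype=img.dtype).view(1, W).expand(H, W)
    ys = torch.arange(H, device=img.device, dtype=img.dtype).view(H, 1).expand(H, W)
    grid = torch.stack((xs, ys), dim=-1).unsqueeze(0) + flow  # (B, H, W, 2)

    # Scale sampling positions to [-1, 1]; this matches align_corners=True.
    gx = 2.0 * grid[..., 0] / max(W - 1, 1) - 1.0
    gy = 2.0 * grid[..., 1] / max(H - 1, 1) - 1.0
    norm_grid = torch.stack((gx, gy), dim=-1)

    out = F.grid_sample(img, norm_grid, mode="bilinear",
                        padding_mode="zeros", align_corners=True)
    # Zero out pixels whose sampling position fell (partly) outside the image.
    mask = F.grid_sample(torch.ones_like(img), norm_grid, align_corners=True)
    mask = (mask >= 0.9999).to(img.dtype)
    return out * mask
```

A quick check under these assumptions: an all-zero flow should return the input unchanged, and a constant flow of +1 in x should shift the content one pixel to the left and zero the last column. If either check fails on real data, the problem is more likely in the flow layout or units than in grid_sample itself.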

I ran FlowNet2 to get the optical flow from the first image to the second image, and then warped the image using the function above. (Apparently new users can only post one image, so I can’t post the input picture, but it’s from the Berkeley Deep Drive dataset.)

    def show(img):
        npimg = img.numpy()
        plt.imshow(np.transpose(npimg,(1,2,0)),interpolation='nearest')
        plt.show()

    img0 = cv2.imread("val/picture0013.png").astype(np.float32)
    img0 = np.array([img0])
    flow = read_flow("work/inference/run.epoch-0-flow-field/000000.flo")
    flow = np.array([flow])
    img0 = torch.from_numpy(img0)
    flow = torch.from_numpy(flow)
    result2 = warp(img0,flow)
    show(result2[0])
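
One thing that may be worth ruling out separately from the warp is the display step. cv2.imread returns channels in BGR order, and plt.imshow clips floating-point RGB data to [0, 1], so a float32 image with values in [0, 255] will look mostly saturated regardless of what grid_sample did. Below is a minimal sketch of a conversion helper; the name to_displayable is hypothetical, and it assumes a (3, H, W) BGR array with values in [0, 255], as in the snippet above.

```python
import numpy as np


def to_displayable(chw_bgr):
    """Convert a (3, H, W) BGR float array in [0, 255] to (H, W, 3) RGB in [0, 1].

    Hypothetical helper for illustration only.
    """
    hwc = np.transpose(chw_bgr, (1, 2, 0))  # channels-first -> channels-last
    rgb = hwc[..., ::-1]                    # reverse channel order: BGR -> RGB
    return np.clip(rgb / 255.0, 0.0, 1.0)   # range accepted by imshow for floats
```

With this, the last line of the snippet could be tried as `plt.imshow(to_displayable(result2[0].numpy()))` followed by `plt.show()`, to see whether the warped result itself is reasonable.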

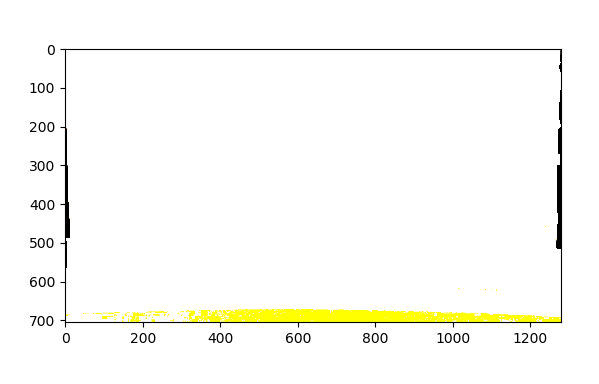

This is what I got

Am I misunderstanding what grid_sample does?