Hi @tom !!

thanks for adding the functions from the Python Optimal Transport library. They're definitely going to save some time when testing your implementation, which I think is fine.

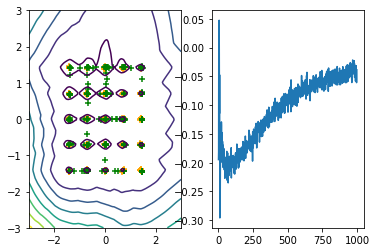

I was wondering if you'd be interested in applying your PyTorch Wasserstein loss layer code to reproducing the noisy-label example in Appendix E of Learning with a Wasserstein Loss (Frogner, Zhang, et al.).

It's a small toy problem, but it should be a nice stepping stone to test against before perhaps tackling real datasets, such as the more complicated Flickr tag-prediction task that Frogner, Zhang, et al. apply their Wasserstein loss layer to?

Also, there's a toy dataset generator in scikit-learn that might be an alternative to the noisy-label toy dataset that Frogner et al. use?

`make_multilabel_classification`
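A minimal sketch of how that generator could feed a Wasserstein loss, assuming a recent scikit-learn where `make_multilabel_classification` returns a dense 0/1 label-indicator matrix by default. It normalises each row of labels into a histogram, which is the kind of target distribution the loss compares predictions against. The sizes and `random_state` are arbitrary choices for illustration, not anything taken from Frogner et al.

```python
import numpy as np
from sklearn.datasets import make_multilabel_classification

# Toy multi-label data: X is (n_samples, n_features); Y is a dense 0/1
# indicator matrix of shape (n_samples, n_classes).
X, Y = make_multilabel_classification(
    n_samples=200,
    n_features=10,
    n_classes=5,
    n_labels=2,             # average number of labels per sample
    allow_unlabeled=False,  # every sample gets at least one label
    random_state=0,
)

# Normalise each row into a histogram over labels (each row sums to 1) so it
# can serve as the target distribution for a Wasserstein loss.
targets = Y / Y.sum(axis=1, keepdims=True)
print(X.shape, Y.shape)  # (200, 10) (200, 5)
```

Setting `allow_unlabeled=False` matters here: an all-zero label row would give a division by zero when normalising.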

I've been doing some reading, and to be honest, of the many papers I've read on applying optimal transport to machine learning, Frogner et al.'s Learning with a Wasserstein Loss still seems to be the only one I vaguely understand (in particular Appendices C and D) and think I could ultimately reproduce, and then perhaps apply.

It seems a good idea to start with one-hot labels, as that simplifies things enormously (see Appendix C of Frogner et al.). If I understand correctly, in that special case we don't even need the Sinkhorn-Knopp (SK) algorithm, because there's only one possible transport plan!!! I think that's quite cute, and it should be a lot of fun to see it working, given how simple it is.
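To check my understanding of the one-hot case, here's a small NumPy sketch (my own reading, not the paper's code; the function names are hypothetical). It assumes a square ground-cost matrix `M` where `M[k, j]` is the cost of moving mass from predicted bin `k` to label `j`. Because the target puts all of its mass on label `j`, every bit of mass in the prediction `h` has to go to `j`, so the only feasible plan is `T[:, j] = h` and the loss reduces to `h @ M[:, j]`.

```python
import numpy as np

def one_hot_wasserstein(h, j, M):
    """Exact OT cost between predicted histogram h and a one-hot target at label j.

    The target marginal is zero everywhere except j, so the unique feasible
    transport plan sends all of h to column j: T[:, j] = h.
    No Sinkhorn-Knopp iterations are needed.
    """
    h = np.asarray(h, dtype=float)
    M = np.asarray(M, dtype=float)
    T = np.zeros_like(M)
    T[:, j] = h                   # the only feasible plan
    cost = float(np.sum(T * M))   # same as h @ M[:, j]
    return cost, T

def one_hot_wasserstein_batch(H, y, M):
    """Batched version: H is (batch, K) predicted histograms, y is (batch,) integer labels.

    M[:, y] has shape (K, batch); transposing lines each column up with its row of H.
    """
    H = np.asarray(H, dtype=float)
    M = np.asarray(M, dtype=float)
    return np.sum(H * M[:, y].T, axis=1)

# Usage: 3 labels on a line with ground cost |k - j| (an illustrative metric only)
M = np.abs(np.subtract.outer(np.arange(3), np.arange(3))).astype(float)
cost, T = one_hot_wasserstein([0.2, 0.5, 0.3], 0, M)
print(round(cost, 6))  # 1.1
```

In a PyTorch loss layer, the batched version would presumably be the same indexing on tensors, `(H * M[:, y].T).sum(1)`, with autograd handling the gradient. It would be worth cross-checking a few cases against POT's `ot.emd2`.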


All the best,

Ajay

Or maybe we find something to do with the original WGAN code, too.

The simplest experiment in the Bengio paper is a character-level language model using Penn Treebank, so I guess the best place to start is …?