Hello, I am using a code to classify real time image, But I am confused about it. Could you please take a look on the code and help me to figure out any error:

import numpy as np

import torch

import torch.nn as nn

import torchvision

from torch.autograd import Variable

from torchvision import transforms

import PIL

import cv2

#This is the Label

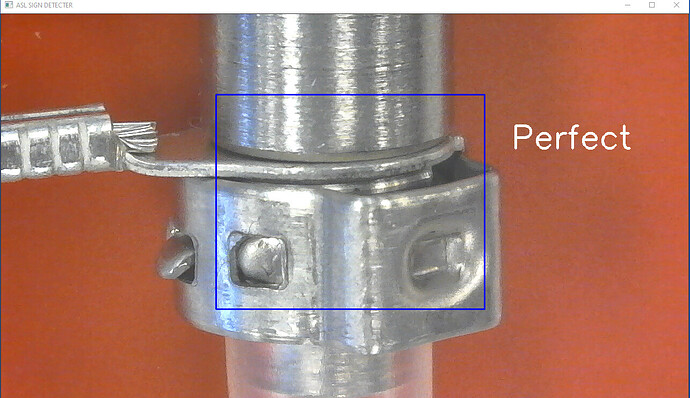

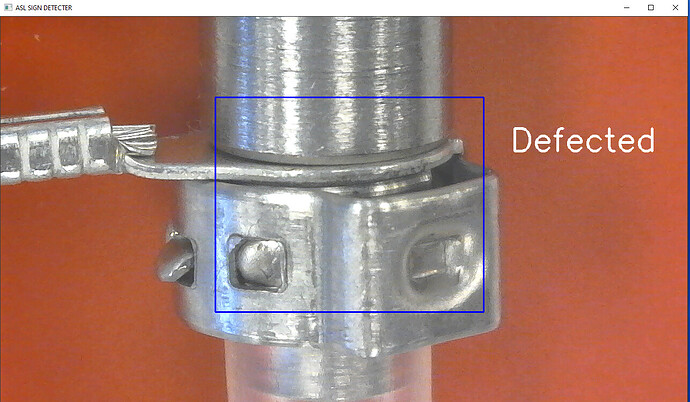

Labels = ['Perfect','Defected']

# Let's preprocess the inputted frame

data_transforms = torchvision.transforms.Compose([

torchvision.transforms.Resize(size=(352, 288)),

torchvision.transforms.RandomHorizontalFlip(),

torchvision.transforms.ToTensor(),

torchvision.transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])

])

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Load the model and set in eval

resnet18 = torchvision.models.resnet18(pretrained=True)

resnet18.fc = torch.nn.Sequential(nn.Linear(resnet18.fc.in_features,512),

nn.ReLU(),

nn.Dropout(),

nn.Linear(512, 2))

resnet18.load_state_dict(torch.load('defect_classifier_demo.pt', map_location=torch.device('cpu')))

resnet18.eval()

#Set the Webcam

def Webcam_720p():

cap.set(3,1280)

cap.set(4,720)

def preprocess(image):

image = PIL.Image.fromarray(image) #Webcam frames are numpy array format

#Therefore transform back to PIL image

print(image)

image = data_transforms(image)

image = image.float()

#image = Variable(image, requires_autograd=True)

image = image.cpu()

image = image.unsqueeze(0) #I don't know for sure but Resnet-50 model seems to only

#accpets 4-D Vector Tensor so we need to squeeze another

return image #dimension out of our 3-D vector Tensor

#Let's start the real-time classification process!

cap = cv2.VideoCapture(0) #Set the webcam

Webcam_720p()

fps = 0

show_score = 0

show_res = 'Nothing'

sequence = 0

while True:

ret, frame = cap.read() #Capture each frame

if fps == 4:

image = frame[100:450,150:570]

image_data = preprocess(image)

#print(image_data)

prediction = resnet18(image_data)[0]

torch.nn.functional.softmax(prediction, dim=0)

#result,score = argmax(prediction)

prediction = prediction.cpu().detach().numpy()

predicted_class_index = np.argmax(prediction)

predicted_class_name = Labels[predicted_class_index]

print(prediction)

print(predicted_class_index)

print(predicted_class_name)

#print(result)

#print(score)

#prediction = np.vectorize(prediction)

fps = 0

if prediction.any():

show_res = predicted_class_name

show_score= prediction

else:

show_res = "Nothing"

show_score = prediction

fps += 1

cv2.putText(frame, '%s' %(show_res),(950,250), cv2.FONT_HERSHEY_SIMPLEX, 2, (255,255,255), 3)

#cv2.putText(frame, '(score = %.5f)' %(show_score), (950,300), cv2.FONT_HERSHEY_SIMPLEX, 1,(255,255,255),2)

cv2.rectangle(frame,(400,150),(900,550), (250,0,0), 2)

cv2.imshow("ASL SIGN DETECTER", frame)

if cv2.waitKey(1) & 0xFF == ord('q'):

break

cap.release()

cv2.destroyWindow("ASL SIGN DETECTER") ```