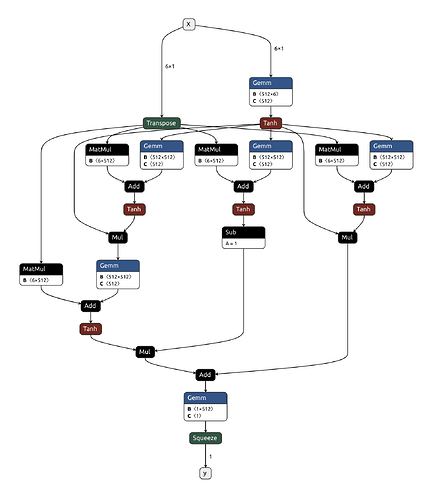

I built a custom model, shown in the figure above (open the figure in a new tab). It is composed of several classes.

The figure shows a single custom hidden layer (everything in between the input and output).

Finally, I created an object using

    DGM_model = DGMArch(len(input_tensor), len(output_tensor))

I wanted to apply Glorot normal initialisation (torch.nn.init.xavier_normal_()), but DGM_model.weight is not available.

I can do this by iterating over each of the model's parameters, as described in this article.
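For reference, a minimal sketch of that per-parameter approach. The small `nn.Sequential` model is only a hypothetical stand-in for `DGM_model`, since the real `DGMArch` is not shown, and the rule "2-D or higher means weight, 1-D means bias" is an assumption that may not hold for every custom parameter.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for DGM_model; the real DGMArch is not shown here.
model = nn.Sequential(nn.Linear(3, 8), nn.Tanh(), nn.Linear(8, 1))

for name, param in model.named_parameters():
    if param.dim() >= 2:
        # Weight matrices: Glorot (Xavier) normal initialisation.
        # xavier_normal_ raises an error on tensors with fewer than 2 dims.
        nn.init.xavier_normal_(param, gain=1.0)
    else:
        # Assumed to be a bias vector: set to zero.
        nn.init.zeros_(param)
```

This works on any module tree because `named_parameters()` recurses into submodules, but it runs outside the class rather than inside `__init__()`.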

How can I do this inside the __init__() method of the DGMArch class? Here is the weight initialisation code I use for sequential models:

    for i in range(len(layers) - 1):
        # draw weights from a Glorot (Xavier) normal distribution
        # recommended gain for tanh is 5/3 (nn.init.calculate_gain('tanh')); 1.0 is used here
        nn.init.xavier_normal_(self.linears[i].weight.data, gain=1.0)
        # set biases to zero
        nn.init.zeros_(self.linears[i].bias.data)
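One possible way to get the same effect inside `__init__()` is to call `self.apply(...)` once all submodules have been created. The sketch below is not the actual `DGMArch`: `TinyArch`, its layer names, and the `hidden` argument are hypothetical, and it assumes the custom layers are ultimately built from `nn.Linear`.

```python
import torch
import torch.nn as nn


class TinyArch(nn.Module):
    """Hypothetical stand-in for DGMArch, built from nested submodules."""

    def __init__(self, in_dim, out_dim, hidden=16):
        super().__init__()
        self.inp = nn.Linear(in_dim, hidden)
        self.hidden = nn.ModuleList(
            [nn.Linear(hidden, hidden) for _ in range(2)]
        )
        self.out = nn.Linear(hidden, out_dim)
        self.act = nn.Tanh()
        # Call last, so every submodule is already registered.
        # apply() visits this module and all of its children recursively.
        self.apply(self._init_weights)

    @staticmethod
    def _init_weights(module):
        # Only touch linear layers; other module types keep their defaults.
        if isinstance(module, nn.Linear):
            nn.init.xavier_normal_(module.weight, gain=1.0)
            if module.bias is not None:
                nn.init.zeros_(module.bias)

    def forward(self, x):
        x = self.act(self.inp(x))
        for layer in self.hidden:
            x = self.act(layer(x))
        return self.out(x)


model = TinyArch(3, 1)
out = model(torch.randn(4, 3))  # batch of 4 samples, 3 features each
```

If the custom layers store raw `nn.Parameter` tensors instead of `nn.Linear` modules, the `isinstance` check would need to be adapted, for example by looping over `self.named_parameters()` at the end of `__init__()` instead.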