How can we simply revise the parameters of a pretrained model in torchvision? For example, how can we change the stride parameter of each layer of models.resnet101? Can we set something like model.conv1.stride=1?

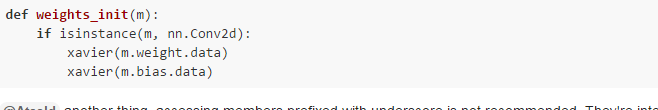

The only way I can see is to write a new model, revise the weights of the pretrained model, and copy them to the new model with net.apply(weights_init). Is there a simpler way?

Why would you need to “revise” (edit, change?) a convolutional stride of a pre-trained model?

I’m not sure I understand what you are after…

I’d recommend recreating the model; that is going to work for sure. You could probably monkey-patch the strides with a new tuple (check the type of this attribute in the loaded model). However, keep in mind that the model was trained with different parameters, so it might not work too well after that change.
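A minimal sketch of the monkey-patching idea, assuming a freshly built nn.Conv2d as a stand-in for a ResNet-style stem convolution (it is not loaded from torchvision, and the input size is arbitrary):

```python
import torch
import torch.nn as nn

# Stand-in for a ResNet-style stem convolution (7x7, stride 2, padding 3).
# Assumption: a freshly built layer is used instead of a loaded torchvision model.
conv = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
x = torch.randn(1, 3, 64, 64)

shape_before = tuple(conv(x).shape)  # spatial size is halved by stride 2
stride_type = type(conv.stride)      # the attribute is stored as a tuple

# Monkey-patch the stride in place; the weights are left untouched.
conv.stride = (1, 1)
shape_after = tuple(conv(x).shape)   # spatial size is now preserved

print(stride_type, shape_before, shape_after)
```

The same kind of assignment should carry over to a layer of a loaded model (for example model.conv1), with the caveat already mentioned: the weights were trained for the original stride.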

I want to change some parameters of a pretrained model, just as ‘Going Deeper on the Tiny Imagenet Challenge’ has done. The author changed the input image size, so the filter size had to change as well. I have to do something similar, which is why I asked. O(∩_∩)O

Thanks to @apaszke for the detailed explanation, and to @Atcold for the reminder.

Does this approach also cover the parameters in batch normalization?

I don’t understand your question. Can you elaborate please?

I see your answer

It’s clear and useful. Can this method also initialize the parameters in batch normalization?

Yes, you can initialize batchnorm too. I think you should just try these things…
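For what it’s worth, a small sketch of initializing batchnorm parameters, assuming a hypothetical toy network and arbitrary constants (1 for the scale, 0 for the shift):

```python
import torch.nn as nn
import torch.nn.init as init

# Hypothetical toy network, used only to have some BatchNorm layers to initialize.
net = nn.Sequential(
    nn.Conv2d(3, 8, 3, padding=1),
    nn.BatchNorm2d(8),
    nn.ReLU(),
    nn.Conv2d(8, 16, 3, padding=1),
    nn.BatchNorm2d(16),
)

for m in net.modules():
    if isinstance(m, nn.BatchNorm2d):
        init.constant_(m.weight, 1.0)  # per-channel scale
        init.constant_(m.bias, 0.0)    # per-channel shift
```

The isinstance check is the same pattern used for conv layers; only the module type changes.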

Hi @Hamid

I found that weight initialization can be done as below in the latest version of PyTorch:

    import numpy as np
    import torch.nn as nn
    import torch.nn.init as init

    # inside the model's __init__
    self.conv1 = nn.Conv2d(3, 20, 5, stride=1, bias=True)
    init.xavier_uniform(self.conv1.weight, gain=np.sqrt(2.0))
    init.constant(self.conv1.bias, 0.1)

As you said in this post, we can extract the parameters of a specific layer with conv1Params = list(net.conv1.parameters()), and then access the kernel values in conv1Params[0] and the bias values in conv1Params[1]. Similarly, we can extract the parameters of each conv layer as conv2Params, conv3Params, … Now I want to initialise conv1Params in [-2, 2], conv2Params in [-5, 5], and so on. Could you please help me? I’m new to PyTorch and learning it step by step.

See the comments above; they answer your question, including code snippets.

Thanks for the quick reply. Last night I tried this:

    for m in net.modules():
        if isinstance(m, nn.Conv2d):
            m.weight.data.fill_(1)
            m.bias.data.fill_(0)

And this is working fine for me. Thanks a lot.
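For the per-layer ranges asked about earlier ([-2, 2] for conv1, [-5, 5] for conv2), here is a rough sketch. The Net class and the ranges dictionary are hypothetical, and it assumes a PyTorch version that provides the underscore-suffixed init functions:

```python
import torch.nn as nn
import torch.nn.init as init

class Net(nn.Module):
    # Hypothetical two-layer network, only here to provide conv1 and conv2.
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 20, 5)
        self.conv2 = nn.Conv2d(20, 50, 5)

net = Net()

# Hypothetical mapping: layer name -> half-width of its uniform range.
ranges = {"conv1": 2.0, "conv2": 5.0}

for name, bound in ranges.items():
    layer = getattr(net, name)
    init.uniform_(layer.weight, -bound, bound)  # weights drawn from [-bound, bound)
    if layer.bias is not None:
        init.constant_(layer.bias, 0.0)         # biases set to zero
```

Looking layers up by name keeps the ranges in one place, so adding conv3 is a one-line change to the dictionary.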

What is the default mechanism for weight initialization? Is it uniform [0, 1)?

please see: torch/nn/modules/conv.py#L39-46

    def reset_parameters(self):
        n = self.in_channels
        for k in self.kernel_size:
            n *= k
        stdv = 1. / math.sqrt(n)
        self.weight.data.uniform_(-stdv, stdv)
        if self.bias is not None:
            self.bias.data.uniform_(-stdv, stdv)

Where is the xavier function coming from? Should we define it ourselves?

I think in the conversation above it was used as a placeholder to specify the initialization strategy. It’s defined here:

http://pytorch.org/docs/nn.html#torch.nn.init.xavier_normal

Thank you.

But my pytorch gives an error: “AttributeError: ‘module’ object has no attribute ‘init’”

Actually, I have updated PyTorch to the latest version, and I have checked that /usr/local/lib/python2.7/dist-packages/torch/nn contains init.py

Does anyone know how I can use init?

Best,

Dong Nie

I see. Importing torch.nn is not enough; I have to import torch.nn.init explicitly.

Thanks.

Yes.

Using the methods from torch.nn.init is simpler and more convenient.
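As a sketch of that pattern, assuming a recent PyTorch where the in-place init functions carry a trailing underscore (xavier_uniform_, constant_), a hypothetical toy network, and the weights_init name borrowed from the first post:

```python
import torch.nn as nn
import torch.nn.init as init  # imported explicitly, as discussed above

def weights_init(m):
    # net.apply calls this once for every submodule, and for net itself.
    if isinstance(m, nn.Conv2d):
        init.xavier_uniform_(m.weight, gain=init.calculate_gain("relu"))
        if m.bias is not None:
            init.constant_(m.bias, 0.1)

# Hypothetical toy network.
net = nn.Sequential(nn.Conv2d(3, 20, 5), nn.ReLU(), nn.Conv2d(20, 50, 5))
net.apply(weights_init)
```

Modules that are not nn.Conv2d pass through untouched, so the same function can be applied to a whole model.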

When I do this for a recurrent module, why do I hit the Python interpreter’s maximum recursion depth?