Hello everyone, I’m kinda new to ML and CV and I’ve been training a semantic segmentation model for my master thesis. My model stagnates after 20ish epochs which it does not with CrossEntropyLoss. Also when testing out my model it only ever predicts the first 3 out of 9 classes. 2 of those classes are predominate in my dataset while one is actually relatively seldom.

This is my code for weighted dice loss:

class ClassAverageDiceLoss(nn.Module):

def __init__(self, num_classes, softmax_dim=None):

super().__init__()

self.num_classes=torch.tensor(num_classes)

self.softmax_dim=softmax_dim

def forward(self, logits, targets, reduction='mean', smooth=1e-5):

probabilities=logits

if self.softmax_dim is not None:

probabilities = nn.Softmax(dim=self.softmax_dim)(logits)

#end if

targets_one_hot=torch.nn.functional.one_hot(targets, num_classes=self.num_classes)

# Convert from NHWC to NCHW

targets_one_hot = targets_one_hot.permute(0, 3, 1, 2)

dice_coeff_individual_array=torch.zeros(self.num_classes, dtype=torch.float32).to(DEVICE)

#calcuate class specific dice loss/score

for n_class in range(self.num_classes):

targets_one_hot_class_specific=targets_one_hot[:,n_class,:,:]

probabilities_class_specific=probabilities[:,n_class,:,:]

intersection_class_specific = (targets_one_hot_class_specific * probabilities_class_specific).sum()

mod_a = intersection_class_specific.sum()

# .numel() and -log() in combination, due to the loss function always trying to minimize something

mod_b = targets_one_hot_class_specific.numel()

dice_coeff_individual = 2. * intersection_class_specific / (mod_a + mod_b + smooth)

dice_coeff_individual_array[n_class] = dice_coeff_individual

dice_coeff_average=dice_coeff_individual_array.sum()/(self.num_classes)

dice_loss_average=-dice_coeff_average.log()

return dice_loss_average

I thought that dice loss was good at handling imbalanced datasets which is why I was trying that out. If somebody could check my code for the weighted dice loss, I would be really thankful!

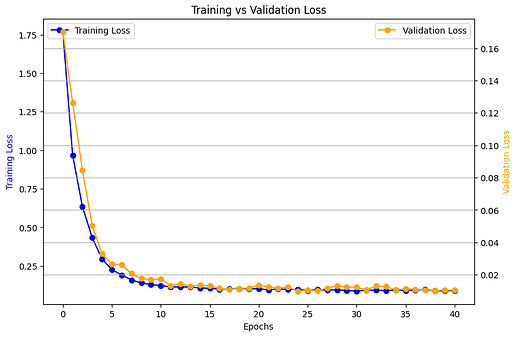

Here a graph of my training vs validation loss