I have an unbalanced dataset. The positive class in the training set has 658 samples and the negative class has 7301 samples, giving an 8.3% / 91.7% data distribution. After reading some threads here, I used WeightedRandomSampler to oversample the minority class while undersampling the majority class. My dummy implementation looks like this:

import numpy as np
import torch
from torch.utils.data import DataLoader
from torchvision import transforms

pathology = 'Atelectasis'
train_df, valid_df = get_df_brax(root, pathology)  # get_df_brax and root are defined elsewhere (not shown)
batch_size = 256

np.random.seed(0)
torch.manual_seed(0)

class_counts_train = train_df[pathology].value_counts()  # samples per class label
sample_weights_train = [1 / class_counts_train[i] for i in train_df[pathology].values]  # inverse class frequency, one weight per sample

transf_neg = transforms.Compose([transforms.Resize((256, 256)), transforms.ToTensor()])
transf_pos_t = transforms.Compose([
    transforms.Resize((256, 256)),
    transforms.RandomResizedCrop(size=256, scale=(0.7, 1.0)),
    transforms.RandomAffine(degrees=0, translate=(0.1, 0.1)),
    transforms.ColorJitter(brightness=0.3, contrast=0.3, saturation=0.2),
    transforms.ToTensor(),
])

sampler_train = torch.utils.data.WeightedRandomSampler(weights=sample_weights_train, num_samples=len(train_df), replacement=True)

trainset = Dataset_BRAX_(train_df, root, transf_neg, transf_pos_t, pathology)  # Dataset_BRAX_ is defined elsewhere (not shown)
validset = Dataset_BRAX_(valid_df, root, transf_neg, transf_pos_t, pathology)

trainloader = DataLoader(trainset, batch_size, sampler=sampler_train)
validloader = DataLoader(validset, batch_size)
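For reference, this is why I expect roughly 50/50: with inverse-frequency weights, each class ends up with the same total weight, so a single weighted draw should land on a positive sample about half the time. A minimal sketch of that arithmetic, using only the class counts quoted above (the `labels` array is a synthetic stand-in for `train_df[pathology].values`, not my real data):

```python
import numpy as np

# Synthetic stand-in for train_df[pathology].values: 658 positives, 7301 negatives.
labels = np.concatenate([np.ones(658, dtype=int), np.zeros(7301, dtype=int)])

# Inverse-frequency weight per sample, same idea as sample_weights_train.
class_counts = np.bincount(labels)  # index 0 -> negatives, index 1 -> positives
sample_weights = 1.0 / class_counts[labels]

# Probability that one weighted draw (with replacement) picks a positive sample.
expected_pos_fraction = sample_weights[labels == 1].sum() / sample_weights.sum()
print("Expected positive share: {0:2.1f} %".format(expected_pos_fraction * 100))
```

Each class contributes a total weight of 1 (658 × 1/658 and 7301 × 1/7301), which is where the 50% expectation comes from.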

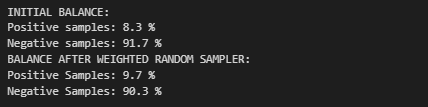

After applying this and running for 100 epochs, the distribution barely changes. I wasn’t expecting an exact 50% positive / 50% negative split, since the sampler is random, but the new distribution is only 9.7% positive / 90.3% negative. Here is the code I used to check the distributions:

count_pos = 0
count_neg = 0

class_counts_train = train_df[pathology].value_counts()
print('INITIAL BALANCE:')
print("Positive samples: {0:2.1f} %".format(class_counts_train[1] / class_counts_train.sum() * 100))
print("Negative samples: {0:2.1f} %".format(class_counts_train[0] / class_counts_train.sum() * 100))

epochs = 100
for epoch in range(epochs):
    for labels in trainloader:
        for i in labels:
            if i == 1:
                count_pos += 1
            else:
                count_neg += 1

print('BALANCE AFTER WEIGHTED RANDOM SAMPLER:')
print('Positive Samples: {0:2.1f} %'.format((count_pos / (count_pos + count_neg)) * 100))
print('Negative Samples: {0:2.1f} %'.format((count_neg / (count_pos + count_neg)) * 100))

This is what this code prints out:

Am I doing anything wrong? I also tried using artificial weights, and even using 0 as the weight for the negative class, but the data distribution is never near 50% positive / 50% negative.
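One sanity check that might help narrow this down is to take the Dataset and DataLoader out of the picture and look only at the indices the sampler produces. The sketch below is an assumption-laden stand-in, not my actual pipeline: `labels` is synthetic (658 ones followed by 7301 zeros) and replaces `train_df[pathology].values`.

```python
import torch
from torch.utils.data import WeightedRandomSampler

torch.manual_seed(0)

# Synthetic labels with the same class counts as the training set (assumption).
labels = torch.cat([torch.ones(658, dtype=torch.long),
                    torch.zeros(7301, dtype=torch.long)])

# Inverse-frequency weight for every sample.
class_counts = torch.bincount(labels)
sample_weights = 1.0 / class_counts[labels].double()

sampler = WeightedRandomSampler(weights=sample_weights,
                                num_samples=len(labels),
                                replacement=True)

# Iterating the sampler yields dataset indices; map them back to labels.
sampled_indices = torch.tensor(list(sampler))
sampled_pos_fraction = labels[sampled_indices].float().mean().item()
print("Sampled positive share: {0:2.1f} %".format(sampled_pos_fraction * 100))
```

If this reports something close to 50% while the loop over trainloader still reports about 10%, then the sampler itself is probably fine, and the difference may come from how the labels reach my counting loop (for example, from what Dataset_BRAX_ returns for a given index), though I have not confirmed that.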

Thanks for your help.