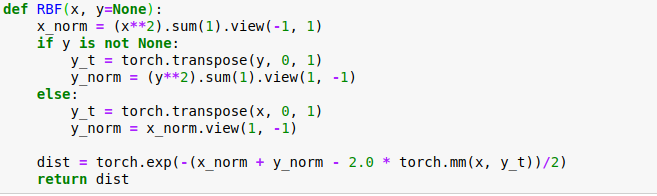

Could you explain a bit more which weight you are trying to update?

x receives gradients, so the error might come from another code:

x = torch.randn(10, 10, requires_grad=True)

out = RBF(x)

out.mean().backward()

print(x.grad)

> tensor([[ 5.9646e-04, 6.2076e-04, 9.4101e-06, 9.5977e-04, -4.9045e-05,

-4.4832e-04, 5.2261e-04, -5.3574e-05, -4.8484e-04, 7.1946e-04],

[ 7.1239e-04, 2.4813e-03, 2.5732e-04, -2.6018e-03, -1.8188e-03,

5.4736e-04, 1.8246e-03, 2.7148e-03, 5.1855e-03, 2.2654e-03],

[ 1.8962e-06, 2.6450e-06, -1.2890e-06, 3.7439e-06, 1.8524e-06,

7.6694e-07, 3.2894e-06, 4.0270e-06, 1.0571e-06, -5.2005e-06],

[-4.4542e-04, -2.6123e-04, -1.0615e-04, 2.2132e-05, 7.0672e-04,

-1.4883e-04, -5.1615e-05, 1.3554e-03, -9.1667e-04, 4.5033e-04],

[-1.9192e-04, -2.4983e-03, -1.2079e-03, 9.6558e-04, 3.0117e-03,

9.4145e-05, -2.0331e-03, -4.3258e-03, -3.5529e-03, -3.5782e-03],

[-8.3819e-08, -5.6494e-06, -3.6694e-07, 2.4759e-06, -9.0338e-07,

4.9472e-06, 1.8552e-06, 2.4289e-06, 2.5406e-06, 3.3993e-07],

[-4.6566e-10, -2.2352e-08, -2.0675e-07, 4.9360e-08, 1.0803e-07,

2.7195e-07, -3.1292e-07, 1.8999e-07, 6.0536e-08, 7.8697e-08],

[-6.8732e-05, -3.3617e-04, 3.3371e-04, 6.3260e-04, 8.8942e-06,

-1.0545e-04, 2.2346e-04, -2.1920e-04, 7.0792e-05, 2.6153e-04],

[ 2.2489e-04, -8.6654e-04, 7.7004e-04, -3.2373e-06, -1.6274e-03,

-3.3448e-04, -9.2118e-05, 1.8562e-04, -2.4125e-04, 5.2206e-04],

[-8.2948e-04, 8.6329e-04, -5.4566e-05, 1.8646e-05, -2.3319e-04,

3.8959e-04, -3.9872e-04, 3.3613e-04, -6.4229e-05, -6.3574e-04]])