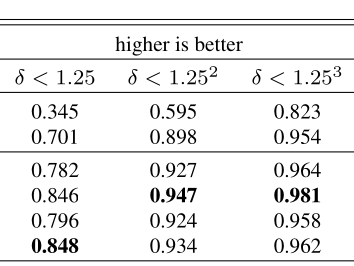

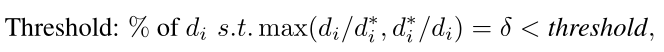

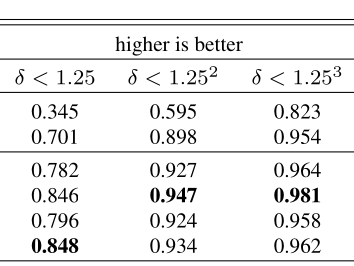

I read a paper where δ < 1.25, δ < 1.25², and δ < 1.25³ are reported.

Since I don't really know what they stand for, I have a few questions:

- What exactly do these values stand for?

- Why do we want to compute and compare these values?

- How are these values computed?

Thanks a lot for your help!

I believe this metric is used in depth estimation to report the fraction of predicted pixels whose depth lies within a small, medium, or large factor of their ground-truth value.
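If it helps, this is the definition I've seen most often in depth estimation papers. The notation is mine, so treat it as a sketch rather than the exact formula from the paper you read: for each valid pixel $i$ with ground-truth depth $d_i$ and predicted depth $\hat{d}_i$, take the larger of the two ratios, then report the fraction of the $N$ valid pixels that fall below each threshold $t$.

$$
\delta_i = \max\left(\frac{\hat{d}_i}{d_i}, \frac{d_i}{\hat{d}_i}\right), \qquad
\mathrm{acc}(t) = \frac{1}{N}\sum_{i=1}^{N} \mathbf{1}\left[\delta_i < t\right], \qquad
t \in \{1.25,\ 1.25^2,\ 1.25^3\}
$$

Since $\delta_i \ge 1$ by construction, a higher accuracy is better. $\delta < 1.25$ means the prediction is within a factor of 1.25 of the ground truth in either direction (over- or underestimation are penalized symmetrically), and the looser thresholds are $1.25^2 = 1.5625$ and $1.25^3 \approx 1.953$.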

However, I don’t know how these values were defined.


I additionally found this one:

But I still can't figure out what it means.

Any idea?