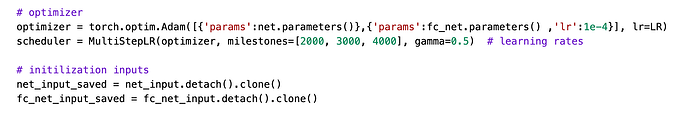

Hi, I am new to PyTorch, and this is a piece of code. Basically, net and fc_net are the nets we want to train. The inputs net_input and fc_net_input are generated by another network; let's call it net_pretrain. I don't quite understand why we need detach().clone() here, since the optimizer does not include the parameters of net_pretrain.

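detach() cuts the backprop graph that leads back to the previous network. This is needed when net_input.grad_fn is not None, and it is not related to the optimizer: without it, backward() would still run through net_pretrain's graph even though the optimizer never updates those parameters.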

clone() copies the data into new storage, avoiding potential problems with in-place operations.

It is possible that these were added just to be safe.
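To make the difference concrete, here is a minimal sketch. The one-layer net_pretrain and the random input x are stand-ins I made up, not the code from the screenshot. It checks whether each tensor still carries a graph and whether it shares memory with net_input.

```python
import torch

# Hypothetical stand-in for net_pretrain: a single linear layer.
net_pretrain = torch.nn.Linear(4, 3)
x = torch.randn(2, 4)

net_input = net_pretrain(x)          # still attached to net_pretrain's graph
detached = net_input.detach()        # no graph, but same underlying memory
saved = net_input.detach().clone()   # no graph, and its own memory

# Graph check: only the original output has a grad_fn.
has_graph = net_input.grad_fn is not None
detached_has_graph = detached.grad_fn is not None
saved_has_graph = saved.grad_fn is not None

# Memory check: detach() alone aliases the data, clone() copies it.
detached_shares_memory = detached.data_ptr() == net_input.data_ptr()
saved_shares_memory = saved.data_ptr() == net_input.data_ptr()

print(has_graph, detached_has_graph, saved_has_graph)
print(detached_shares_memory, saved_shares_memory)
```

So detach() on its own already stops backprop; the clone() only matters if something later writes to either tensor in place.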

I think that in this case, clone() is used to make sure that the two tensors have different memory, so that an in-place modification of one won't change the other.

detach() is used to avoid tracking gradient information between the two, so that uses of net_input_saved won't affect the gradients of net_input.
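A small sketch of both effects, assuming a toy setup: w and v are hypothetical parameters standing in for the upstream and downstream networks, and the arithmetic is only for illustration.

```python
import torch

w = torch.ones(3, requires_grad=True)   # hypothetical upstream parameter
net_input = w * 2.0                     # non-leaf tensor with a grad_fn

net_input_saved = net_input.detach().clone()

# In-place edit of the saved copy leaves net_input untouched.
net_input_saved.add_(10.0)

# A loss built from the saved copy has no path back to w.
v = torch.ones(3, requires_grad=True)   # hypothetical downstream parameter
loss = (net_input_saved * v).sum()
loss.backward()

print(net_input)   # values are still 2.0
print(w.grad)      # None: nothing flowed back through the detached copy
print(v.grad)      # gradients only for the downstream parameter
```

With only detach() and no clone(), the add_ call would also have changed the values seen through net_input, since both tensors would share the same memory.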
