yiftach:

> …nsor instead of saving to a file (you will not need to alter it). Try to see if this still happens for a small tensor, and if so, print the input too, so we can have a code snippet we can run to reproduce and study the…
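A small, self-contained reproduction of the kind requested in the quote could look like the sketch below. The thread never shows the fake-quantization code itself (only its `grad_fn`, `FakeQuantizeBackward`), so this assumes PyTorch's built-in `torch.fake_quantize_per_tensor_affine` as a stand-in. The shape, `scale`, `zero_point`, and value range are hypothetical, chosen only for illustration.

```python
import torch

# Fixed seed and a tiny, hypothetical shape so the run is deterministic and
# the whole tensor fits in a forum post.
torch.manual_seed(0)
x = torch.rand(1, 1, 2, 4) * 2.0

# Stand-in for the model's fake-quantization step (assumption: the original
# FakeQuantize function is not shown in the thread). Hypothetical parameters:
# unsigned 8-bit range [0, 255] with a quantization step (scale) of 0.01.
scale, zero_point = 0.01, 0
y = torch.fake_quantize_per_tensor_affine(x, scale, zero_point, 0, 255)

# Print both input and output with more decimals than the default 4, so the
# exact values can be pasted into the report and rerun by others.
torch.set_printoptions(precision=8)
print("x =", x)
print("y =", y)
torch.set_printoptions(profile="default")  # restore the default formatting
```

With the input printed at full precision, anyone can rebuild `x` with `torch.tensor(...)` and rerun the quantization step without access to the original model.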

@yiftach

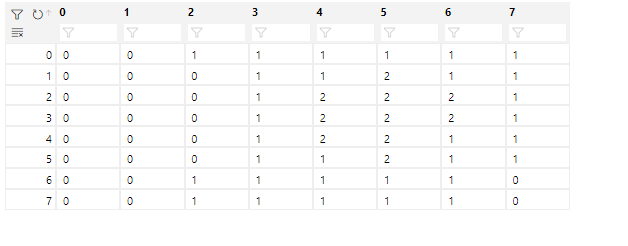

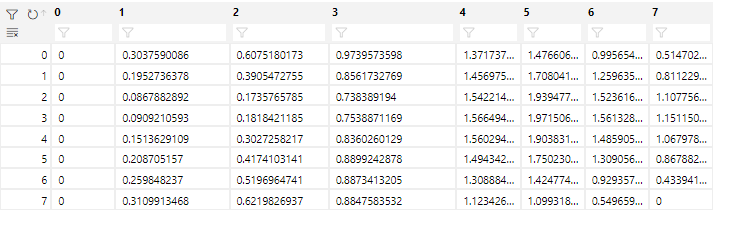

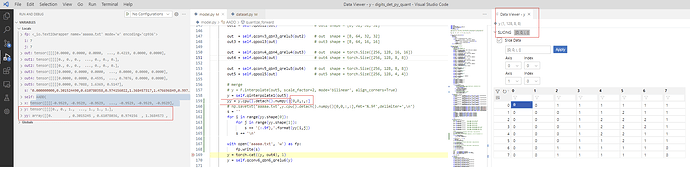

Please check the data I printed.

In the VS Code debugger, `y` appears to be rounded off; however, the printed `y` is correct.
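One way to tell whether `y` is actually rounded, or only displayed that way, is to measure the stored values directly instead of relying on any formatted view. The helper below (`rounding_gap`, a hypothetical name) reports how far the elements are from their 4-decimal rounding, and `torch.set_printoptions(precision=...)` changes how many decimals `print()` shows (PyTorch's default is 4). The example values are made up.

```python
import torch

def rounding_gap(t: torch.Tensor, decimals: int = 4) -> float:
    """Largest |t - round(t, decimals)| over all elements of t.

    Computed from the stored values (in float64), so the result does not
    depend on how print(), a debugger, or an IDE variable view formats them.
    A result near float32 rounding noise (~1e-8) means the data really is
    rounded; a larger value means only the display was rounded.
    """
    t64 = t.detach().to(torch.float64)
    factor = 10.0 ** decimals
    rounded = torch.round(t64 * factor) / factor
    return (t64 - rounded).abs().max().item()

# Hypothetical values: the first has digits beyond the 4 shown by default.
y = torch.tensor([0.30154321, 1.4767])

torch.set_printoptions(precision=4)
print(y)                 # only 4 decimals are shown
torch.set_printoptions(precision=8)
print(y)                 # the extra digits become visible
torch.set_printoptions(profile="default")

print(rounding_gap(y))   # well above float32 noise: the data is not rounded
```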

Output of `print(x)`:

```
tensor([[[[0.0000, 0.3015, 0.6108, ..., 1.4767, 0.9974, 0.5180],
          [0.0000, 0.1933, 0.3943, ..., 1.7086, 1.2602, 0.8118],
          [0.0000, 0.0850, 0.1701, ..., 1.9406, 1.5231, 1.1056],
          ...,
          [0.0000, 0.2087, 0.4175, ..., 1.7473, 1.3066, 0.8659],
          [0.0000, 0.2629, 0.5180, ..., 1.4226, 0.9278, 0.4330],
          [0.0000, 0.3093, 0.6185, ..., 1.0979, 0.5489, 0.0000]],

         [[0.0000, 0.3015, 0.6108, ..., 1.4767, 0.9974, 0.5180],
          [0.0000, 0.1933, 0.3943, ..., 1.7086, 1.2602, 0.8118],
          [0.0000, 0.0850, 0.1701, ..., 1.9406, 1.5231, 1.1056],
          ...,
          [0.0000, 0.2087, 0.4175, ..., 1.7473, 1.3066, 0.8659],
          [0.0000, 0.2629, 0.5180, ..., 1.4226, 0.9278, 0.4330],
          [0.0000, 0.3093, 0.6185, ..., 1.0979, 0.5489, 0.0000]],

         [[0.0000, 0.3015, 0.6108, ..., 1.4767, 0.9974, 0.5180],
          [0.0000, 0.1933, 0.3943, ..., 1.7086, 1.2602, 0.8118],
          [0.0000, 0.0850, 0.1701, ..., 1.9406, 1.5231, 1.1056],
          ...,
          [0.0000, 0.2087, 0.4175, ..., 1.7473, 1.3066, 0.8659],
          [0.0000, 0.2629, 0.5180, ..., 1.4226, 0.9278, 0.4330],
          [0.0000, 0.3093, 0.6185, ..., 1.0979, 0.5489, 0.0000]],

         ...,

         [[0.0000, 0.3015, 0.6108, ..., 1.4767, 0.9974, 0.5180],
          [0.0000, 0.1933, 0.3943, ..., 1.7086, 1.2602, 0.8118],
          [0.0000, 0.0850, 0.1701, ..., 1.9406, 1.5231, 1.1056],
          ...,
          [0.0000, 0.2087, 0.4175, ..., 1.7473, 1.3066, 0.8659],
          [0.0000, 0.2629, 0.5180, ..., 1.4226, 0.9278, 0.4330],
          [0.0000, 0.3093, 0.6185, ..., 1.0979, 0.5489, 0.0000]],

         [[0.0000, 0.3015, 0.6108, ..., 1.4767, 0.9974, 0.5180],
          [0.0000, 0.1933, 0.3943, ..., 1.7086, 1.2602, 0.8118],
          [0.0000, 0.0850, 0.1701, ..., 1.9406, 1.5231, 1.1056],
          ...,
          [0.0000, 0.2087, 0.4175, ..., 1.7473, 1.3066, 0.8659],
          [0.0000, 0.2629, 0.5180, ..., 1.4226, 0.9278, 0.4330],
          [0.0000, 0.3093, 0.6185, ..., 1.0979, 0.5489, 0.0000]],

         [[0.0000, 0.3015, 0.6108, ..., 1.4767, 0.9974, 0.5180],
          [0.0000, 0.1933, 0.3943, ..., 1.7086, 1.2602, 0.8118],
          [0.0000, 0.0850, 0.1701, ..., 1.9406, 1.5231, 1.1056],
          ...,
          [0.0000, 0.2087, 0.4175, ..., 1.7473, 1.3066, 0.8659],
          [0.0000, 0.2629, 0.5180, ..., 1.4226, 0.9278, 0.4330],
          [0.0000, 0.3093, 0.6185, ..., 1.0979, 0.5489, 0.0000]]]],
       device='cuda:0', grad_fn=<FakeQuantizeBackward>)
```

Output of `print(y)`:

```
tensor([[[[0.0000, 0.3015, 0.6108, ..., 1.4767, 0.9974, 0.5180],
          [0.0000, 0.1933, 0.3943, ..., 1.7086, 1.2602, 0.8118],
          [0.0000, 0.0850, 0.1701, ..., 1.9406, 1.5231, 1.1056],
          ...,
          [0.0000, 0.2087, 0.4175, ..., 1.7473, 1.3066, 0.8659],
          [0.0000, 0.2629, 0.5180, ..., 1.4226, 0.9278, 0.4330],
          [0.0000, 0.3093, 0.6185, ..., 1.0979, 0.5489, 0.0000]],

         [[0.0000, 0.3015, 0.6108, ..., 1.4767, 0.9974, 0.5180],
          [0.0000, 0.1933, 0.3943, ..., 1.7086, 1.2602, 0.8118],
          [0.0000, 0.0850, 0.1701, ..., 1.9406, 1.5231, 1.1056],
          ...,
          [0.0000, 0.2087, 0.4175, ..., 1.7473, 1.3066, 0.8659],
          [0.0000, 0.2629, 0.5180, ..., 1.4226, 0.9278, 0.4330],
          [0.0000, 0.3093, 0.6185, ..., 1.0979, 0.5489, 0.0000]],

         [[0.0000, 0.3015, 0.6108, ..., 1.4767, 0.9974, 0.5180],
          [0.0000, 0.1933, 0.3943, ..., 1.7086, 1.2602, 0.8118],
          [0.0000, 0.0850, 0.1701, ..., 1.9406, 1.5231, 1.1056],
          ...,
          [0.0000, 0.2087, 0.4175, ..., 1.7473, 1.3066, 0.8659],
          [0.0000, 0.2629, 0.5180, ..., 1.4226, 0.9278, 0.4330],
          [0.0000, 0.3093, 0.6185, ..., 1.0979, 0.5489, 0.0000]],

         ...,

         [[0.0000, 0.3015, 0.6108, ..., 1.4767, 0.9974, 0.5180],
          [0.0000, 0.1933, 0.3943, ..., 1.7086, 1.2602, 0.8118],
          [0.0000, 0.0850, 0.1701, ..., 1.9406, 1.5231, 1.1056],
          ...,
          [0.0000, 0.2087, 0.4175, ..., 1.7473, 1.3066, 0.8659],
          [0.0000, 0.2629, 0.5180, ..., 1.4226, 0.9278, 0.4330],
          [0.0000, 0.3093, 0.6185, ..., 1.0979, 0.5489, 0.0000]],

         [[0.0000, 0.3015, 0.6108, ..., 1.4767, 0.9974, 0.5180],
          [0.0000, 0.1933, 0.3943, ..., 1.7086, 1.2602, 0.8118],
          [0.0000, 0.0850, 0.1701, ..., 1.9406, 1.5231, 1.1056],
          ...,
          [0.0000, 0.2087, 0.4175, ..., 1.7473, 1.3066, 0.8659],
          [0.0000, 0.2629, 0.5180, ..., 1.4226, 0.9278, 0.4330],
          [0.0000, 0.3093, 0.6185, ..., 1.0979, 0.5489, 0.0000]],

         [[0.0000, 0.3015, 0.6108, ..., 1.4767, 0.9974, 0.5180],
          [0.0000, 0.1933, 0.3943, ..., 1.7086, 1.2602, 0.8118],
          [0.0000, 0.0850, 0.1701, ..., 1.9406, 1.5231, 1.1056],
          ...,
          [0.0000, 0.2087, 0.4175, ..., 1.7473, 1.3066, 0.8659],
          [0.0000, 0.2629, 0.5180, ..., 1.4226, 0.9278, 0.4330],
          [0.0000, 0.3093, 0.6185, ..., 1.0979, 0.5489, 0.0000]]]],
       device='cuda:0', grad_fn=<FakeQuantizeBackward>)
```
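The two printouts match, but matching summaries cannot prove that `x` and `y` hold the same data. For tensors with more than 1000 elements, `print()` shows only the first and last three entries along each dimension (the `...` markers), and by default only 4 decimals. A direct comparison settles the question. The sketch below uses a hypothetical helper, `compare_tensors`, and synthetic example tensors.

```python
import torch

def compare_tensors(x: torch.Tensor, y: torch.Tensor) -> tuple[bool, float]:
    """Return (bitwise_equal, max_abs_difference) for two same-shape tensors.

    Both tensors are detached and moved to the CPU first, so CUDA tensors
    with autograd history (like the ones above) can be compared directly.
    """
    x_cpu = x.detach().cpu()
    y_cpu = y.detach().cpu()
    if x_cpu.shape != y_cpu.shape:
        raise ValueError(f"shape mismatch: {tuple(x_cpu.shape)} vs {tuple(y_cpu.shape)}")
    equal = torch.equal(x_cpu, y_cpu)
    max_diff = (x_cpu.double() - y_cpu.double()).abs().max().item()
    return equal, max_diff

# Synthetic data: y differs from x in one element that the summary hides.
torch.manual_seed(0)
x = torch.rand(1, 3, 20, 20)   # 1200 elements, so print() abbreviates it
y = x.clone()
y[0, 1, 10, 10] += 1e-3        # a row and column inside the elided "..." region

print(str(x) == str(y))        # the abbreviated printouts are identical
print(compare_tensors(x, y))   # but the data is not
```

Running the same check on the real `x` and `y` (it works on `cuda:0` tensors as-is) would show whether any element differs, and by how much.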