Hi, dear former colleagues!

What is the ~1.4 GB CPU memory jump when calling `torch.distributed.barrier()`?

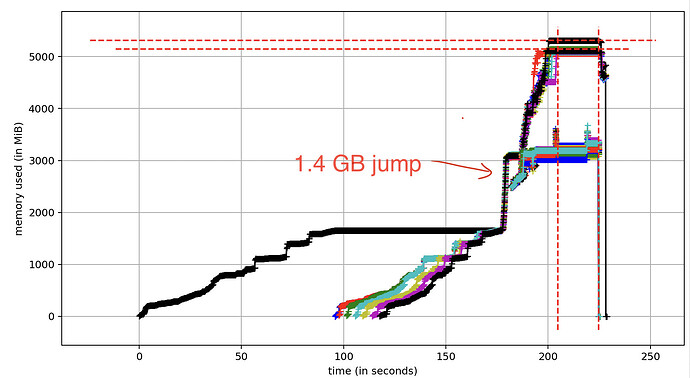

Tested with 1 and 8 GPUs, on PyTorch 1.9.0 and 1.12.1:

```python
import torch
from memory_profiler import profile

@profile
def test():
    print("before")
    torch.distributed.barrier()
    print("after")

# Single-process NCCL group (rank 0 of world size 1) for the 1-GPU test
torch.distributed.init_process_group(backend='nccl', rank=0, world_size=1, init_method='tcp://localhost:23456')
test()
```

Output:

```
Line #    Mem usage    Increment  Occurrences   Line Contents
=============================================================
     4    227.2 MiB    227.2 MiB           1   @profile
     5                                         def test():
     6    227.2 MiB      0.0 MiB           1       print("before")
     7   1641.7 MiB   1414.5 MiB           1       torch.distributed.barrier()
     8   1641.7 MiB      0.0 MiB           1       print("after")
```
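One way to narrow this down, as a sketch under assumptions: with the NCCL backend, the barrier may be the first operation in the test script that touches the GPU, so the increment could include lazy CUDA context creation and NCCL communicator setup rather than the barrier's own work. This is a hypothesis, not something the profile above confirms. The snippet measures RSS (Linux-only assumption: it reads `VmRSS` from `/proc/self/status`) around a small GPU allocation first, then around two consecutive barriers. The helpers `rss_mib` and `measure` are hypothetical names, not part of memory_profiler or PyTorch.

```python
def rss_mib():
    """Resident set size of this process in MiB.

    Linux-only assumption: parses the VmRSS line of /proc/self/status (value in kB).
    """
    with open("/proc/self/status") as status:
        for line in status:
            if line.startswith("VmRSS:"):
                return int(line.split()[1]) / 1024.0
    raise RuntimeError("VmRSS not found in /proc/self/status")


def measure(label, fn, *args, **kwargs):
    """Call fn(*args, **kwargs), print the RSS change, and return (result, delta_mib)."""
    before = rss_mib()
    result = fn(*args, **kwargs)
    delta = rss_mib() - before
    print(f"{label}: {delta:+.1f} MiB")
    return result, delta


def main():
    # Hypothetical check: create the CUDA context explicitly before the first barrier.
    try:
        import torch
        import torch.distributed as dist
    except ImportError:
        print("PyTorch not installed; skipping")
        return
    if not torch.cuda.is_available():
        print("CUDA not available; skipping")
        return

    dist.init_process_group(backend="nccl", rank=0, world_size=1,
                            init_method="tcp://localhost:23456")
    # First CUDA op in the process: triggers CUDA context creation on device 0.
    measure("first GPU tensor", lambda: torch.zeros(1, device="cuda"))
    measure("first barrier", dist.barrier)
    measure("second barrier", dist.barrier)
    dist.destroy_process_group()


if __name__ == "__main__":
    main()
```

If most of the increment moves to the "first GPU tensor" step, that would point at CUDA initialization rather than the barrier itself; if a large jump remains on the first barrier but not the second, one-time NCCL setup is the more likely candidate.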

A different training run on 8 GPUs: