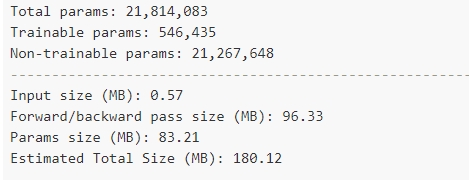

I use PyTorch's summary library to summarize the size of my deep learning model.

I have a model with a small number of parameters but a large forward pass size. The forward pass size seems to be related to the speed of the model.

My guess is that forward pass size refers to the amount of computation. Is it the same as FLOPs?
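To make the question concrete, here is a minimal sketch of the three quantities I am trying to tell apart, worked out by hand for a single convolution layer. It assumes that the forward pass size is the memory taken by the layer's output (activations) in float32, which is my reading of the summary rather than something I have confirmed. The helper `conv2d_stats` and the layer dimensions are hypothetical and chosen only for illustration.

```python
def conv2d_stats(in_ch, out_ch, kernel, height, width):
    """Count parameters, output elements, and multiply-accumulates (MACs)
    for one conv layer. Assumes stride 1, 'same' padding, and a bias term."""
    params = out_ch * (in_ch * kernel * kernel + 1)   # weights + biases
    output_elements = out_ch * height * width         # one activation map per output channel
    macs = output_elements * in_ch * kernel * kernel  # one MAC per weight per output element
    return params, output_elements, macs


BYTES_PER_FLOAT32 = 4

# Hypothetical layer: 3 -> 16 channels, 3x3 kernel, 512x512 input.
params, out_elems, macs = conv2d_stats(3, 16, 3, 512, 512)

param_mb = params * BYTES_PER_FLOAT32 / 1024 ** 2
activation_mb = out_elems * BYTES_PER_FLOAT32 / 1024 ** 2

print(f"parameters:        {params} ({param_mb:.4f} MB)")
print(f"output activation: {out_elems} elements ({activation_mb:.1f} MB)")
print(f"MACs:              {macs}")
```

In this sketch the layer has only a few hundred parameters, yet its output takes megabytes because it scales with the input resolution. The MAC count is a third, separate number, measured in operations rather than bytes.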

I also wonder whether the "size of the model" that people commonly talk about is the sum of both, or just the number of parameters. I am looking forward to your answer.