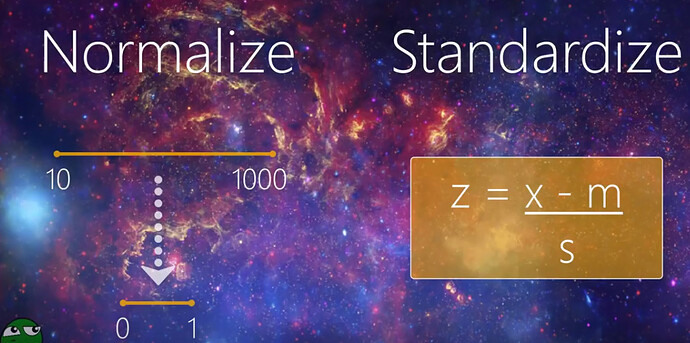

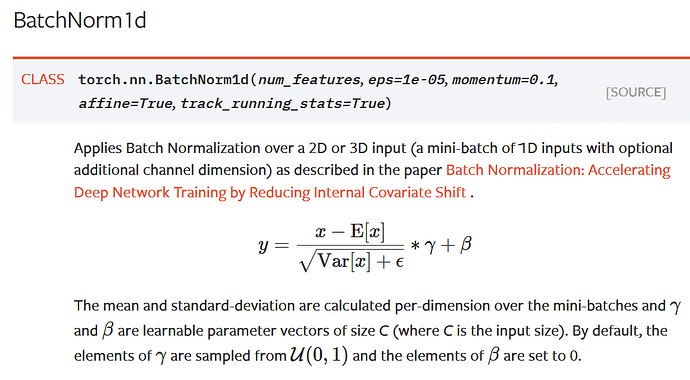

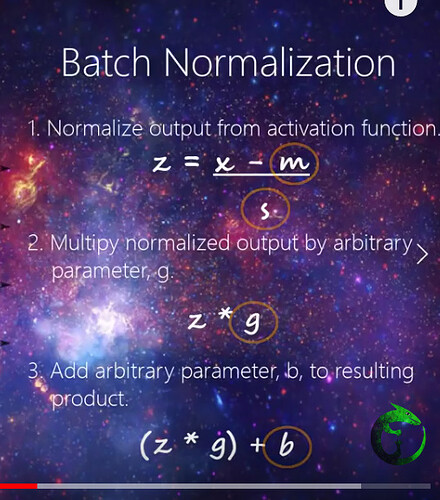

I am assuming with affine=True, the batch norm should be capable of learning all 4 parameters exactly. The mean, the standard deviation, the gamma, and the beta.

With affine=False this is not the case, in the example it states “without learnable parameters” so we have some predefined (hardcoded) batch normalization.

I am not sure, but the only parameter from the example m = nn.BatchNorm1d(100) is 100. Is this the batch size?

This is modified example from before…

import torch

import torch.nn as nn

# With Learnable Parameters

m = nn.BatchNorm1d(10)

# Without Learnable Parameters

m = nn.BatchNorm1d(10, affine=False)

input = 1000* torch.randn(3, 10)

print(input)

output = m(input)

print(output)

And it provides the output:

tensor([[ 553.9385, 358.0948, -311.0905, 946.6582, 320.4365, 1158.4661,

610.9046, -300.3665, -730.4023, -432.1760],

[ -428.9000, -373.9978, 304.2072, -230.9816, 246.2242, 757.4435,

-489.5371, -2545.6099, 2042.6073, -763.0421],

[ -350.7178, 1166.3325, -511.8971, -1168.5955, 1719.1627, -95.6929,

1275.7457, 2684.2368, -1186.4659, -1935.6162]])

tensor([[ 1.4106, -0.0403, -0.3979, 1.2684, -0.6516, 1.0550, 0.1995, -0.1150,

-0.5413, 0.9479],

[-0.7929, -1.2041, 1.3742, -0.0925, -0.7612, 0.2882, -1.3122, -1.1632,

1.4021, 0.4350],

[-0.6177, 1.2444, -0.9763, -1.1759, 1.4128, -1.3431, 1.1128, 1.2782,

-0.8609, -1.3829]])

Looks like it will try to squeeze the outputs to (-1,1) range with as few as possible elements out of that range.

Any comments?