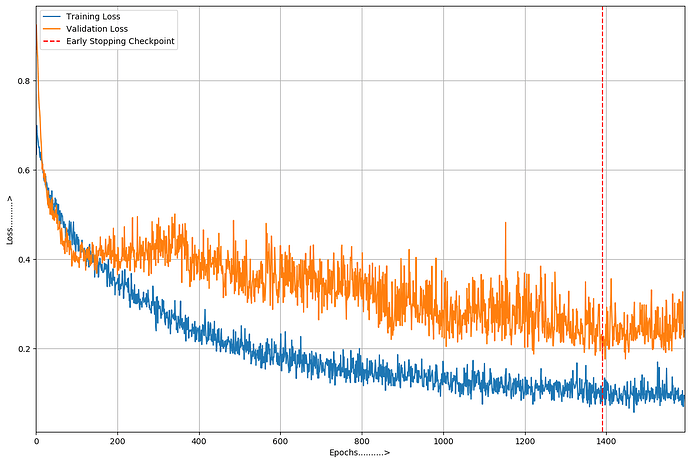

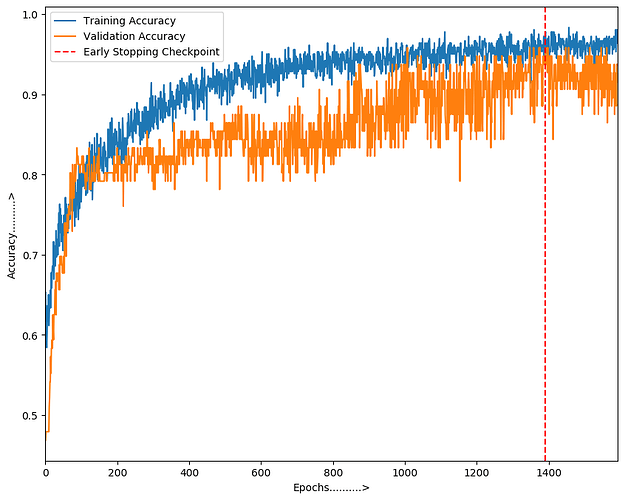

Here the validation loss is sometimes lower than the training loss.

From the graph, it seems your model is training without any issues. Both train and val accuracy increase steadily until around epoch 1400, so you can use the weights saved near epoch 1400.

In addition to what @mailcorahul explained, the validation loss might be lower than the training loss if, for example:

- dropout is used, so the model has less effective capacity during training (units are randomly dropped) than during validation (dropout is disabled)

- your data split is skewed and the validation set contains more “simple” examples
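To illustrate the dropout point, here is a minimal sketch, assuming a toy stand-in model and random data (not the AlexNet from this thread). It evaluates the loss on the same batch with dropout active (`model.train()`) and with dropout disabled (`model.eval()`). The names `loss_in_mode`, `x`, and `y` are made up for the illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Toy stand-in for a classifier head with dropout (hypothetical, not the poster's model).
model = nn.Sequential(
    nn.Linear(20, 64),
    nn.ReLU(),
    nn.Dropout(p=0.5),
    nn.Linear(64, 3),
)
criterion = nn.CrossEntropyLoss()

# Random batch, used only to show the effect of the two modes.
x = torch.randn(256, 20)
y = torch.randint(0, 3, (256,))


def loss_in_mode(train_mode: bool) -> float:
    """Loss on the same batch with dropout active (train) or disabled (eval)."""
    model.train(train_mode)
    with torch.no_grad():
        return criterion(model(x), y).item()


# In train mode a new random dropout mask is drawn on every forward pass.
train_loss_a = loss_in_mode(True)
train_loss_b = loss_in_mode(True)

# In eval mode dropout is a no-op, so the loss is deterministic.
eval_loss_a = loss_in_mode(False)
eval_loss_b = loss_in_mode(False)

print("train mode:", train_loss_a, train_loss_b)
print("eval mode: ", eval_loss_a, eval_loss_b)
```

For a fair comparison of the two curves, one option is to recompute the training loss with the model in eval mode, so both numbers come from the same (full capacity) network.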

Thank you for your reply.

@ptrblck @mailcorahul I am using the AlexNet model. How can I solve this problem (validation loss is lower than training loss)?

What happens if you disable the Dropout layers? Also, have you checked the data distribution of your train and validation sets?
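One rough way to check the data distribution is to compare the per-class fractions of each split. The sketch below assumes each split folder contains one subfolder per class (an `ImageFolder`-style layout, which is an assumption about your setup); `class_distribution`, `IMAGE_EXTS`, and the paths in the usage comment are hypothetical names.

```python
import os
from collections import Counter

# File extensions counted as images (assumption; adjust to your dataset).
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def class_distribution(split_dir):
    """Return {class_name: fraction of images} for a folder with one subfolder per class."""
    counts = Counter()
    for class_name in sorted(os.listdir(split_dir)):
        class_dir = os.path.join(split_dir, class_name)
        if not os.path.isdir(class_dir):
            continue
        counts[class_name] = sum(
            1
            for f in os.listdir(class_dir)
            if os.path.splitext(f)[1].lower() in IMAGE_EXTS
        )
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()} if total else {}


# Usage (hypothetical paths):
# print(class_distribution("data/train"))
# print(class_distribution("data/val"))
```

If the fractions differ a lot between train and val, the random split may have put a disproportionate share of the easier classes into the validation set.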

@ptrblck I created 3 folders for train, val, and test, then randomly selected 60% of the images for train, 20% for val, and 20% for test.

How do I disable the dropout layers?

This is my code:

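```python
import torch.nn as nn
from torchvision import models

feature_extract = True
sm = nn.Softmax(dim=1)


def set_parameter_requires_grad(model, feature_extracting):
    # Note: requires_grad = True leaves every layer trainable, so this is
    # effectively a no-op. Freezing the pretrained layers for feature
    # extraction would need requires_grad = False instead.
    if feature_extracting:
        for param in model.parameters():
            param.requires_grad = True


def initialize_model(model_name, num_classes, feature_extract, use_pretrained=True):
    if model_name == "alexnet":
        """ Alexnet
        """
        model = models.alexnet(pretrained=use_pretrained)
        set_parameter_requires_grad(model, feature_extract)
        # Replace the last classifier layer to match the number of classes.
        num_ftrs = model.classifier[6].in_features
        model.classifier[6] = nn.Linear(num_ftrs, num_classes)
        input_size = 224
    return model


# model_name and num_classes are defined elsewhere in the script.
model = initialize_model(model_name, num_classes, feature_extract, use_pretrained=True)
```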
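As for disabling the dropout layers, one possible approach (a sketch, not the only way) is to set the drop probability of every `nn.Dropout` module to zero. The helper name `disable_dropout` is made up here, and the small `classifier` below is only a stand-in with the same layer pattern as the torchvision AlexNet classifier (Dropout, Linear, ReLU, Dropout, Linear, ReLU, Linear) so the example runs without downloading weights.

```python
import torch
import torch.nn as nn


def disable_dropout(model: nn.Module) -> int:
    """Set p=0 on every nn.Dropout in the model; return how many were changed."""
    changed = 0
    for module in model.modules():
        if isinstance(module, nn.Dropout):
            module.p = 0.0
            changed += 1
    return changed


# Stand-in mirroring the layer order of AlexNet's classifier, with tiny
# (hypothetical) layer sizes so it runs instantly.
classifier = nn.Sequential(
    nn.Dropout(p=0.5),
    nn.Linear(32, 16),
    nn.ReLU(inplace=True),
    nn.Dropout(p=0.5),
    nn.Linear(16, 16),
    nn.ReLU(inplace=True),
    nn.Linear(16, 4),
)

n_disabled = disable_dropout(classifier)
print("dropout layers disabled:", n_disabled)

# With p=0 the forward pass is deterministic even in train mode.
classifier.train()
x = torch.randn(8, 32)
with torch.no_grad():
    out_a = classifier(x)
    out_b = classifier(x)
print("outputs identical in train mode:", torch.equal(out_a, out_b))
```

With the code above, this would presumably be called as `disable_dropout(model)` right after `initialize_model(...)`. If the gap between training and validation loss shrinks afterwards, dropout was likely the main cause.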