PyTorch Version: 1.11

I want to resume my learning rate schedule after my training has been terminated.

Here’s a toy example:

    import torch
    import torch.nn as nn
    from torch.optim import SGD
    from torch.optim.lr_scheduler import CosineAnnealingLR

    n = 100
    net = nn.Conv2d(3, 3, 1)
    opt = SGD(net.parameters(), 0.1)
    s = CosineAnnealingLR(opt, n, last_epoch=-1)
    ckpt = "s.pt"

    s_lr = []
    for epoch in range(s.last_epoch, n):
        lr = s.get_last_lr()
        s_lr.extend(lr)
        s.step()
        if epoch == 10:
            # Checkpoint only the scheduler state at this point.
            torch.save(s.state_dict(), ckpt)

Now I try to resume it in two ways. Note that my program has terminated, so all objects must be instantiated again.

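    # Method 1
    import torch
    import torch.nn as nn
    from torch.optim import SGD
    from torch.optim.lr_scheduler import CosineAnnealingLR

    n = 100
    net = nn.Conv2d(3, 3, 1)
    opt = SGD(net.parameters(), 0.1)
    ckpt = "s.pt"

    state_dict = torch.load(ckpt)
    # Pass the saved epoch to the constructor; the error is raised on this line.
    s = CosineAnnealingLR(opt, n, last_epoch=state_dict["last_epoch"])
    s.load_state_dict(state_dict)

    s_lr = []
    for _ in range(s.last_epoch, n):
        lr = s.get_last_lr()
        s_lr.extend(lr)
        s.step()

    print(s_lr[:3])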

This throws the error "param 'initial_lr' is not specified in param_groups[0] when resuming an optimizer".

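    # Method 2
    import torch
    import torch.nn as nn
    from torch.optim import SGD
    from torch.optim.lr_scheduler import CosineAnnealingLR

    n = 100
    net = nn.Conv2d(3, 3, 1)
    opt = SGD(net.parameters(), 0.1)
    ckpt = "s.pt"

    state_dict = torch.load(ckpt)
    # Build the scheduler as if starting fresh, then overwrite its state.
    s = CosineAnnealingLR(opt, n, last_epoch=-1)
    s.load_state_dict(state_dict)

    s_lr = []
    for _ in range(s.last_epoch, n):
        lr = s.get_last_lr()
        s_lr.extend(lr)
        s.step()

    print(s_lr[:3])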

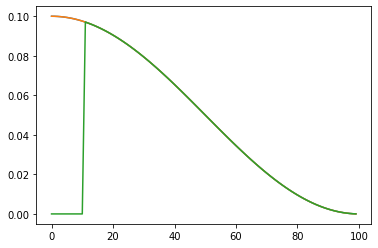

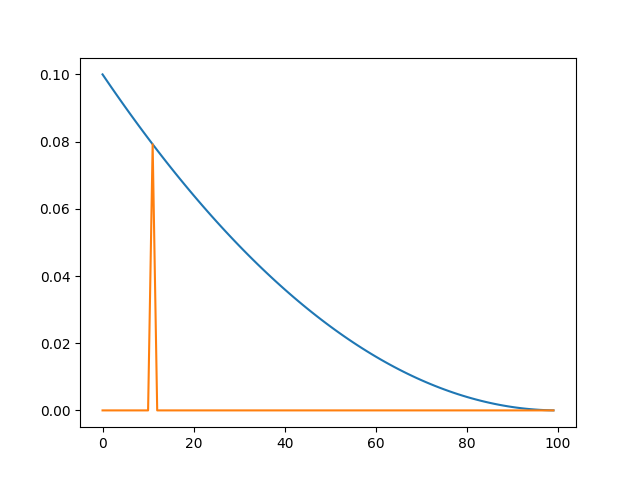

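It prints the wrong learning rates, [0.09704403844771128, 0.09942787402278414, 0.09880847171860509]: the second value (0.099) is larger than the first (0.097), although the cosine schedule should only decrease over these epochs.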
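As a sanity check, the values expected after resuming can be sketched from the closed-form cosine annealing formula given in the PyTorch docs, assuming `eta_min = 0`, a base learning rate of 0.1 and `T_max = 100` as in the toy example. The helper name `cosine_lr` is introduced here for illustration only and is not a PyTorch function.

```python
import math


def cosine_lr(epoch, base_lr=0.1, t_max=100, eta_min=0.0):
    """Closed-form cosine annealing value at a given epoch (illustrative helper)."""
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * epoch / t_max))


# The checkpoint was written after the 11th scheduler step, so a correct
# resume should continue from epochs 11, 12, 13, ...
expected = [cosine_lr(e) for e in (11, 12, 13)]
print(expected)
```

Under these assumptions the first value agrees with the first learning rate printed by Method 2, while the following values keep decreasing instead of jumping back up.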

What is the proper way of resuming a scheduler?

NOTE: I have checked "What is the proper way of using last_epoch in a lr_scheduler?", but it did not solve my problem.
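One approach that may address both failures is to checkpoint the optimizer together with the scheduler, since the optimizer's `param_groups` hold the current `lr` and the `initial_lr` that the scheduler reads back. The following is a minimal sketch under that assumption, not a definitive recipe: the `build` helper, the temporary checkpoint path and the `"opt"`/`"sched"` keys are hypothetical names chosen for illustration, and the `break` merely simulates the program being terminated.

```python
import os
import tempfile

import torch
import torch.nn as nn
from torch.optim import SGD
from torch.optim.lr_scheduler import CosineAnnealingLR

n = 100
# Hypothetical checkpoint location inside a temporary directory.
ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.pt")


def build():
    """Recreate the model and optimizer as a fresh process would (illustrative helper)."""
    net = nn.Conv2d(3, 3, 1)
    opt = SGD(net.parameters(), 0.1)
    return net, opt


# First run: save optimizer AND scheduler state, then stop.
net, opt = build()
s = CosineAnnealingLR(opt, n)
for epoch in range(n):
    opt.step()  # no gradients here, so this only keeps the call order valid
    s.step()
    if epoch == 10:
        torch.save({"opt": opt.state_dict(), "sched": s.state_dict()}, ckpt)
        break  # simulate termination

# Resumed run: everything is instantiated again.
net, opt = build()
state = torch.load(ckpt)
opt.load_state_dict(state["opt"])    # restores lr and initial_lr in param_groups
s = CosineAnnealingLR(opt, n)        # default last_epoch=-1
s.load_state_dict(state["sched"])    # restores last_epoch and the last lr

resumed_lr = []
for _ in range(s.last_epoch, n):
    resumed_lr.extend(s.get_last_lr())
    opt.step()
    s.step()

print(resumed_lr[:3])
```

The design choice in this sketch is to restore the optimizer before constructing the scheduler, so that the scheduler's next step starts from the checkpointed learning rate rather than from the fresh optimizer's 0.1.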