Hi everyone, I’m a beginner with PyTorch. So far, all I know about the computation graph is that "the computation graph is freed after `loss.backward()`", which I picked up from some blogs, so it is still a vague concept for me.

After I run this simple program:

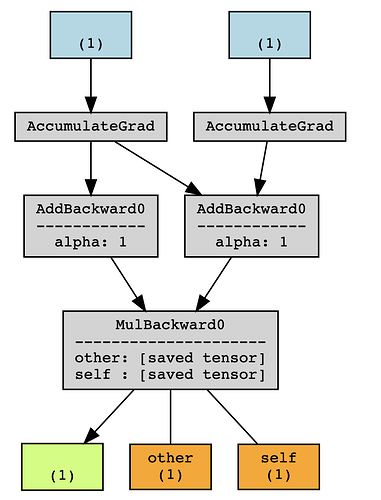

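```python
import torch

# Two leaf tensors that require gradients
w = torch.tensor([1.], requires_grad=True)
x = torch.tensor([2.], requires_grad=True)

a = torch.add(w, x)   # a = w + x
b = torch.add(w, 1)   # b = w + 1
y = torch.mul(a, b)   # y = a * b

y.backward()
```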

I can still access `a` and `b`, so I think the nodes are not freed. But then what exactly is freed, and how?
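A minimal sketch of an experiment that narrows the question down, assuming a reasonably recent PyTorch release (the exact error message differs between versions). The tensors and the `grad_fn` node objects survive `backward()`, but a second `backward()` through the same graph raises a `RuntimeError`. That suggests what gets released is the intermediate data the nodes saved for computing gradients (here, the values of `a` and `b` that the multiplication node needs), not the Python tensors. Passing `retain_graph=True` keeps that saved data. The names `second_backward_failed`, `first_grads` and `y2` are just illustrative variables.

```python
import torch

w = torch.tensor([1.], requires_grad=True)
x = torch.tensor([2.], requires_grad=True)
a = torch.add(w, x)   # a = w + x = 3
b = torch.add(w, 1)   # b = w + 1 = 2
y = torch.mul(a, b)   # y = a * b = 6

y.backward()          # default: retain_graph=False

# The forward tensors are ordinary Python objects, so they are still usable.
print(a, b)
# The graph node objects also still exist and are reachable from y.
print(y.grad_fn)

# A second backward through the same graph fails: the multiplication node
# needs the saved values of a and b, and those saved buffers were released.
try:
    y.backward()
    second_backward_failed = False
except RuntimeError as err:
    second_backward_failed = True
    print("second backward failed:", err)

# Gradients from the first pass: dy/dw = a + b = 5, dy/dx = b = 2.
first_grads = (w.grad.item(), x.grad.item())
print(first_grads)

# With retain_graph=True the saved buffers are kept, so backward can run
# twice on a freshly built graph; gradients accumulate into .grad.
w.grad = None
x.grad = None
y2 = torch.mul(torch.add(w, x), torch.add(w, 1))
y2.backward(retain_graph=True)
y2.backward()
print(w.grad, x.grad)  # twice the single-pass gradients
```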

It would be even better if your explanation could start from how the graph is actually stored in memory.
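As a starting point for the storage question, here is a minimal sketch that prints the backward graph for the program above by following `y.grad_fn` and each node's `next_functions` tuple of `(node, input_index)` pairs. Leaf tensors appear as `AccumulateGrad` nodes whose `variable` attribute refers to the leaf, and inputs that do not require gradients (such as the constant `1`) show up as `None`. The `walk` helper is hypothetical, not part of PyTorch, and the exact node class names may vary between releases.

```python
import torch

w = torch.tensor([1.], requires_grad=True)
x = torch.tensor([2.], requires_grad=True)
a = torch.add(w, x)
b = torch.add(w, 1)
y = torch.mul(a, b)

def walk(node, depth=0, lines=None):
    """Hypothetical helper: collect the backward graph from `node`, depth-first."""
    if lines is None:
        lines = []
    if node is None:  # inputs that do not require grad have no node
        return lines
    name = type(node).__name__
    if hasattr(node, "variable"):  # AccumulateGrad nodes point at a leaf tensor
        name += f" -> leaf {node.variable.detach().tolist()}"
    lines.append("  " * depth + name)
    for child, _input_index in node.next_functions:
        walk(child, depth + 1, lines)
    return lines

graph_lines = walk(y.grad_fn)
print("\n".join(graph_lines))
```

Because both `a` and `b` depend on `w`, its `AccumulateGrad` node is reached twice: the graph is a directed acyclic graph of node objects linked through `next_functions`, not a tree.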

Thank you for your help!