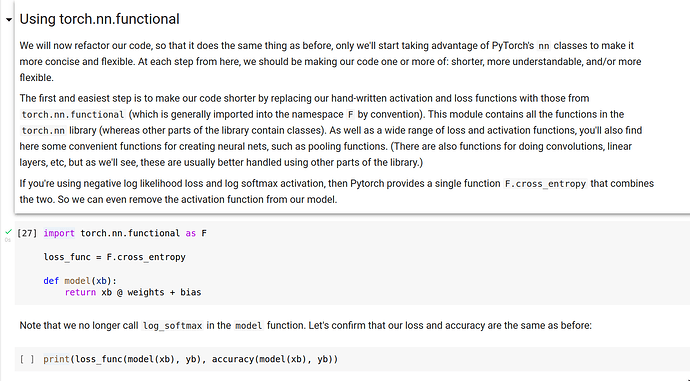

In the section where we replace the activation function with the built-in cross-entropy function, why do we remove the activation function from the calculation of the prediction accuracy? I had thought the activation function was still needed to compute the neural network's predictions. The screenshot is included above.

`F.cross_entropy` combines the log-softmax activation (`F.log_softmax`) and the negative log-likelihood loss (`F.nll_loss`) in a single function, so it expects the model's raw outputs (logits) as input.
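A quick way to see this is to compute the loss both ways on the same data. The sketch below uses random logits and labels (the batch of 4 and the 10 classes are arbitrary choices, picked to resemble MNIST) and compares `F.cross_entropy` against `F.log_softmax` followed by `F.nll_loss`:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# Arbitrary example data: 4 samples, 10 classes.
logits = torch.randn(4, 10)           # raw model outputs, no activation applied
targets = torch.randint(0, 10, (4,))  # integer class labels

# Built-in: applies log_softmax internally, then the NLL loss.
loss_builtin = F.cross_entropy(logits, targets)

# Manual: log_softmax activation followed by negative log-likelihood.
loss_manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)

print(torch.allclose(loss_builtin, loss_manual))  # True
```

If the model also applied `log_softmax` before calling `F.cross_entropy`, the activation would be applied twice, which is why it is removed from the model.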

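The tutorial is pointing out that `log_softmax` no longer needs to be called in the model, because `F.cross_entropy` now applies it internally. The same reasoning covers the accuracy calculation: predictions are taken with `torch.argmax`, and log-softmax only subtracts the same constant from every score in a row, so the largest raw output and the largest log-softmax output always belong to the same class. The activation is needed to turn outputs into (log-)probabilities, but not to pick the predicted class.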
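To check that dropping the activation leaves the predictions unchanged, the sketch below computes accuracy on raw outputs and on log-softmax outputs. The `accuracy` helper is assumed to match the one defined earlier in the tutorial (argmax over each row, then the fraction of matches); the small logits and labels are made-up values for illustration.

```python
import torch
import torch.nn.functional as F

def accuracy(out, yb):
    # Predicted class = index of the largest output in each row.
    preds = torch.argmax(out, dim=1)
    return (preds == yb).float().mean()

# Hypothetical raw outputs for 3 samples and 3 classes (no activation applied).
logits = torch.tensor([[2.0, -1.0, 0.5],
                       [0.1, 0.3, -2.0],
                       [-1.0, -0.5, 3.0]])
targets = torch.tensor([0, 2, 2])

acc_raw = accuracy(logits, targets)
acc_logsoftmax = accuracy(F.log_softmax(logits, dim=1), targets)

# log_softmax subtracts one constant per row, so argmax is unchanged.
print(torch.argmax(logits, dim=1))                        # tensor([0, 1, 2])
print(torch.argmax(F.log_softmax(logits, dim=1), dim=1))  # tensor([0, 1, 2])
print(acc_raw.item() == acc_logsoftmax.item())            # True (2 of 3 correct)
```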