I am very confused by the align_corners parameter in the PyTorch documentation. According to Wikipedia (https://en.wikipedia.org/wiki/Bilinear_interpolation), the bilinear interpolation formula gives results consistent with align_corners=True, which was the default before PyTorch 0.4.0.

When should I use align_corners=False?

Same problem here.

Can someone help?

Have you seen the note and examples under Upsample? I think they do a great job in explaining why.

Yeah, I checked the example under Upsample, but I don’t get it.

>>> input = torch.arange(1, 5, dtype=torch.float32).view(1, 1, 2, 2)  # 2x2 input from the docs example
>>> input_3x3 = torch.zeros(3, 3).view(1, 1, 3, 3)
>>> input_3x3[:, :, :2, :2].copy_(input)
tensor([[[[ 1., 2.],
          [ 3., 4.]]]])
>>> input_3x3
tensor([[[[ 1., 2., 0.],
          [ 3., 4., 0.],
          [ 0., 0., 0.]]]])
>>> m = nn.Upsample(scale_factor=2, mode='bilinear') # align_corners=False
>>> # Notice that values in top left corner are the same with the small input (except at boundary)
>>> m(input_3x3)
tensor([[[[ 1.0000, 1.2500, 1.7500, 1.5000, 0.5000, 0.0000],
          [ 1.5000, 1.7500, 2.2500, 1.8750, 0.6250, 0.0000],
          [ 2.5000, 2.7500, 3.2500, 2.6250, 0.8750, 0.0000],
          [ 2.2500, 2.4375, 2.8125, 2.2500, 0.7500, 0.0000],
          [ 0.7500, 0.8125, 0.9375, 0.7500, 0.2500, 0.0000],
          [ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000]]]])
>>> m = nn.Upsample(scale_factor=2, mode='bilinear', align_corners=True)
>>> # Notice that values in top left corner are now changed
>>> m(input_3x3)
tensor([[[[ 1.0000, 1.4000, 1.8000, 1.6000, 0.8000, 0.0000],
          [ 1.8000, 2.2000, 2.6000, 2.2400, 1.1200, 0.0000],
          [ 2.6000, 3.0000, 3.4000, 2.8800, 1.4400, 0.0000],
          [ 2.4000, 2.7200, 3.0400, 2.5600, 1.2800, 0.0000],
          [ 1.2000, 1.3600, 1.5200, 1.2800, 0.6400, 0.0000],
          [ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000]]]])

Whether align_corners is False or True, the top-left corner value is always 1.

Oh, my mistake. Now I get it.

Here is a one-dimensional example.

Suppose you want to resize the tensor [0, 1] to [?, ?, ?, ?], so factor = 2.

For now we only care about coordinates.

For mode='bilinear' and align_corners=False, the result is the same as in OpenCV and other popular image processing libraries (I guess). The corresponding coordinates are [-0.25, 0.25, 0.75, 1.25], calculated by x_original = (x_upsample + 0.5) / 2 - 0.5. You can then use these coordinates to interpolate.

For mode='bilinear' and align_corners=True, the corresponding coordinates are [0, 1/3, 2/3, 1]. From this you can see why it is called align_corners=True: the first and last output coordinates land exactly on the first and last input coordinates.

I will be very happy if you find this answer useful.

Talk is cheap, so here is the code!

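# Example values: upsample a length-2 signal to length 4
factor = 2
h_ori = 2                # h_ori is the height of the original image
h_up = h_ori * factor    # h_up is the height of the upsampled image

# align_corners = False
# x_up is a coordinate in the upsampled image,
# x_ori is the matching coordinate in the original image
x_ori_list = [(x_up + 0.5) / factor - 0.5 for x_up in range(h_up)]
print(x_ori_list)  # [-0.25, 0.25, 0.75, 1.25]

# align_corners = True
stride = (h_ori - 1) / (h_up - 1)  # requires h_up > 1
x_ori_list = []
# append the first coordinate
x_ori_list.append(0)
for i in range(1, h_up - 1):
    x_ori_list.append(0 + i * stride)
# append the last coordinate
x_ori_list.append(h_ori - 1)
print(x_ori_list)  # approximately [0, 1/3, 2/3, 1]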
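
To make the coordinate formulas above concrete, here is a minimal pure-Python sketch (no PyTorch needed) that maps each output index to a source coordinate and then interpolates linearly along one axis. The helper names source_coords and linear_resize are made up for illustration, and clamping out-of-range coordinates to the border is an assumption about how the edges are handled. Under that assumption it reproduces the first row of both Upsample outputs quoted earlier in the thread.

```python
import math


def source_coords(in_size, out_size, align_corners):
    """Map every output index to a (fractional) index in the input."""
    if align_corners:
        if out_size == 1:
            return [0.0]
        stride = (in_size - 1) / (out_size - 1)
        return [i * stride for i in range(out_size)]
    scale = in_size / out_size
    return [(i + 0.5) * scale - 0.5 for i in range(out_size)]


def linear_resize(values, out_size, align_corners):
    """Resize a 1-D list with linear interpolation (hypothetical helper)."""
    n = len(values)
    out = []
    for x in source_coords(n, out_size, align_corners):
        # Assumption: coordinates outside the input are clamped to the border.
        x = min(max(x, 0.0), float(n - 1))
        lo = int(math.floor(x))
        hi = min(lo + 1, n - 1)
        w = x - lo
        out.append((1.0 - w) * values[lo] + w * values[hi])
    return out


if __name__ == "__main__":
    for flag in (False, True):
        print(flag, linear_resize([1.0, 2.0], 4, flag))
        print(flag, linear_resize([1.0, 2.0, 0.0], 6, flag))
```

With align_corners=False, resizing [1, 2] to 4 values and [1, 2, 0] to 6 values both start with 1.0, 1.25, 1.75, which is what the docs comment about the top-left corner refers to. With align_corners=True the stride changes from 1/3 to 2/5 when the input grows, so the interior values change as well, even though the corner value stays at 1.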

I have the same doubt. The corners are the same, what is the difference?

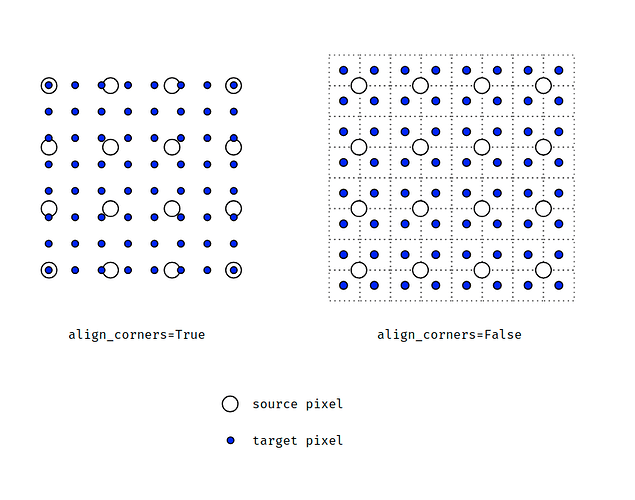

Here is a simple illustration I made showing how a 4x4 image is upsampled to 8x8.

When align_corners=True, pixels are regarded as a grid of points. Points at the corners are aligned.

When align_corners=False, pixels are regarded as 1x1 areas. Area boundaries, rather than their centers, are aligned.

Thanks for your image! Do you know whether we should use align_corners=True or align_corners=False in semantic segmentation tasks?

We should set it to True for better performance.

Set align_corners=True.

Hi,

Thank you for the nice picture. Can we add it to the kornia documentation for explaining the difference?

Sure. Feel free to use it.

Awesome pic thanks!

I had an issue with my U-Net not being equivariant to translations. It turns out align_corners=True was the culprit. As your figure clearly shows, in the “True” case there is a shift between the input and output grids that depends on location. This adds spatial bias in U-Nets. Your pic helped me see this fast.
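
One way to put numbers on that location-dependent shift is the sketch below. It assumes the input and output images cover the same physical extent (the "pixels are areas" view from the figure) and measures, for each output pixel, how far the position it samples from is from its own center, in input-pixel units. The function names are hypothetical and this is only one possible reading of "shift between the grids".

```python
def pixel_center(i, in_size, out_size):
    """Center of output pixel i, in input index units, when both images
    are assumed to cover the same extent."""
    return (i + 0.5) * in_size / out_size - 0.5


def sample_position(i, in_size, out_size, align_corners):
    """Input index coordinate that output pixel i is interpolated from."""
    if align_corners:
        return i * (in_size - 1) / (out_size - 1)
    # Without corner alignment the sample sits exactly at the pixel center.
    return pixel_center(i, in_size, out_size)


def grid_offsets(in_size, out_size, align_corners):
    """Sampling position minus pixel center, for every output pixel."""
    return [
        sample_position(i, in_size, out_size, align_corners)
        - pixel_center(i, in_size, out_size)
        for i in range(out_size)
    ]


if __name__ == "__main__":
    # The 4 -> 8 case from the illustration.
    print("align_corners=True :", grid_offsets(4, 8, True))
    print("align_corners=False:", grid_offsets(4, 8, False))
```

For the 4 to 8 case, the offset with align_corners=True runs from +0.25 at the first pixel down to -0.25 at the last one, so it depends on where you are in the image. With align_corners=False it is zero everywhere.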

FYI the latest documentation is inconsistent about how bicubic works with align_corners: Upsample — PyTorch master documentation

In the documentation for the align_corners argument, bicubic is not listed after “This only has effect when mode is”. However, the warning section below it does mention bicubic, and align_corners does seem to affect it when used:

import torch
import torch.nn.functional as F
import numpy as np

z = torch.from_numpy(np.array([
    [1, 2],
    [3, 4]
], dtype=np.float32)[np.newaxis, np.newaxis, ...])

print(F.interpolate(z, scale_factor=2, mode='bicubic', align_corners=True)[0, 0])
print(F.interpolate(z, scale_factor=2, mode='bicubic', align_corners=False)[0, 0])

tensor([[1.0000, 1.3148, 1.6852, 2.0000],
        [1.6296, 1.9444, 2.3148, 2.6296],
        [2.3704, 2.6852, 3.0556, 3.3704],
        [3.0000, 3.3148, 3.6852, 4.0000]])

tensor([[0.6836, 1.0156, 1.5625, 1.8945],
        [1.3477, 1.6797, 2.2266, 2.5586],
        [2.4414, 2.7734, 3.3203, 3.6523],
        [3.1055, 3.4375, 3.9844, 4.3164]])
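
For anyone who wants to see where those numbers come from, here is a rough pure-Python sketch of separable cubic-convolution resizing. Two things are assumptions on my part rather than something stated in this thread: the kernel coefficient A = -0.75 and clamping out-of-range taps to the border. They were picked because, with them, the sketch matches the two printed tensors to about four decimals. All function names are made up for illustration.

```python
import math

A = -0.75  # assumed cubic-convolution coefficient


def cubic_weights(t):
    """Weights of the four taps around a sample at fractional offset t."""
    def near(x):  # kernel for distances in [0, 1]
        return ((A + 2) * x - (A + 3)) * x * x + 1

    def far(x):   # kernel for distances in [1, 2]
        return ((A * x - 5 * A) * x + 8 * A) * x - 4 * A

    return [far(t + 1), near(t), near(1 - t), far(2 - t)]


def source_coord(i, in_size, out_size, align_corners):
    if align_corners:
        return i * (in_size - 1) / (out_size - 1) if out_size > 1 else 0.0
    return (i + 0.5) * in_size / out_size - 0.5


def cubic_resize_1d(values, out_size, align_corners):
    n = len(values)
    out = []
    for i in range(out_size):
        x = source_coord(i, n, out_size, align_corners)
        base = math.floor(x)
        weights = cubic_weights(x - base)
        # Assumption: taps outside the input are clamped to the border.
        taps = [values[min(max(base - 1 + k, 0), n - 1)] for k in range(4)]
        out.append(sum(w * v for w, v in zip(weights, taps)))
    return out


def bicubic_resize(image, out_h, out_w, align_corners):
    """Resize a list-of-lists image: rows first, then columns."""
    rows = [cubic_resize_1d(r, out_w, align_corners) for r in image]
    cols = [cubic_resize_1d(list(c), out_h, align_corners) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]


if __name__ == "__main__":
    z = [[1.0, 2.0], [3.0, 4.0]]
    for flag in (True, False):
        for row in bicubic_resize(z, 4, 4, flag):
            print(flag, [round(v, 4) for v in row])
```

Note that with align_corners=False the result leaves the input range [1, 4] (0.6836 and 4.3164 at the corners). That is expected for a cubic kernel, since some of its weights are negative, and the corner samples lie outside the outermost pixel centers in that mode.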

So you mean that for the U-Net we should set align_corners=False?

I guess they fixed the docs: it now seems to say that align_corners can be set for all interpolation modes except nearest neighbor?

Yes, in my case that was what I needed if I wanted no bias based on location in the image.

Soren

In my local experiments, this seems to be true: setting align_corners=True generally gives results faster for torch.nn.functional.grid_sample than setting align_corners=False.

But I am a little confused about why that is. Do you know of any reason behind it?