Tanh --> Xavier

ReLU --> He

So Pytorch uses He when its ReLU? Im confused what pytorch does.

Sorry ptrblck, Im confused…pytorch uses Xavier or He depending on the activation? Thats what klory seems to imply but the code looks as follows:

def reset_parameters(self):

stdv = 1. / math.sqrt(self.weight.size(1))

self.weight.data.uniform_(-stdv, stdv)

if self.bias is not None:

self.bias.data.uniform_(-stdv, stdv)Tanh → Xavier

ReLU → He

No that’s not correct, PyTorch’s initialization is based on the layer type, not the activation function (the layer doesn’t know about the activation upon weight initialization).

For the linear layer, this would be somewhat similar to He initialization, but not quite:

def reset_parameters(self):

stdv = 1. / math.sqrt(self.weight.size(1))

self.weight.data.uniform_(-stdv, stdv)

if self.bias is not None:

self.bias.data.uniform_(-stdv, stdv)

Ie., when I remember correctly, He init is “sqrt(6 / fan_in)” whereas in PyTorch Linear layer it’s “1. / sqrt(fan_in)”

Yeah, you’re correct, I just check their code for linear.py and conv.py, it seems they’re all using Xavier right? (I got the Xavier explanation from here)

are they all using Xavier?

it seems they’re all using Xavier right?

Doesn’t xavier also include fan_out though? Here, I can only see input channels, not output channels.

so is it just a unpublished made up pytorch init?

Maybe I am overlooking sth or don’t recognize it, but I think so

no, they are from respective well-established published papers actually. e.g., linear init is from “Efficient Backprop”, LeCun’99.

Thanks! I appreciate the it.

this one I assume:

Maybe it would be worthwhile adding comments to the docstrings? Would make it easier for the next person to find plus more convenient to refer to in papers.

For PyTorch 1.0, most layers are initialized using Kaiming Uniform method. Example layers include Linear, Conv2d, RNN etc. If you are using other layers, you should look up that layer on this doc. If it says weights are initialized using U(...) then its Kaiming Uniform method. This is in line with PyTorch’s philosophy of “reasonable defaults”.

If you want to override default initialization then see this answer.

A gentle remark, maybe it makes sense to add a reference to the default initialization modules implementation, in torch, on the documentation page of say Conv1d and other modules. Probably would make life much simpler.

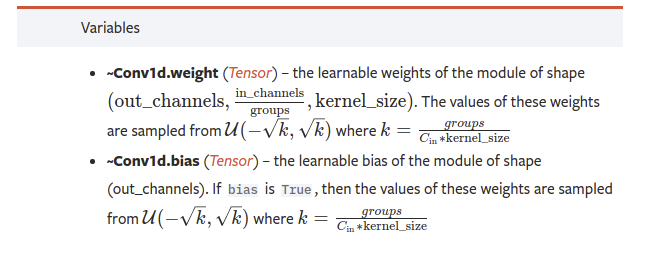

It is already mentioned in the variables section that explain what are the parameter shape and how they are initialized: Conv1d — PyTorch master documentation

Is that the information you were looking for? Or something else?

I mean the default initialization is done using He normal method. So it makes sense to add a hyper link to the specific section of the document present at torch.nn.init — PyTorch 2.1 documentation. This can be added at

This would make life easier since one could just jump to the hyperlink to check the function signature as well as implementation details.

Yes sure.

That doc preceedes the existence of nn.init IIRC so that would be the reason.

Can you can open an issue on github for that request?

Sure thing. I will set it up