How can I implement this Keras snippet in PyTorch? A VAE uses it to generate image morphs from a latent vector z (the main article is here):

# display a 2D manifold of the digits

n = 15 # figure with 15x15 digits

digit_size = 28

# linearly spaced coordinates on the unit square were transformed

# through the inverse CDF (ppf) of the Gaussian to produce values

# of the latent variables z, since the prior of the latent space

# is Gaussian

z1 = norm.ppf(np.linspace(0.01, 0.99, n))

z2 = norm.ppf(np.linspace(0.01, 0.99, n))

z_grid = np.dstack(np.meshgrid(z1, z2))

x_pred_grid = decoder.predict(z_grid.reshape(n*n, latent_dim)) \
    .reshape(n, n, digit_size, digit_size)

plt.figure(figsize=(10, 10))

plt.imshow(np.block(list(map(list, x_pred_grid))), cmap='gray')

plt.show()

I came up with the following snippet, but the outcome is different!

n = 10 # figure with 10x10 digits

digit_size = 28

# linearly spaced coordinates on the unit square were transformed

# through the inverse CDF (ppf) of the Gaussian to produce values

# of the latent variables z, since the prior of the latent space

# is Gaussian

z1 = torch.linspace(0.01, 0.99, n)

z2 = torch.linspace(0.01, 0.99, n)

z_grid = np.dstack(np.meshgrid(z1, z2))

z_grid = torch.from_numpy(z_grid).to(device)

z_grid = z_grid.reshape(-1, embeddingsize)

x_pred_grid = model.decoder(z_grid)

x_pred_grid= x_pred_grid.cpu().detach().numpy().reshape(-1, 1, 28, 28).transpose(0,2,3,1)

plt.figure(figsize=(10, 10))

plt.imshow(np.block(list(map(list, x_pred_grid))), cmap='gray')

plt.show()

I have two problems. First, I don't know what the PyTorch counterpart of norm.ppf is, so I left it out for now. Second, I can't reproduce the reshape in this line:

x_pred_grid = decoder.predict(z_grid.reshape(n*n, latent_dim)) \
    .reshape(n, n, digit_size, digit_size)

The author feeds the decoder an input of shape (n*n, latent_dim), which for n = 10 and latent_dim = 10 is (100, 10).

However, when I reshape to (n*n, latent_dim), I get this error:

RuntimeError: shape '[100, 10]' is invalid for input of size 200

So I had to reshape to (-1, embeddingsize) instead, and I guess this is why my output is different.
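The size of 200 in the error message can be traced with NumPy alone. The sketch below is a shape check under the assumption that n = 10: stacking two meshgrid outputs gives n*n points with only 2 coordinates each, so a reshape to (n*n, latent_dim) can only succeed when latent_dim is 2.

```python
import numpy as np

n = 10

# Stand-in coordinates; the values do not matter for the shape argument.
z1 = np.linspace(0.01, 0.99, n)
z2 = np.linspace(0.01, 0.99, n)

# meshgrid returns two (n, n) arrays; dstack stacks them along a new last axis.
z_grid = np.dstack(np.meshgrid(z1, z2))
print(z_grid.shape, z_grid.size)  # (10, 10, 2) 200

# Valid: one row per grid point, two latent coordinates per row.
flat_2d = z_grid.reshape(n * n, 2)

# reshape(-1, 10) succeeds, but it packs five (z1, z2) pairs into each row,
# so the rows are no longer grid points and only 20 rows remain.
flat_10d = z_grid.reshape(-1, 10)
print(flat_10d.shape)  # (20, 10)

# 200 elements cannot fill a (100, 10) array, hence the error.
try:
    z_grid.reshape(n * n, 10)
    reshape_failed = False
except ValueError:
    reshape_failed = True
```

With reshape(-1, 10) the decoder would receive 20 latent vectors instead of 100, which would be consistent with a plot that looks different from the Keras one.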

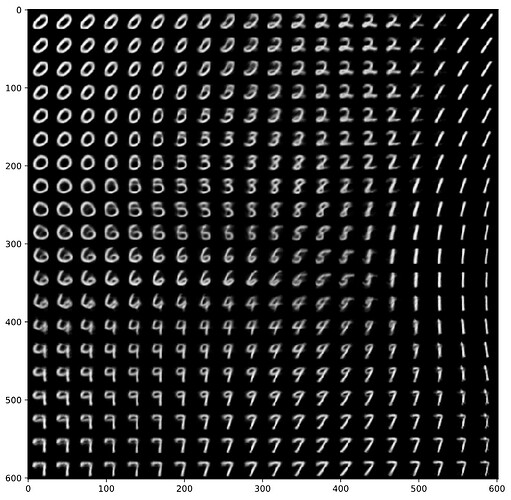

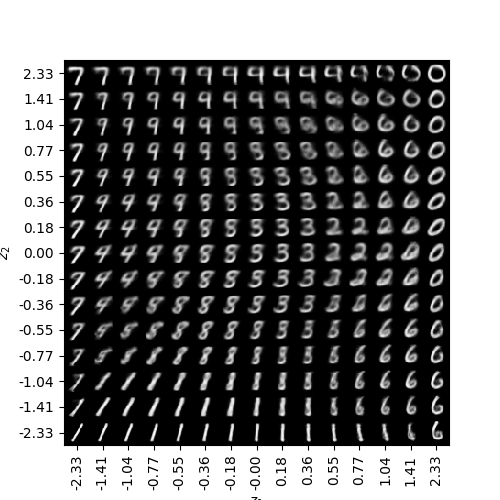

for the record the keras output is like this :

and mine is like this :

So how can I closely replicate this Keras code in PyTorch? Where am I going wrong?
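One possible port is sketched below; it is not a confirmed fix. It assumes a 2-dimensional latent space, since the Keras grid only varies two coordinates. The `decoder` here is a hypothetical, randomly initialised stand-in for the trained `model.decoder`. `torch.distributions.Normal(...).icdf` plays the role of `norm.ppf`, and `indexing="xy"` matches the default of `np.meshgrid`.

```python
import torch
from torch import nn

n = 10
digit_size = 28
latent_dim = 2  # assumption: the 2D manifold plot needs a 2-dimensional latent space

# Assumption: untrained stand-in for the trained model.decoder.
decoder = nn.Sequential(
    nn.Linear(latent_dim, digit_size * digit_size),
    nn.Sigmoid(),
)

# Inverse CDF of the standard normal, the role norm.ppf plays in the Keras code.
std_normal = torch.distributions.Normal(0.0, 1.0)
z1 = std_normal.icdf(torch.linspace(0.01, 0.99, n))
z2 = std_normal.icdf(torch.linspace(0.01, 0.99, n))

# Build the (n*n, 2) batch of latent points, one row per grid cell.
grid_x, grid_y = torch.meshgrid(z1, z2, indexing="xy")
z_grid = torch.stack([grid_x, grid_y], dim=-1).reshape(n * n, latent_dim)

with torch.no_grad():
    x_pred_grid = decoder(z_grid).reshape(n, n, digit_size, digit_size)

# Tile the n x n images into one (n*28, n*28) canvas, as the np.block call does.
canvas = (
    x_pred_grid.permute(0, 2, 1, 3)
    .reshape(n * digit_size, n * digit_size)
    .numpy()
)
```

The result can be shown with `plt.imshow(canvas, cmap='gray')`. If the decoder expects a latent vector of size 10, the remaining 8 coordinates have to be chosen separately, for example fixed at zero; that choice is an assumption and is not part of the Keras snippet.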

Thank you all in advance