Why does the terminal report this error?

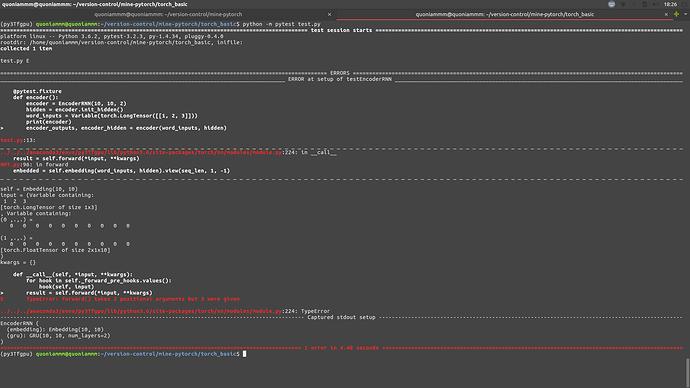

TypeError: forward() takes 2 positional arguments but 3 were given

I only passed two parameters to encoder().

Can you help me? Thanks.
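A note on reading the message: Python counts the implicit `self` as a positional argument, so "takes 2 ... but 3 were given" means some `forward(self, x)` received two explicit arguments. The sketch below reproduces the same wording without PyTorch. The `Layer` class is a hypothetical stand-in, not a real PyTorch class; it only mimics how a module hands its call arguments to `forward`.

```python
class Layer:
    """Hypothetical stand-in for a layer whose forward accepts one input."""

    def forward(self, x):
        return x

    def __call__(self, *args):
        # Pass every call argument on to forward(), similar to how a module call works.
        return self.forward(*args)


layer = Layer()
message = None
try:
    # Two explicit arguments plus the implicit self make three positional arguments.
    layer("word_inputs", "hidden")
except TypeError as err:
    message = str(err)

print(message)
```

So the `forward` named in the traceback is not necessarily the one you wrote; it can belong to a layer called inside your own `forward`.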

import torch
from torch.autograd import Variable
import pytest

from NMT import EncoderRNN


@pytest.fixture
def encoder():
    encoder = EncoderRNN(10, 10, 2)
    hidden = encoder.init_hidden()
    word_inputs = Variable(torch.LongTensor([[1, 2, 3]]))
    print(encoder)
    encoder_outputs, encoder_hidden = encoder(word_inputs, hidden)
    # def final_print():
    #     print("Test finished.")
    # request.addfinalizer(final_print)
    return encoder, word_inputs, encoder_outputs, encoder_hidden


# Test that the sizes of the hidden output and init_hidden match the expected values
def testEncoderRNN(encoder):
    assert encoder.input_size == 10, "input_size is not right"

#
# def testAttn(attn):
#     pass
#
# def testDecoderRNN(decoder):
#     pass

class EncoderRNN(nn.Module):
    def __init__(self, input_size, hidden_size, n_layers=1):
        super(EncoderRNN, self).__init__()
        self.input_size = input_size
        self.hidden_size = hidden_size
        self.n_layers = n_layers
        # self.iscuda = iscuda
        self.embedding = nn.Embedding(input_size, hidden_size)
        self.gru = nn.GRU(hidden_size, hidden_size, n_layers)

    def forward(self, word_inputs, hidden):
        seq_len = len(word_inputs)
        embedded = self.embedding(word_inputs, hidden).view(seq_len, 1, -1)
        output, hidden = self.gru()
        return output, hidden

    def init_hidden(self):
        hidden = Variable(torch.zeros(self.n_layers, 1, self.hidden_size))
        # if self.iscuda: hidden = hidden.cuda()
        return hidden
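Judging from the code above, the likely cause is inside `forward`, not the call `encoder(word_inputs, hidden)`. `self.embedding(word_inputs, hidden)` passes two arguments to `nn.Embedding`, whose `forward` accepts only the index tensor. `self.gru()` is also called with no arguments. Below is a minimal corrected sketch, not a definitive implementation. It assumes a PyTorch version where plain tensors can be used without `Variable`, and it assumes `word_inputs` has shape `(1, seq_len)` as in the fixture, so the sequence length is read from dimension 1 rather than with `len()`.

```python
import torch
import torch.nn as nn


class EncoderRNN(nn.Module):
    def __init__(self, input_size, hidden_size, n_layers=1):
        super().__init__()
        self.input_size = input_size
        self.hidden_size = hidden_size
        self.n_layers = n_layers
        self.embedding = nn.Embedding(input_size, hidden_size)
        self.gru = nn.GRU(hidden_size, hidden_size, n_layers)

    def forward(self, word_inputs, hidden):
        # Assumption: word_inputs is a LongTensor of shape (1, seq_len).
        seq_len = word_inputs.size(1)
        # The embedding layer takes only the indices; hidden goes to the GRU.
        embedded = self.embedding(word_inputs).view(seq_len, 1, -1)
        # The GRU needs both the embedded sequence and the initial hidden state.
        output, hidden = self.gru(embedded, hidden)
        return output, hidden

    def init_hidden(self):
        # Shape: (n_layers, batch=1, hidden_size)
        return torch.zeros(self.n_layers, 1, self.hidden_size)


encoder = EncoderRNN(10, 10, 2)
word_inputs = torch.LongTensor([[1, 2, 3]])
encoder_outputs, encoder_hidden = encoder(word_inputs, encoder.init_hidden())
print(encoder_outputs.shape, encoder_hidden.shape)
```

One more thing to check in the test file: the fixture returns a tuple, so `encoder.input_size` in `testEncoderRNN` would need to read the first element (for example `encoder[0].input_size`) or the fixture should return only the module.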