Hello!

While training my model, I ran into a question about the training loss.

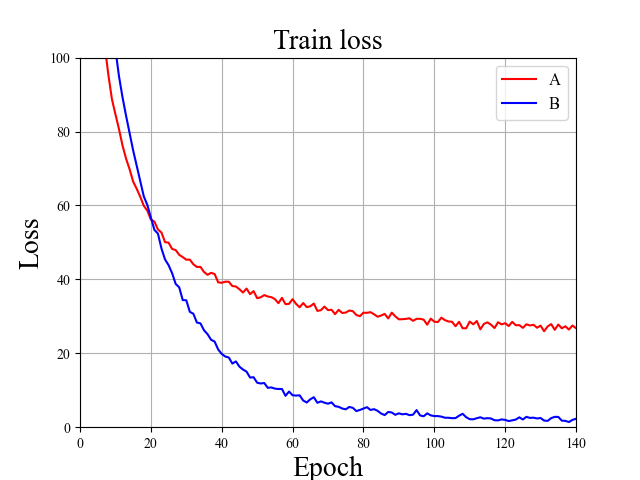

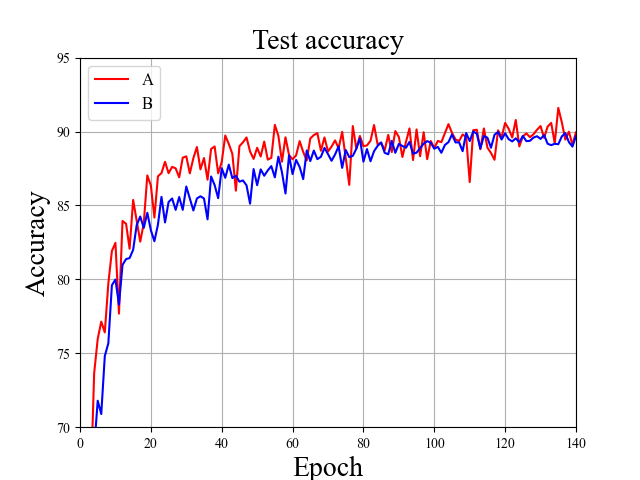

These plots show the results of training with two different optimizers, A and B.

The training loss values of A and B differ considerably, yet their test accuracy is almost the same, and in some cases A's is even higher.

Does this mean that the training loss has no significant relationship with the test accuracy?

In my opinion, from a traditional optimization perspective, the optimizer that reaches the lower training loss should be the better one.
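Part of the puzzle may be that the loss and accuracy measure different things. Here is a toy sketch that assumes the loss is mean cross-entropy. It uses made-up probabilities for two hypothetical models, P and Q, not the A/B runs from the plots. Cross-entropy rewards confidence in the true class, while accuracy only checks whether the top-scoring class is correct. So a model can have the higher loss and still the higher accuracy:

```python
import math

def cross_entropy(probs, labels):
    """Mean negative log-probability assigned to the true class."""
    return sum(-math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)

def accuracy(probs, labels):
    """Fraction of samples whose highest-probability class is the true class."""
    correct = sum(
        1 for p, y in zip(probs, labels)
        if max(range(len(p)), key=p.__getitem__) == y
    )
    return correct / len(labels)

# Hypothetical data: 4 samples, 3 classes, true class is always 0.
labels = [0, 0, 0, 0]

# Hypothetical model P: correct on every sample, but with low confidence.
probs_p = [[0.4, 0.3, 0.3]] * 4

# Hypothetical model Q: very confident on three samples, wrong on the fourth.
probs_q = [[0.99, 0.005, 0.005]] * 3 + [[0.3, 0.6, 0.1]]

loss_p, acc_p = cross_entropy(probs_p, labels), accuracy(probs_p, labels)
loss_q, acc_q = cross_entropy(probs_q, labels), accuracy(probs_q, labels)

print(f"P: loss={loss_p:.3f} acc={acc_p:.2f}")
print(f"Q: loss={loss_q:.3f} acc={acc_q:.2f}")
```

In this toy case P has the higher cross-entropy (about 0.916 vs. about 0.309) but the higher accuracy (1.00 vs. 0.75). Note also that in my plots the loss is measured on the training set while the accuracy is measured on the test set, which is a second reason the two need not move together.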

What do you think about this issue?