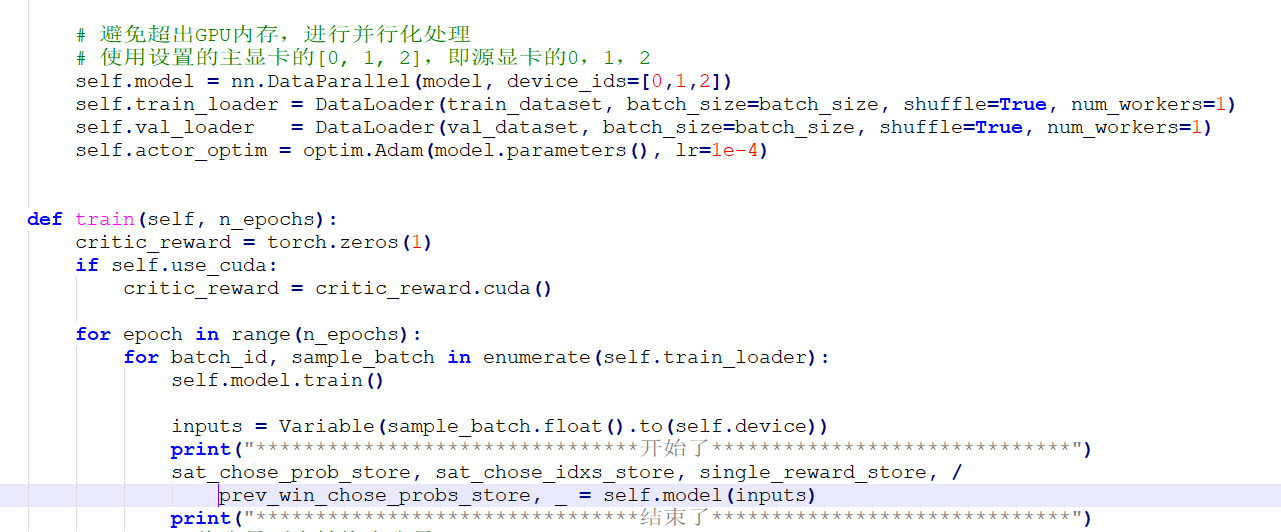

I was running out of GPU memory during model training, so I used only PyTorch's nn.DataParallel to spread the model across GPUs, and wrote a simple program to test it. The test worked, but real model training hangs at the model input step, that is, at `self.model(inputs)`. There is no error message; it just hangs. Can you help me? Thanks. The code is as follows:


Which PyTorch version are you using?

If it’s an older one, could you update to the latest stable version (1.5)?

Also, is your model working on a single GPU?


I had the same problem.

After reading @ptrblck's reply, I updated PyTorch to version 1.6.

That did not fix it.

I then found that the hang was caused by a deadlock in another part of my code.

My problem is fixed now.

You may need to check the other parts of your code too.
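In case it helps with tracking down where a hang like this actually sits, here is a minimal sketch using Python's standard `faulthandler` module. It dumps the stack of every thread if a call runs longer than a timeout, which shows which line is blocked. The helper name `run_with_hang_watchdog` and its parameters are my own invention for illustration, not part of PyTorch, and it only reports Python-level stacks, so a hang deep inside a native call will show up as the Python line that made the call.

```python
import faulthandler
import sys


def run_with_hang_watchdog(step_fn, timeout_s=60.0, log_file=None):
    """Run step_fn() and return its result.

    If step_fn() is still running after timeout_s seconds, the stacks of
    all Python threads are written to log_file (default: sys.stderr).
    The call itself is not interrupted; this only reports where it is.
    (Hypothetical helper, not a PyTorch API.)
    """
    if log_file is None:
        log_file = sys.stderr
    # Arm a one-shot watchdog; exit=False keeps the process alive after the dump.
    faulthandler.dump_traceback_later(timeout_s, exit=False, file=log_file)
    try:
        return step_fn()
    finally:
        # Disarm the watchdog once the call returns or raises.
        faulthandler.cancel_dump_traceback_later()


# Example use inside a training loop (assumes `self.model` and `inputs` exist):
#     outputs = run_with_hang_watchdog(lambda: self.model(inputs), timeout_s=120)
```

If the dump shows the main thread waiting somewhere other than the forward call (for example on a lock, a queue, or a data loader worker), that is probably the part to look at first.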