I have been working on a project that performs monocular depth estimation from a single RGB image. By modifying open-source code from GitHub, I managed to obtain results like the following.

[Image 1: posted in a reply below]

Note that the result above is a prediction made at a resolution of 2560 x 1920, while the model was trained on 640 x 480 images, hence the structural inconsistency visible in the image above. I want to reduce or eliminate this structural inconsistency, and I decided to do so by adding another term to the existing loss function, which is:

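```python
import torch
import torch.nn as nn


class silog_loss(nn.Module):
    def __init__(self, variance_focus):
        super(silog_loss, self).__init__()
        self.variance_focus = variance_focus

    def forward(self, depth_est, depth_gt, mask):
        # Log-difference between prediction and ground truth on valid pixels only
        d = torch.log(torch.abs(depth_est[mask])) - torch.log(depth_gt[mask])
        # Scale-invariant log error: sqrt(mean(d^2) - lambda * mean(d)^2), scaled by 10
        return torch.sqrt((d ** 2).mean() - self.variance_focus * (d.mean() ** 2)) * 10.0
```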

This is the scale-invariant logarithmic (SILog) loss, a standard depth regression loss in log space. Note that `depth_est` and `depth_gt` are tensors of shape (Batchsize, Channel, Width, Height) = (8, 1, 640, 480).
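For reference, with $d_i = \log|\hat{y}_i| - \log y_i$ over the $n$ valid pixels and $\lambda$ = `variance_focus`, the code computes

$$L_{\text{silog}} = 10\sqrt{\frac{1}{n}\sum_i d_i^2 - \lambda\Big(\frac{1}{n}\sum_i d_i\Big)^2}.$$

With $\lambda = 1$ the term under the root is the variance of $d$, so multiplying the whole prediction by a constant leaves the loss unchanged; $\lambda < 1$ keeps part of the penalty on global scale. The short NumPy check below illustrates this. `silog_np` is a hypothetical helper that mirrors `silog_loss.forward`; it is not code from the original repository, and the random data is made up for the demonstration.

```python
import numpy as np


def silog_np(depth_est, depth_gt, mask, variance_focus):
    # Hypothetical NumPy mirror of silog_loss.forward (illustration only)
    d = np.log(np.abs(depth_est[mask])) - np.log(depth_gt[mask])
    return np.sqrt((d ** 2).mean() - variance_focus * d.mean() ** 2) * 10.0


rng = np.random.default_rng(0)
gt = rng.uniform(1.0, 10.0, size=(4, 4))
est = gt * rng.uniform(0.9, 1.1, size=(4, 4))  # prediction within +/-10% of gt
mask = gt > 2.0                                  # pretend some pixels are invalid

for lam in (1.0, 0.85):
    # Multiplying the prediction by 2 adds log(2) to every d_i
    print(lam, silog_np(est, gt, mask, lam), silog_np(2.0 * est, gt, mask, lam))
```

With `lam = 1.0` the two printed values agree up to floating-point rounding; with `lam = 0.85` the rescaled prediction gets a noticeably higher loss.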

I then added a multi-scale gradient loss on top of the regression loss above, using the code below:

```python
import torch
import torch.nn as nn
import torch.nn.functional as torch_nn_func


class MSG_loss(nn.Module):
    def __init__(self):
        super(MSG_loss, self).__init__()

    def forward(self, depth_est, depth_gt, mask):
        # Zero out invalid pixels in both maps
        depth_est = depth_est * mask.to(torch.float)
        depth_gt = depth_gt * mask.to(torch.float)

        # Gradient loss at full resolution, then at 1/2, 1/4, 1/8 and 1/16
        gradient_loss = imgrad_loss(depth_est, depth_gt)
        for _ in range(4):
            depth_est = torch_nn_func.interpolate(depth_est, scale_factor=(0.5, 0.5), mode='nearest')
            depth_gt = torch_nn_func.interpolate(depth_gt, scale_factor=(0.5, 0.5), mode='nearest')
            gradient_loss = gradient_loss + imgrad_loss(depth_est, depth_gt)

        return 0.005 * gradient_loss


def imgrad_loss(pred, gt):
    # L1 difference between Sobel gradients of prediction and ground truth
    grad_y, grad_x = imgrad(pred)
    grad_y_gt, grad_x_gt = imgrad(gt)
    grad_y_diff = torch.abs(grad_y - grad_y_gt)
    grad_x_diff = torch.abs(grad_x - grad_x_gt)
    # if mask is not None:
    #     grad_y_diff = grad_y_diff[mask]
    #     grad_x_diff = grad_x_diff[mask]
    return torch.mean(grad_y_diff) + torch.mean(grad_x_diff)


def imgrad(img):
    # Average over channels -> (N, 1, H, W)
    img = torch.mean(img, 1, True)
    # Fixed Sobel kernels for the x and y derivatives, on the same device/dtype as img
    fx = torch.tensor([[1, 0, -1], [2, 0, -2], [1, 0, -1]], dtype=img.dtype, device=img.device)
    fy = torch.tensor([[1, 2, 1], [0, 0, 0], [-1, -2, -1]], dtype=img.dtype, device=img.device)
    # padding=1 (zero padding) keeps the output the same size as the input
    grad_x = torch_nn_func.conv2d(img, fx.view(1, 1, 3, 3), padding=1)
    grad_y = torch_nn_func.conv2d(img, fy.view(1, 1, 3, 3), padding=1)
    return grad_y, grad_x
```
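One detail that may be worth checking (an observation about the code above, not a confirmed cause of the artifacts): multiplying by the mask sets invalid pixels to 0, so the Sobel filters also respond at every valid/invalid boundary and at the zero-padded image border, and those responses are included in `torch.mean`. The NumPy sketch below illustrates the commented-out masking idea in `imgrad_loss`: it downsamples prediction, ground truth and mask together by keeping every other pixel (for even sizes such as 640 x 480 this should match `mode='nearest'` with `scale_factor=0.5`) and averages the gradient error only over pixels whose whole 3x3 neighbourhood is valid. `sobel`, `valid_interior` and `masked_msg_loss` are hypothetical names, and the function handles a single (H, W) map rather than a batch.

```python
import numpy as np

# Sobel kernels, same values as fx / fy in imgrad() above
FX = np.array([[1, 0, -1], [2, 0, -2], [1, 0, -1]], dtype=float)
FY = np.array([[1, 2, 1], [0, 0, 0], [-1, -2, -1]], dtype=float)


def sobel(img, k):
    """3x3 cross-correlation (what conv2d computes) on the interior only, no padding."""
    h, w = img.shape
    out = np.zeros((h - 2, w - 2))
    for i in range(3):
        for j in range(3):
            out += k[i, j] * img[i:i + h - 2, j:j + w - 2]
    return out


def valid_interior(mask):
    """True where the full 3x3 neighbourhood is valid (aligned with sobel() output)."""
    h, w = mask.shape
    ok = np.ones((h - 2, w - 2), dtype=bool)
    for i in range(3):
        for j in range(3):
            ok &= mask[i:i + h - 2, j:j + w - 2]
    return ok


def masked_msg_loss(pred, gt, mask, scales=5, weight=0.005):
    """Hypothetical masked variant of MSG_loss for one (H, W) depth map."""
    total = 0.0
    for _ in range(scales):
        if min(pred.shape) < 3:
            break  # too small for a 3x3 window
        ok = valid_interior(mask)
        if ok.any():
            for k in (FX, FY):
                diff = np.abs(sobel(pred, k) - sobel(gt, k))
                total += diff[ok].mean()
        # Nearest-neighbour downsampling by 2: keep every other row/column
        pred, gt, mask = pred[::2, ::2], gt[::2, ::2], mask[::2, ::2]
    return weight * total


rng = np.random.default_rng(0)
gt = rng.uniform(1.0, 10.0, size=(32, 32))
mask = np.ones_like(gt, dtype=bool)
mask[:, :8] = False   # e.g. a strip with no ground truth
pred = gt.copy()
pred[:, :8] = 0.0     # whatever is predicted there is ignored
print(masked_msg_loss(pred, gt, mask))
```

Because every valid 3x3 window matches the ground truth exactly, the printed loss is 0.0 no matter what the prediction contains in the invalid strip. In PyTorch, the same idea would roughly amount to eroding the mask with a 3x3 window before indexing `grad_x_diff` / `grad_y_diff`, as in the commented-out lines.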

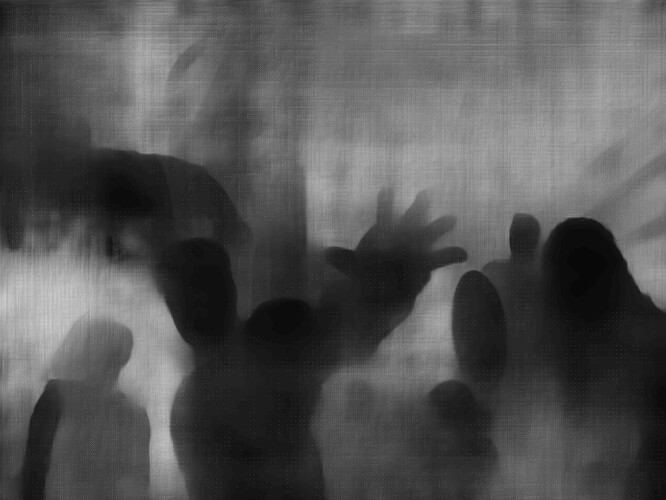

As shown in the code, I multiply the multi-scale gradient loss by 0.005, and the resulting model predicts depth maps with scattered white noise, as shown below (it is subtle; you have to zoom in to see the white dots):

[Image 2: posted in a reply below]

When the multi-scale gradient loss is multiplied by 0.1 instead, the results look like this:

To summarize: when the weight of the multi-scale gradient loss is high, the model gives a nice prediction with clear edges and better structural consistency, but with several white lines in the image (so the loss does seem to work and has a positive impact on the model).

Reducing the weight of the multi-scale gradient loss reduces the white lines, but white dots appear and the improvement in structural consistency is minimal (the negative impact of the loss shrinks, but so does its positive impact).

I am continuing training while lowering the weight of the multi-scale gradient loss further, but I doubt the model will retain any improvement once the white dots are fully eliminated. I am stuck here right now, and any suggestions/advice are greatly appreciated!!