I’d like to know the time ratio of each module during the pre-training process of language models, such as the proportion of Attention. How much impact does it have on the total training time? GPT-4 and LLama2-70B provided me with answers (as shown in the picture). Who should I believe, and is there any professional documentation explaining this?

Profile your model and check the real timeline on your system and setup.

I may not be able to conduct the experiment due to resource issues, so if there are documentation and links that can help me, I would be extremely grateful !!!

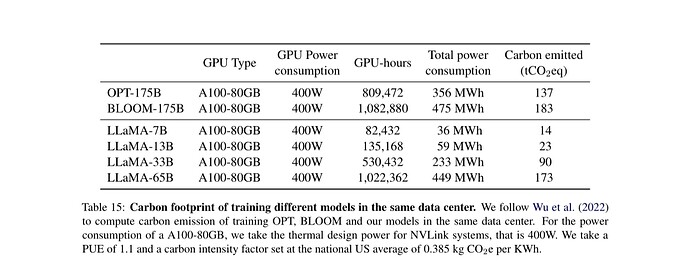

Most open source LLMs have also published a paper about it. In most cases, the paper describes the resources and training time involved. For example, Llama:

With very limited resources, even just CPU ram, you could create a very small version of the model in question, say with only 1 transformer layer, a small embedding layer, perhaps 10,000 tokens wide and 128 elements deep, and then set the model to 4 heads and 128 dim feedforward, and use a loop with timeit or torch.utils.benchmark.Timer. This should run relatively quickly.

You can then time test each part of the model and calculate the percentage of time the embedding layer, attention layer, etc. takes from there and extrapolate those values.

Of course, GPUs will be much faster and may vary some in how they approach various matrix operations vs. CPU on the backend. Additionally, various GPUs will vary in processing time, depending on a host of factors, including dtype used, model size, number of cores, throughput, etc.

Thank you for your response. I appreciate your feedback.