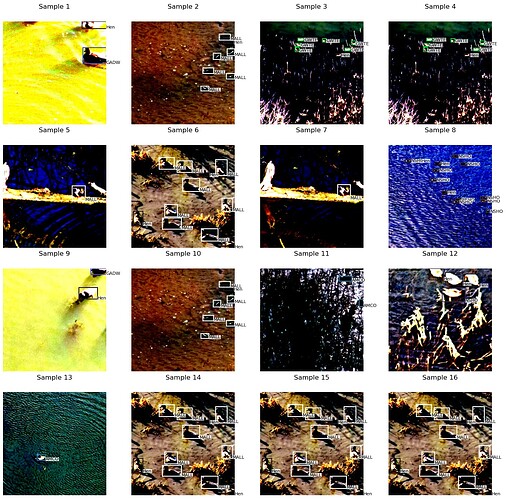

I’m fairly new to PyTorch, so maybe the answer will be obvious to someone else. I’ve got a custom dataset for object detection that returns batches of images and their objects (dictionaries containing boxes, labels, image_id, area, iscrowd). However, when I instantiate and load the custom dataset (see code below), duplicate samples (with the exact same transformations applied) show up in a batch. I’ve included a figure at the bottom showing the duplicate samples in batch.

Can anyone tell me what I’m doing wrong?

Custom transforms applied in custom dataset’s __getitem__():

def transforms(image, boxes, labels, split):

"""

Apply the transformations above.

:param image: image, an ndarray

:param boxes: boxes in boundary coordinates, a tensor of dimensions (n_objects, 4)

:param labels: labels of targets, a tensor of dimensions (n_objects)

:param split: one of 'TRAIN' or 'TEST', since different sets of transformations are applied

:return: transformed image, transformed boxes, transformed labels

"""

np.random.seed(666)

if split == 'TRAIN':

new_image, new_boxes = Rescale(image, boxes,

output_size = (400))

new_image, new_boxes, new_labels = RandomCrop(new_image, new_boxes, labels, output_size = (300))

new_image, new_boxes = RandomHorizontalFlip(new_image, new_boxes)

else:

new_image, new_boxes = Rescale(image, boxes,

output_size = (300))

new_image = image_to_tensor(new_image)

new_image = FT.normalize(new_image, mean, std)

return new_image, new_boxes, new_labels

Custom Dataset :

class Dataset(Dataset):

"""Dataset"""

def __init__(self, csv_file, root_dir, split):

"""

Arguments:

csv_file (string): Path to the CSV file with annotations. (image name, xmin, ymin, xmax, ymax, label)

root_dir (string): Directory containing all images with annotations.

split (string): Train or test, determines which transforms are applied during loading.

"""

self.df = pd.read_csv(csv_file)

self.root_dir = root_dir

self.split = split.upper()

assert self.split in {'TRAIN', 'TEST'}

def __getitem__(self, idx):

if torch.is_tensor(idx):

idx = idx.tolist()

image_path = os.path.join(self.root_dir, self.df.iloc[idx, 0])

image = io.imread(image_path)

boxes = self.df[self.df['img_name'] == self.df.iloc[idx, 0]][['xmin', 'ymin', 'xmax', 'ymax']].values # why are xmin/xmax switched during loading?

labels = self.df[self.df['img_name'] == self.df.iloc[idx, 0]]['label'].values

labels = torch.LongTensor(labels) # (n_objects)

image, boxes, labels = transforms(image, boxes, labels, split = self.split)

boxes = torch.FloatTensor(boxes).reshape(-1, 4) # (n_objects, [xmin, ymin, xmax, ymax])

target = {'boxes': boxes, 'labels': labels, 'image_id': self.df.iloc[idx, 0],

'area': (boxes[:, 3] - boxes[:, 1]) * (boxes[:, 2] - boxes[:, 0]),

'iscrowd': torch.zeros((len(labels,)), dtype=torch.int64)}

return image, target

def __len__(self):

return len(self.df['img_name'].unique())

def collate_fn(self, batch):

"""

Since each image may have a different number of objects, we need a collate function (to be passed to the DataLoader).

This describes how to combine these tensors of different sizes. Use lists.

:param batch: an iterable of N sets from __getitem__()

:return: a tensor of images, dictionary containing lists of varying-size tensors of polygons and targets

"""

# collate function to combine images with varying numbers of objects into batches

images = list()

boxes = list()

labels = list()

image_ids = list()

areas = list()

iscrowds = list()

for b in batch:

images.append(b[0])

boxes.append(b[1]['boxes'])

labels.append(b[1]['labels'])

image_ids.append(b[1]['image_id'])

areas.append(b[1]['area'])

iscrowds.append(b[1]['iscrowd'])

images = torch.stack(images, dim=0)

targets = {'boxes': boxes, 'labels': labels, 'image_ids': image_ids, 'areas': areas, 'iscrowds': iscrowds}

return images, targets # tensor (N, 3, 300, 300), dictionary containing 2 lists of N tensors each

Training dataset:

train_dataset = Dataset(csv_file = '/train/label.csv',

root_dir = '/train/images/',

split = 'TRAIN')

DataLoader using custom collate function

train_loader = torch.utils.data.DataLoader(train_dataset, batch_size = 16, shuffle = True,

collate_fn = train_dataset.collate_fn, num_workers = 0)

Sample batch images and targets from data loader:

images, targets = next(iter(train_loader))

Plotting images, targets from sample batch:

plt.figure(figsize = (16, 64))

for i in range(batch_size):

ax = plt.subplot(16, 4, 1 + i)

plot_detections(images[i], targets['boxes'][i], targets['labels'][i], ax = ax)

plt.axis('off')

plt.title(f"Sample {i + 1}")

plt.show()

Output: