This is a follow-up question to this thread. Basically, I shared weights between two layers, but when I visualize the weights, they are not exactly the same: one of them looks a bit off compared to the other (washed out, if you will).

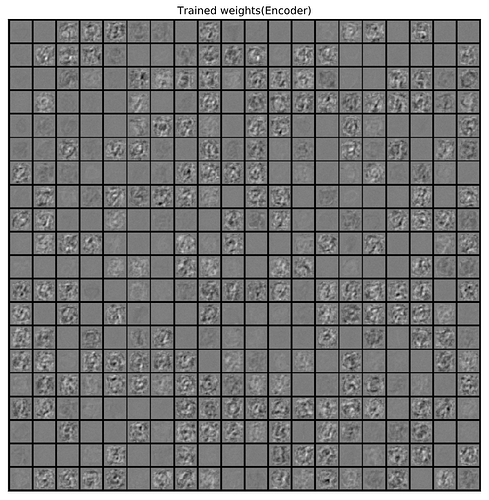

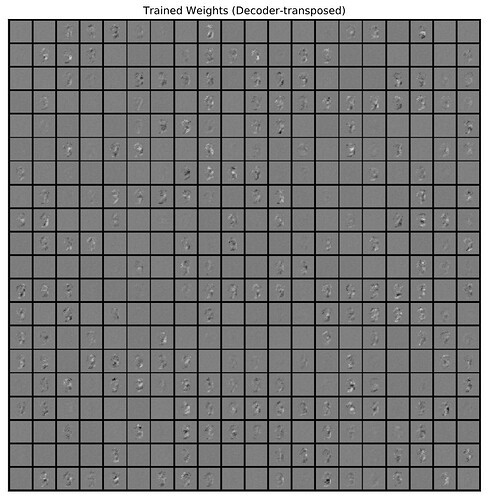

here is the samples I’m talking about :

As you can see, they are nearly identical, except that the decoder’s weights seem washed out (which suggests that more of its values are 0 or very close to 0 than in the encoder’s weights).

However, since the encoder and decoder share the same weights, why am I seeing this?

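(I trained this as a sparse autoencoder, by the way.) The weights are shared like this:

```python
# create a new parameter with the same shape as the encoder's weight
weights = nn.Parameter(torch.randn_like(self.encoder[0].weight))

# copy its values into the encoder's weight
self.encoder[0].weight.data = weights.clone()

# make the decoder's weight a transposed view of the encoder's weight data
self.decoder[0].weight.data = self.encoder[0].weight.data.t()
```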
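A small reproduction can help check whether the two layers still share memory at the time the weights are visualized. This is a hypothetical sketch, not the actual model: the `TiedAE` class, the layer sizes, the sigmoid activations, the single SGD step and the helpers `shares_storage`, `still_tied` and `train_step` are all assumptions. It shows that the assignment above leaves two separate `nn.Parameter` objects (each with its own gradient) whose data happens to point at the same storage. As long as that storage is shared, in-place optimizer updates land in the same memory. A conversion that copies parameters one by one (here `.double()`; as far as I can tell `.to(device)` and `.cuda()` take the same per-parameter path) gives each layer its own copy, after which the two weights can drift apart. This is one possible explanation, not a confirmed diagnosis.

```python
import torch
import torch.nn as nn


class TiedAE(nn.Module):
    """Hypothetical autoencoder reproducing the weight-sharing pattern from the post."""

    def __init__(self, n_in: int = 16, n_hidden: int = 4):
        super().__init__()
        self.encoder = nn.Sequential(nn.Linear(n_in, n_hidden), nn.Sigmoid())
        self.decoder = nn.Sequential(nn.Linear(n_hidden, n_in), nn.Sigmoid())
        # Same three lines as in the post; the local `weights` is never registered.
        weights = nn.Parameter(torch.randn_like(self.encoder[0].weight))
        self.encoder[0].weight.data = weights.clone()
        self.decoder[0].weight.data = self.encoder[0].weight.data.t()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.decoder(self.encoder(x))


def shares_storage(model: TiedAE) -> bool:
    # True if both weight tensors start at the same memory address.
    return model.encoder[0].weight.data_ptr() == model.decoder[0].weight.data_ptr()


def still_tied(model: TiedAE) -> bool:
    # True if the decoder weight is exactly the transpose of the encoder weight.
    return torch.equal(model.decoder[0].weight, model.encoder[0].weight.t())


def train_step(model: TiedAE, lr: float = 0.1) -> None:
    # One plain-SGD reconstruction step on random inputs in [0, 1).
    w = model.encoder[0].weight
    x = torch.rand(8, w.shape[1], dtype=w.dtype)
    opt = torch.optim.SGD(model.parameters(), lr=lr)
    loss = ((model(x) - x) ** 2).mean()
    opt.zero_grad()
    loss.backward()
    opt.step()


if __name__ == "__main__":
    torch.manual_seed(0)

    # Case 1: storage is shared, so the in-place SGD updates hit the same memory.
    a = TiedAE()
    train_step(a)
    print("no conversion:", shares_storage(a), still_tied(a))

    # Case 2: a dtype conversion copies each parameter separately.
    b = TiedAE().double()
    print("after .double():", shares_storage(b), still_tied(b))
    train_step(b)
    print("after one step:", still_tied(b))
```

Note that even in the first case the encoder and decoder weights remain two distinct parameters with separate gradients; they only stay identical because both in-place updates are written into the same underlying tensor.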

What is the reason behind this behavior?

I’d be very grateful to know.