I am a new learner and I am currently studying VGG16.

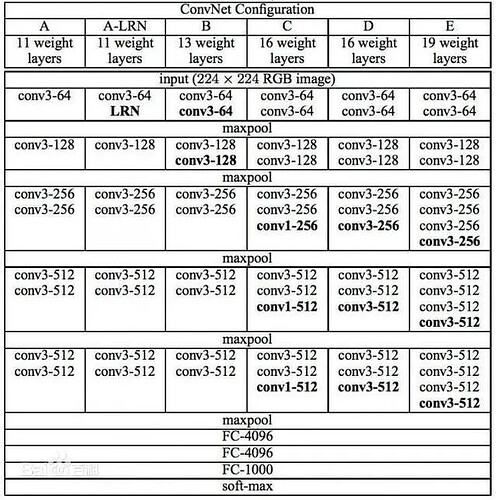

According to the image above, VGG only applies max pooling before FC-4096.

However, in its source code, an average pooling layer is called:

    def forward(self, x):
        x = self.features(x)
        x = self.avgpool(x)
        x = torch.flatten(x, 1)
        x = self.classifier(x)
        return x

So which step in the image corresponds to `self.avgpool(x)`?

I would appreciate any help with this question. Thank you!

The `nn.AdaptiveAvgPool2d((7, 7))` layer is a convenience addition that allows variable input shapes: it always outputs the pre-defined activation shape `[batch_size, nb_channels, 7, 7]`, which is the default activation shape when images of shape `[batch_size, 3, 224, 224]` are used.
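To make the shape argument concrete, here is a small pure-Python sketch that traces the spatial size through the feature extractor. It assumes the usual VGG16 layout (five 2x2 max-pool layers with stride 2, size-preserving 3x3 convolutions, 512 channels in the last block); the function names are made up for illustration and are not part of torchvision.

```python
# Sketch: trace the spatial size through VGG16's feature extractor.
# Assumptions: five MaxPool2d(kernel_size=2, stride=2) layers, and 3x3
# convolutions with padding 1 that keep the spatial size unchanged.
NUM_MAXPOOLS = 5
POOLED_SIZE = 7       # target size of nn.AdaptiveAvgPool2d((7, 7))
LAST_CHANNELS = 512   # channels produced by the last conv block


def feature_map_size(input_size: int) -> int:
    """Spatial size after the conv blocks for a square input image."""
    size = input_size
    for _ in range(NUM_MAXPOOLS):
        size //= 2  # each max pool halves the size (floor division)
    return size


def flattened_features(input_size: int, adaptive_pool: bool = True) -> int:
    """Number of values that reach the first fully connected layer."""
    size = POOLED_SIZE if adaptive_pool else feature_map_size(input_size)
    return LAST_CHANNELS * size * size


for side in (224, 256, 320):
    print(
        side,
        feature_map_size(side),
        flattened_features(side, adaptive_pool=False),
        flattened_features(side),
    )
```

For a 224x224 input the feature map is already 7x7, so both counts are 512 * 7 * 7 = 25088, which is what the first fully connected layer expects. For a 256x256 input the feature map is 8x8, so without the adaptive pooling layer the flattened size would be 32768 and would no longer match.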

So, as I understand it, the original VGG (Simonyan & Zisserman, 2014) does not have this `AdaptiveAvgPool2d` operation, and it was introduced later to reduce overfitting as well as to add flexibility?

No, it’s unrelated to overfitting, and I’m unsure where this impression comes from. If the original input shape is used, the layer is a no-op, since the activation already has the desired output shape. However, if the input shape (and thus the intermediate activation shapes) changes, you would previously have received a RuntimeError, while the adaptive pooling layer allows you to execute the model.
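Why the layer is a no-op for the default shape can be seen from how adaptive average pooling picks its windows. The sketch below is a one-dimensional, pure-Python illustration, assuming the window rule `start = floor(i * in / out)`, `end = ceil((i + 1) * in / out)`; the helper names are hypothetical and this is not the actual PyTorch implementation.

```python
# Sketch of 1D adaptive average pooling (illustration only).
# Assumption: output position i averages the input indices in
# [floor(i * in / out), ceil((i + 1) * in / out)).


def adaptive_pool_windows(input_size: int, output_size: int) -> list:
    """Return the (start, end) input range used for each output position."""
    windows = []
    for i in range(output_size):
        start = (i * input_size) // output_size
        # integer ceiling of (i + 1) * input_size / output_size
        end = ((i + 1) * input_size + output_size - 1) // output_size
        windows.append((start, end))
    return windows


def adaptive_avg_pool_1d(values: list, output_size: int) -> list:
    """Average the input values over each adaptive window."""
    return [
        sum(values[start:end]) / (end - start)
        for start, end in adaptive_pool_windows(len(values), output_size)
    ]


# 7 -> 7: every window covers exactly one element, so nothing changes.
print(adaptive_pool_windows(7, 7))
print(adaptive_avg_pool_1d([1, 2, 3, 4, 5, 6, 7], 7))

# 8 -> 7: windows of two elements that overlap by one.
print(adaptive_pool_windows(8, 7))
print(adaptive_avg_pool_1d([0, 1, 2, 3, 4, 5, 6, 7], 7))
```

With 7 inputs and 7 outputs each window is a single element, so the values pass through unchanged. With 8 inputs the windows overlap and the output is squeezed back to 7 positions, which is what keeps the classifier's input size fixed.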

You can certainly remove the layer if you want to stick to the original implementation, but you would then receive errors for invalid input shapes.