I ran into a strange problem: the same code runs much slower on a 3080 than on a 2080.

Environment on the 3080:

CUDA 11.1, PyTorch 1.8, learn2learn 0.1.5

Environment on the 2080:

CUDA 10.2, PyTorch 1.1, learn2learn 0.1.3

My code can be summarized as:

```python
optimizer.zero_grad()
learner = model.clone()
loss1 = torch.nn.functional.mse_loss(learner(x), y)
learner.adapt(loss1)
loss2 = torch.nn.functional.mse_loss(learner(x), y)
loss2.backward()
optimizer.step()
```

I found that most of the time is spent in the loss2.backward() step. Can anyone provide hints about this problem? Maybe PyTorch 1.8 changed something related to clone()?

Some snapshots are available on this site.

Your 3080 might see a performance regression due to an older cudnn version (you would be using 8.0.5) and/or due to this issue.

While we are still working on fixing both issues (first the static linking then updating cudnn, if no major regressions are found), you could build from source using the latest cudnn (and CUDA) version and compare the performance.

Thanks for your quick reply!

The installed PyTorch build is py3.6_cuda11.1_cudnn8.0.5_0. Should I upgrade the cuDNN version?

You could update cudnn, but would need to build PyTorch from source as described here.
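Before and after rebuilding, it may help to confirm which CUDA and cuDNN versions the running PyTorch build actually reports. A minimal sketch; `report_versions` is a hypothetical helper name, and the printed values depend on the local install:

```python
import torch


def report_versions():
    """Collect the versions that the running PyTorch build reports."""
    info = {
        "torch": torch.__version__,
        # CUDA version PyTorch was built with (None for CPU-only builds)
        "cuda": torch.version.cuda,
        # cuDNN version as an integer (e.g. 8005 for 8.0.5), or None if unavailable
        "cudnn": torch.backends.cudnn.version(),
        "gpu": None,
    }
    if torch.cuda.is_available():
        info["gpu"] = torch.cuda.get_device_name(0)
    return info


print(report_versions())
```

Running this on both machines gives a like-for-like view of the two setups being compared.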

Hi @ptrblck, I built PyTorch from source using CUDA 11.1 and cuDNN 8.1.1, but the problem still exists.

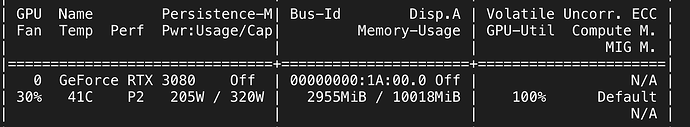

I find that when the code is executed on 3080, GPU-Util is 100%. However, it is 20% when the code is executed on 2080.

The snapshot (on 3080):

The bug is located in the following code of this function. However, I cannot dig it out…

```python
# Then, recurse for each submodule
for module_key in module._modules:
    module._modules[module_key] = update_module(
        module._modules[module_key],
        updates=None,
        memo=memo,
    )
```

I’m not sure I understand the last issue. Are you still seeing a slowdown on the 3080 compared to the 2080?

If so, could you post a code snippet, which you’ve used to profile the workload (and which we could execute to reproduce it using random inputs)?

Do you think the method from your last post is causing a high GPU utilization?

Yes, it’s still slower on the 3080.

Sorry, the project relies on a complex environment, so it’s not easy to post online.

Yes. At first I thought it was an iteration problem, but I found that the most time-consuming step was to update the parameters (Line 112- Line 122).

Hi @ptrblck, here is cleaner code to reproduce the problem. I found that if I delete the code related to self.register_buffer, it works fine.

```python
import torch
import torch.nn as nn
from tqdm import tqdm
import learn2learn as l2l
import torchvision.models.resnet as resnet


def set_dropout_eval(m):
    classname = m.__class__.__name__
    if classname.find('Dropout') != -1:
        m.eval()


def freeze_dropout(model):
    model.apply(set_dropout_eval)
    return model


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.model = resnet.resnet50(pretrained=True)
        init_pose = torch.randn(1, 3, 256, 256)
        self.register_buffer('init_pose', init_pose)
        self.register_buffer('init_pose2', init_pose)
        self.register_buffer('init_pose3', init_pose)

    def forward(self, x):
        batch_size = x.shape[0]
        init_pose = self.init_pose.expand(batch_size, -1, -1, -1)
        init_pose2 = self.init_pose2.expand(batch_size, -1, -1, -1)
        init_pose3 = self.init_pose3.expand(batch_size, -1, -1, -1)
        x = x + init_pose
        x = x + init_pose2
        x = x + init_pose3
        out = self.model(x)
        return out


device = torch.device('cuda')
model = Model()
model = l2l.algorithms.MAML(model, lr=0.001, first_order=False).to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=0.00001)
X = torch.randn(16, 3, 256, 256).to(device)
Y = torch.randn(16, 1000).to(device)
criterion = nn.MSELoss().to(device)

# training
for i in tqdm(range(10000), total=10000):
    model.train()
    model = freeze_dropout(model)
    X = torch.randn(16, 3, 256, 256).to(device)
    Y = torch.randn(16, 1000).to(device)
    learner = model.clone()
    optimizer.zero_grad()

    # inner update
    loss1 = criterion(learner(X), Y)
    learner.adapt(loss1)

    # outer update
    loss2 = criterion(learner(X), Y)
    loss2.backward()
    optimizer.step()
```

I’m still unsure, how you are profiling the code, but note that CUDA operations are executed asynchronously, so you would have to synchronize the code via torch.cuda.synchronize() before starting and stopping the timers.
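The synchronization pattern described above can be sketched as a small timing helper. This is a sketch, not the profiling code used in this thread: `timed` is a hypothetical helper, and `sync` is an assumed parameter that would be `torch.cuda.synchronize` when timing GPU code (it defaults to a no-op so the helper also runs without a GPU).

```python
import time


def timed(fn, sync=lambda: None, warmup=3, iters=10):
    """Return the average wall-clock seconds per call of fn.

    sync is called before the timer starts and before it stops, so that
    asynchronously launched work (e.g. CUDA kernels) is included in the
    measurement. Pass torch.cuda.synchronize when timing GPU code.
    """
    for _ in range(warmup):  # warm-up runs are not timed
        fn()
    sync()  # wait for pending work before starting the timer
    start = time.perf_counter()
    for _ in range(iters):
        fn()
    sync()  # wait for the timed work to finish before stopping the timer
    return (time.perf_counter() - start) / iters


# CPU-only demonstration; with PyTorch on a GPU this would look like
# timed(step, sync=torch.cuda.synchronize) for some training step function.
print(f"{timed(lambda: sum(range(10_000))):.6f} s per call")
```

Without the two `sync()` calls, the timer would mostly measure how long it takes to enqueue the kernels, and the cost would show up at whichever later call happens to block.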

To profile certain operations, you could use the torch.utils.benchmark utilities or have a look at this post to profile the code with Nsight Systems to isolate the bottlenecks.
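A minimal sketch of the `torch.utils.benchmark` route, assuming a small stand-in model rather than the MAML setup from this thread (`step`, the layer sizes, and the batch size are illustrative choices). `Timer` performs warm-up runs and synchronizes CUDA work when needed:

```python
import torch
import torch.nn as nn
import torch.utils.benchmark as benchmark

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Linear(128, 10).to(device)  # stand-in for the real model
criterion = nn.MSELoss()
x = torch.randn(16, 128, device=device)
y = torch.randn(16, 10, device=device)


def step():
    """One forward and backward pass on the stand-in model."""
    model.zero_grad()
    loss = criterion(model(x), y)
    loss.backward()


timer = benchmark.Timer(stmt="step()", globals={"step": step})
measurement = timer.timeit(20)  # execute the statement 20 times
print(measurement)
```

Timing the forward pass, the `adapt` call, and the backward pass as separate statements on both GPUs would show which operation accounts for the difference.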

Thanks! Here is the revised code.

```python
import torch
import torch.nn as nn
from tqdm import tqdm
import learn2learn as l2l
import torchvision.models.resnet as resnet


def set_dropout_eval(m):
    classname = m.__class__.__name__
    if classname.find('Dropout') != -1:
        m.eval()


def freeze_dropout(model):
    model.apply(set_dropout_eval)
    return model


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.model = resnet.resnet50(pretrained=True)
        # Keep this a local tensor: assigning self.init_pose first would make
        # register_buffer('init_pose', ...) fail because the name already exists.
        init_pose = torch.randn(1, 3, 256, 256)
        self.register_buffer('init_pose', init_pose)
        self.register_buffer('init_pose2', init_pose)
        self.register_buffer('init_pose3', init_pose)

    def forward(self, x):
        batch_size = x.shape[0]
        init_pose = self.init_pose.expand(batch_size, -1, -1, -1)
        init_pose2 = self.init_pose2.expand(batch_size, -1, -1, -1)
        init_pose3 = self.init_pose3.expand(batch_size, -1, -1, -1)
        x = x + init_pose
        x = x + init_pose2
        x = x + init_pose3
        out = self.model(x)
        return out


device = torch.device('cuda')
# model = Model()
model = resnet.resnet50(pretrained=True)
model = l2l.algorithms.MAML(model, lr=0.001, first_order=False).to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=0.00001)
X = torch.randn(16, 3, 256, 256).to(device)
Y = torch.randn(16, 1000).to(device)
criterion = nn.MSELoss().to(device)

warmup_iters = 10
nb_iters = 20

# training
torch.cuda.synchronize()
for i in tqdm(range(200), total=200):
    model.train()
    model = freeze_dropout(model)
    X = torch.randn(16, 3, 256, 256).to(device)
    Y = torch.randn(16, 1000).to(device)
    learner = model.clone()
    optimizer.zero_grad()

    # start profiling after 10 warmup iterations
    if i == warmup_iters: torch.cuda.cudart().cudaProfilerStart()

    # push range for current iteration
    if i >= warmup_iters: torch.cuda.nvtx.range_push("iteration{}".format(i))

    # push range for forward
    if i >= warmup_iters: torch.cuda.nvtx.range_push("forward")
    # inner update
    loss1 = criterion(learner(X), Y)
    learner.adapt(loss1)
    # outer update
    loss2 = criterion(learner(X), Y)
    if i >= warmup_iters: torch.cuda.nvtx.range_pop()

    if i >= warmup_iters: torch.cuda.nvtx.range_push("backward")
    loss2.backward()
    if i >= warmup_iters: torch.cuda.nvtx.range_pop()

    if i >= warmup_iters: torch.cuda.nvtx.range_push("opt.step()")
    optimizer.step()
    if i >= warmup_iters: torch.cuda.nvtx.range_pop()

    # pop the iteration range so every push has a matching pop
    if i >= warmup_iters: torch.cuda.nvtx.range_pop()

# wait for all queued GPU work before stopping the profiler
torch.cuda.synchronize()
torch.cuda.cudart().cudaProfilerStop()
```

@ptrblck, would you help me to figure it out?

Sure! What is the new code snippet showing for each operation on both setups?