Is `b.to(device)` the only way to move `b` to GPU 1?


If you have 4 graphics cards, you can choose which one to use.

By default it will be `cuda:0`.
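A short sketch of the options, assuming PyTorch is installed. `b.to(device)` is one way, but a tensor can also be created directly on the chosen GPU with the `device=` argument. `pick_device` is a hypothetical helper that falls back to the CPU when the requested GPU does not exist, so the sketch also runs on machines with fewer GPUs.

```python
import torch


def pick_device(index: int) -> torch.device:
    """Hypothetical helper: return cuda:<index> if that GPU exists, else the CPU."""
    if torch.cuda.is_available() and index < torch.cuda.device_count():
        return torch.device(f"cuda:{index}")
    return torch.device("cpu")


device = pick_device(1)

# Option 1: move an existing tensor. .to() is not in-place, so reassign the result.
b = torch.ones(3)
b = b.to(device)

# Option 2: allocate directly on the target device, skipping the CPU tensor.
c = torch.zeros(3, device=device)

print(b.device, c.device)
```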

I have two, so is there a way to change the default GPU?

You can try this:

```python
import torch

torch.cuda.set_device(1)  # make GPU 1 the default (current) CUDA device
```

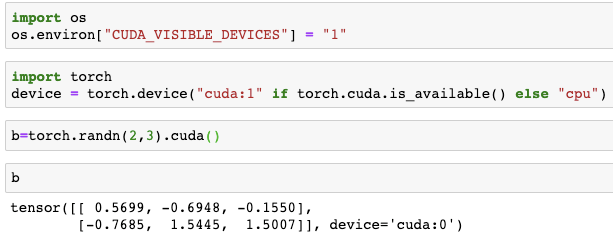

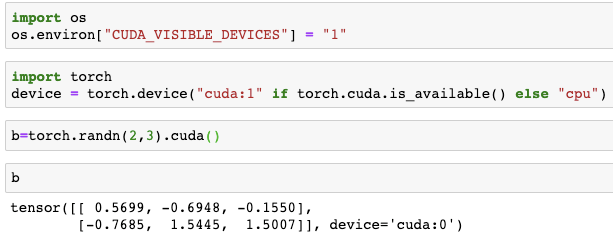
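A sketch of what `set_device` changes, assuming PyTorch and at least two GPUs (it skips itself otherwise). After the call, `.cuda()` with no index and `device="cuda"` without an index both resolve to GPU 1 instead of GPU 0.

```python
import torch

if torch.cuda.device_count() >= 2:
    torch.cuda.set_device(1)          # GPU 1 becomes the current device for this process
    x = torch.ones(3).cuda()          # .cuda() with no index uses the current device
    y = torch.ones(3, device="cuda")  # "cuda" without an index also means the current device
    print(x.device, y.device, torch.cuda.current_device())
else:
    print("Fewer than two GPUs visible; skipping set_device(1).")
```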

It's easier to set the environment variable `CUDA_VISIBLE_DEVICES=1` than to call `set_device` in code.

If you need that, it means your program only uses a single GPU.
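`CUDA_VISIBLE_DEVICES` is read from the process environment, so it has to be in place before the program starts using CUDA. In a bash-like shell that is `CUDA_VISIBLE_DEVICES=1 python your_script.py`; in Windows `cmd` it is `set CUDA_VISIBLE_DEVICES=1` followed by the command. The sketch below does the same from Python using only the standard library: it launches a child interpreter with the variable set. The child here is a stand-in that just echoes the variable; in practice it would be your own script.

```python
import os
import subprocess
import sys

# Copy the current environment and expose only physical GPU 1 to the child process.
env = dict(os.environ, CUDA_VISIBLE_DEVICES="1")

# Stand-in for a real training script: the child just reports what it sees.
child_code = "import os; print(os.environ.get('CUDA_VISIBLE_DEVICES'))"
result = subprocess.run(
    [sys.executable, "-c", child_code],
    env=env,
    capture_output=True,
    text=True,
    check=True,
)
print(result.stdout.strip())  # prints: 1
```

Setting `os.environ["CUDA_VISIBLE_DEVICES"]` inside the script should also work as long as it happens before CUDA is initialized; doing it before `import torch` is the safest place.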

If you use several GPUs, you can call `.cuda(idx)`, which allocates the tensor on the GPU you choose.
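A sketch of `.cuda(idx)` with several GPUs, assuming PyTorch is installed. It places one small tensor on each GPU that PyTorch can see, and does nothing on a machine without CUDA.

```python
import torch

n = torch.cuda.device_count()  # 0 on a machine without CUDA
tensors = []
for idx in range(n):
    # .cuda(idx) copies the tensor to GPU number idx, as numbered inside this process.
    tensors.append(torch.arange(4).cuda(idx))

for t in tensors:
    print(t.device)  # one line per visible GPU: cuda:0, cuda:1, ...
```

Tensors that take part in the same operation must live on the same device, so move one of them first, e.g. `tensors[1].to(tensors[0].device)`.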

`CUDA_VISIBLE_DEVICES=1` exposes only one GPU to the program. However, PyTorch numbers the visible GPUs starting from 0, so physical GPU 1 on the system appears as `cuda:0` inside the program.
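The renumbering can be modelled in a few lines of plain Python. This is only a simplified sketch, assuming `CUDA_VISIBLE_DEVICES` holds a comma-separated list of integer indices; the real CUDA driver also accepts GPU UUIDs and has its own rules for invalid entries, which are not modelled here. `visible_to_physical` is a hypothetical helper.

```python
from typing import Dict, Optional


def visible_to_physical(env_value: Optional[str], num_physical: int) -> Dict[int, int]:
    """Map in-program CUDA indices to physical GPU indices (simplified model)."""
    if env_value is None:
        # Variable not set: every GPU is visible and keeps its own index.
        physical = list(range(num_physical))
    else:
        # Listed GPUs only, in the listed order; an empty value hides all GPUs.
        physical = [int(tok) for tok in env_value.split(",") if tok.strip()]
    # The program numbers the visible GPUs 0, 1, 2, ... in that order.
    return dict(enumerate(physical))


print(visible_to_physical("1", num_physical=2))    # {0: 1}
print(visible_to_physical("1,0", num_physical=2))  # {0: 1, 1: 0}
print(visible_to_physical(None, num_physical=2))   # {0: 0, 1: 1}
```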

Your advice saved me a lot of time and energy. Thanks, man! I used the command `set CUDA_VISIBLE_DEVICES=1` (Windows `cmd` syntax) before running the code to specify the GPU.
