I have a test exercise of Alexnet on cifar10 with the standard torch Dataloader and dataset. It seemed to optimize at 12-14 sec per epoch by loading the dataset into a RAM disk, pin_memory=True, and num_workers=8. nvidia-smi showed very little GPU downtime and was pretty much pegged at to 90-100% utilization while running.

That was Python 3.11 with the NVIDIA 535 drivers using an rtx 3090ti with OS=22.04 Ubuntu, Pytorch 1.12.1.post201 and Torchvision 0.13.0a0+8069656.

Under Python 3.12 and NVIDIA 560 drivers, Ptorch 2.6.0+cu124 and Torchvision 0.21.0+cu124, the same hardware and OS, I ran the same program and it is taking 49 sec per epoch. That’s a 3x increase! nvidia-smi shows the GPU mostly idling.

I am not sure what changed in Python between 3.11 and 3.12, the NVIDIA driver or whatever else, but I cannot seem to get back to 12-14 sec per epoch.

I lowered the num_workers from 8 to 2 and got down to 27 sec per epoch. But that is still a 2x increase from 14 sec. The GPU is still mostly idling.

I do notice a difference in the loading of the data.

For the first call (upon entering the loop) of

“for i, (images, labels) in enumerate(train_loader):”

For num_workers=8 takes 18 seconds, 0.02 sec for the rest.

For num_workers=2 takes 5 seconds, 0.01 for the rest.

Same for the validation loop.

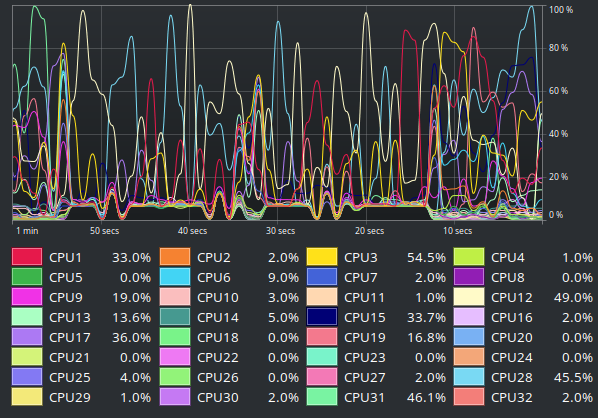

The only other thing I can think of is that the CPU utilization shows a large amount of time with most of the 32 threads being used at 5-7% for 18 seconds at a time corresponding approximately to the first call in the loops. That and the cache memory goes up, and then goes back down after the epoch is trained.

Might anyone know what changed to cause such a drastic performance reducton? And, perhaps, how to get that performance back?