Hi, hope you are well.

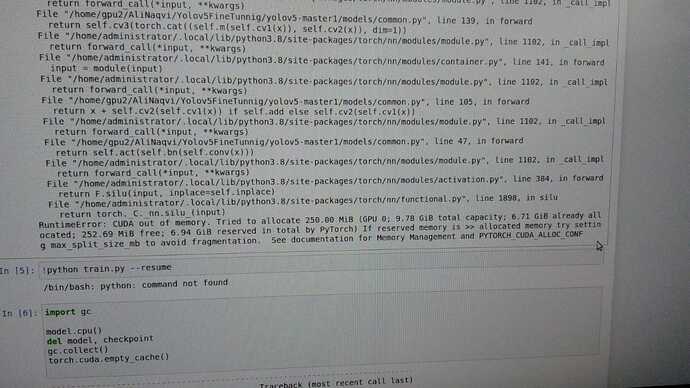

I’m also getting a similar kind of error.

First, I fine-tuned YOLOv5 on custom data.

After obtaining the best weights, I tried to fine-tune the model further from those weights, but with a different image size.

But now I can’t run the model, even with a small batch size.

I also can’t run any other model, such as a detection transformer, on this machine.

I tried to empty the cache as suggested, but all in vain.
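For reference, here is a minimal sketch of what emptying the cache usually looks like in PyTorch. It assumes PyTorch is the framework in use (YOLOv5 is built on it); the helper name `free_cuda_cache` is hypothetical and only for illustration, and the function returns `None` when PyTorch or a CUDA device is not available.

```python
import gc


def free_cuda_cache():
    """Release cached GPU memory held by this process.

    Returns (allocated_mib, reserved_mib) after cleanup,
    or None if PyTorch or CUDA is unavailable.
    """
    try:
        import torch
    except ImportError:
        return None  # PyTorch is not installed

    if not torch.cuda.is_available():
        return None  # no usable CUDA device

    gc.collect()              # drop unreachable Python objects that still hold tensors
    torch.cuda.empty_cache()  # hand cached, unused blocks back to the driver

    mib = 1024 ** 2
    return (
        torch.cuda.memory_allocated() / mib,  # memory occupied by live tensors
        torch.cuda.memory_reserved() / mib,   # memory held by the caching allocator
    )


print(free_cuda_cache())
```

Note that this only releases memory cached by the current Python process. Memory held by another process (for example, an earlier training run that never exited) is not affected and would still show up in `nvidia-smi`.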

I also tried running the code on a different machine with the same GPU (RTX 3080, 10 GB VRAM).

Any idea how I can fix this error?