Hi, I recently built a project using a DDPG agent implemented in PyTorch.

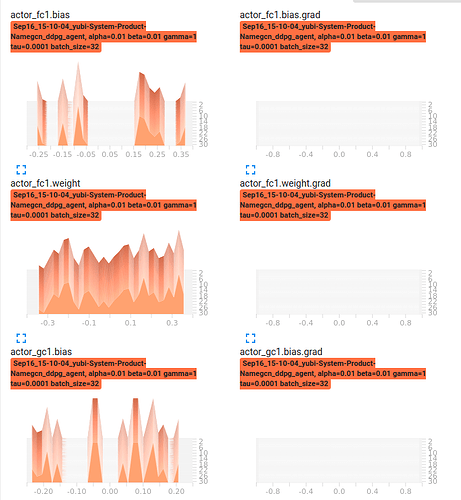

When I sample a batch of (state, action, reward, next_state) tuples from the buffer and try to update the weights of the 4 networks (actor, critic, and their two target copies), the loss.backward() call does not seem to produce any gradients at all. No data shows up on the TensorBoard page.
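To narrow this down independently of TensorBoard, the gradients can be inspected directly after the backward pass. Note that backward() only fills each parameter's `.grad` field; the weights themselves change only on `optimizer.step()`. The following is a minimal sketch, not part of my project: `grad_norms` is a hypothetical helper and `net` is a stand-in for `self.actor` or `self.critic`.

```python
import torch as T
import torch.nn as nn
import torch.nn.functional as F


def grad_norms(module):
    """Map each parameter name to its gradient L2 norm, or None if no gradient is stored."""
    return {
        name: (None if p.grad is None else p.grad.norm().item())
        for name, p in module.named_parameters()
    }


# Hypothetical stand-in network; substitute self.actor or self.critic here.
T.manual_seed(0)
net = nn.Linear(4, 1)
x = T.randn(8, 4)
y = T.randn(8, 1)

before = grad_norms(net)   # every entry is None: backward() has not run yet
loss = F.mse_loss(net(x), y)
loss.backward()
after = grad_norms(net)    # entries are numbers once gradients have been stored

print(before)
print(after)
```

If a parameter still reports None after backward(), the loss is not connected to it in the autograd graph. If the norms are numbers but nothing appears in TensorBoard, the problem is more likely in the logging than in backward().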

Here is the code for “update based on the buffer” part:

    def learn(self, adj):
        if self.buffer.mem_cntr < self.batch_size:
            return

        state_vectors, action_vectors, rewards, next_state_vectors = \
            self.buffer.sample_buffer(self.batch_size)

        adj = T.tensor(adj, dtype=T.float32).to(self.actor.device)

        for i in range(self.batch_size):
            state_vector = T.tensor(state_vectors[i], dtype=T.float32).unsqueeze(0).to(self.actor.device)
            next_state_vector = T.tensor(next_state_vectors[i], dtype=T.float32).unsqueeze(0).to(self.actor.device)
            action_vector = T.tensor(action_vectors[i], dtype=T.float32).unsqueeze(0).to(self.actor.device)
            reward = T.tensor(rewards[i], dtype=T.float32).to(self.actor.device)

            next_target_action_vector = self.target_actor.forward(next_state_vector, adj)
            next_target_q = self.target_critic(next_state_vector, next_target_action_vector, adj)
            q = self.critic.forward(state_vector, action_vector, adj)
            target_q = reward + self.gamma * next_target_q

            self.critic.optimizer.zero_grad()
            critic_loss = F.mse_loss(q, target_q)
            critic_loss.backward()
            self.critic.optimizer.step()

            self.actor.optimizer.zero_grad()
            actor_loss = -self.critic.forward(state_vector,
                                              self.actor.forward(state_vector, adj),
                                              adj) / self.batch_size
            actor_loss.backward()
            self.actor.optimizer.step()

        self.soft_update()
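For comparison, a DDPG update is more commonly written as one batched step, with the target value computed under `T.no_grad()` so that the critic loss does not backpropagate into the target networks. The sketch below is only an illustration under assumptions: the networks are small stand-in MLPs, the `adj` argument is left out, and `ddpg_update`, `Critic`, `tau`, and the layer sizes are hypothetical names and values, not taken from my project.

```python
import copy
import torch as T
import torch.nn as nn
import torch.nn.functional as F


class Critic(nn.Module):
    """Hypothetical stand-in critic (no adjacency input)."""
    def __init__(self, s_dim, a_dim):
        super().__init__()
        self.net = nn.Sequential(nn.Linear(s_dim + a_dim, 32), nn.ReLU(), nn.Linear(32, 1))

    def forward(self, s, a):
        return self.net(T.cat([s, a], dim=1))


def ddpg_update(actor, critic, target_actor, target_critic,
                actor_opt, critic_opt, batch, gamma=0.99, tau=0.005):
    s, a, r, s2 = batch  # shapes: (B, s_dim), (B, a_dim), (B, 1), (B, s_dim)

    # Build the TD target without tracking gradients through the target networks.
    with T.no_grad():
        target_q = r + gamma * target_critic(s2, target_actor(s2))

    # Critic step: regress Q(s, a) onto the fixed target.
    critic_loss = F.mse_loss(critic(s, a), target_q)
    critic_opt.zero_grad()
    critic_loss.backward()
    critic_opt.step()

    # Actor step: maximise Q(s, actor(s)), averaged over the batch.
    actor_loss = -critic(s, actor(s)).mean()
    actor_opt.zero_grad()
    actor_loss.backward()
    actor_opt.step()

    # Soft update of both target networks (Polyak averaging).
    with T.no_grad():
        for net, target in ((actor, target_actor), (critic, target_critic)):
            for p, tp in zip(net.parameters(), target.parameters()):
                tp.mul_(1 - tau).add_(tau * p)

    return critic_loss.item(), actor_loss.item()


T.manual_seed(0)
s_dim, a_dim, B = 3, 2, 16
actor = nn.Sequential(nn.Linear(s_dim, 32), nn.ReLU(), nn.Linear(32, a_dim), nn.Tanh())
critic = Critic(s_dim, a_dim)
target_actor, target_critic = copy.deepcopy(actor), copy.deepcopy(critic)
actor_opt = T.optim.Adam(actor.parameters(), lr=1e-3)
critic_opt = T.optim.Adam(critic.parameters(), lr=1e-3)

# Random tensors standing in for one sampled replay batch.
batch = (T.randn(B, s_dim), T.rand(B, a_dim) * 2 - 1, T.randn(B, 1), T.randn(B, s_dim))

actor_before = [p.detach().clone() for p in actor.parameters()]
losses = ddpg_update(actor, critic, target_actor, target_critic,
                     actor_opt, critic_opt, batch)
actor_changed = any(not T.equal(b, p) for b, p in zip(actor_before, actor.parameters()))
print(losses, actor_changed)
```

Here the replay batch is passed as whole tensors instead of being looped over sample by sample, so each call performs one optimizer step per network. Whether this applies directly depends on whether the actor and critic accept batched inputs together with `adj`.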

How should I modify the code so that the actor network trains correctly?