I have access to multiple GPUs and want to use them. While reading the tutorial, I noticed that it does not explain why the device id is needed. That seemed odd to me, because I thought the whole point was that PyTorch handles this for you and uses the GPUs without you having to worry about it. So my question is: why does the model need to be given a device if one is using `DataParallel`, and how do I use it properly so that it actually uses all the available GPUs?

What confuses me is the following line in the tutorial:

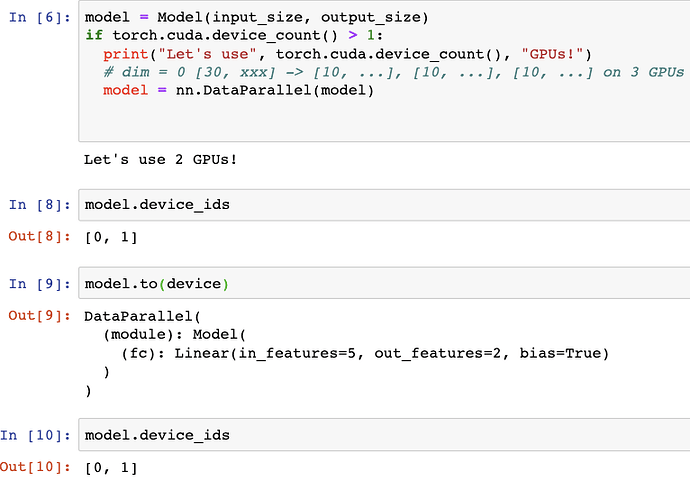

    model = Model(input_size, output_size)
    if torch.cuda.device_count() > 1:
        print("Let's use", torch.cuda.device_count(), "GPUs!")
        # dim = 0 [30, xxx] -> [10, ...], [10, ...], [10, ...] on 3 GPUs
        model = nn.DataParallel(model)

    model.to(device)  # <- DO WE REALLY NEED THIS?
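
For context, here is a minimal self-contained sketch of the setup I am asking about. The `Model` class, the sizes, and the random batch are hypothetical stand-ins, not the tutorial's exact code. As far as I can tell from the `nn.DataParallel` documentation, the wrapper expects the module's parameters to already be on `device_ids[0]` before the forward pass, which may be the reason for the `.to(device)` call, but I would like confirmation.

```python
import torch
import torch.nn as nn


# Hypothetical stand-in for the tutorial's Model: a single linear layer.
class Model(nn.Module):
    def __init__(self, input_size, output_size):
        super().__init__()
        self.fc = nn.Linear(input_size, output_size)

    def forward(self, x):
        return self.fc(x)


# Assumed sizes, chosen only for illustration.
input_size, output_size, batch_size = 5, 2, 30

# Falls back to the CPU when no GPU is visible.
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model = Model(input_size, output_size)
if torch.cuda.device_count() > 1:
    # My understanding: with several GPUs, wrapping sets up the
    # scatter/gather logic but leaves the parameters where they are.
    model = nn.DataParallel(model)

# Move the parameters to the primary device (cuda:0 when available).
model.to(device)

# The input goes to the same device; DataParallel splits it along dim 0.
inputs = torch.randn(batch_size, input_size).to(device)
outputs = model(inputs)
print(outputs.shape)
```

On a machine with no GPU, or with only one, the `DataParallel` branch is skipped and the same script still runs, so the sketch does not by itself show what breaks when `.to(device)` is omitted.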