Even for a single input channel(like a grayscale image), we try to have many output channels before feeding it into the fully connected layer.

I can’t understand why this is done?

The number of output channels defines the number of kernels, which convolve over your input volume.

So even if your input image is a grayscale image (1 channel), a lot of different kernels can learn different things.

Have a look at Stanford’s CS231n course.

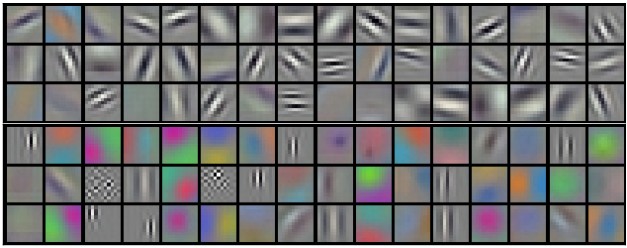

Here is an example of 96 learned kernels for a color image by Krizhevsky et al.:

As you can see, the kernels learn a lot of different angles for edges etc.

Higher layers learn therefore more “complex” features based on the activations from previous layers.

I hope that clears thing up a bit.

2 Likes