PyTorch 1.0

relevant source code: https://github.com/heronsystems/adeptRL/blob/master/adept/environments/managers/subproc_env_manager.py#L148-L193

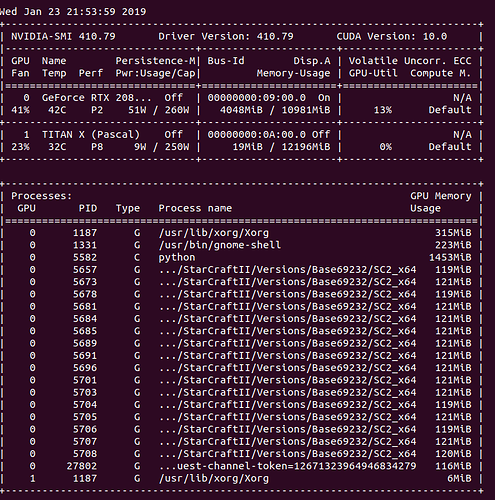

Even if I set `os.environ["CUDA_VISIBLE_DEVICES"] = ""`, so that `torch.cuda.is_available()` returns `False`, memory is still being allocated on the GPU. Could this have something to do with shared memory?
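For reference, here is a minimal sketch of the check I am describing, not the actual adeptRL code. It assumes the variable has to be in the environment before CUDA is initialized in the process, so it starts a fresh child interpreter with `CUDA_VISIBLE_DEVICES` already set to an empty string. The names `CHILD_CODE` and `run_child_without_gpus` are hypothetical, and the `torch` import is optional so the snippet also runs where PyTorch is not installed.

```python
import os
import subprocess
import sys

# Code executed by the child interpreter. It reports the value it sees
# and, if torch is importable, whether CUDA is reported as available.
CHILD_CODE = """
import os
print(repr(os.environ.get("CUDA_VISIBLE_DEVICES")))
try:
    import torch
    print(torch.cuda.is_available())
except ImportError:
    print("torch not installed")
"""


def run_child_without_gpus():
    """Start a child process with every GPU hidden and return its output lines."""
    env = dict(os.environ)
    # Set before the child starts, so it is in place before any CUDA initialization.
    env["CUDA_VISIBLE_DEVICES"] = ""
    result = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],
        env=env,
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,
    )
    return result.stdout.splitlines()


if __name__ == "__main__":
    for line in run_child_without_gpus():
        print(line)
```

If the child reports an empty string and `False` while `nvidia-smi` still shows memory for that PID, the allocation presumably comes from somewhere other than that child, for example the parent process or tensors handed over from it.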