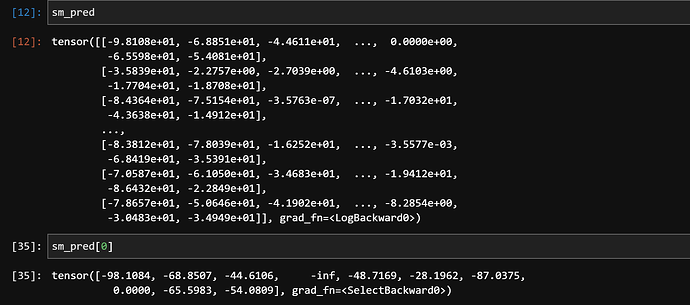

In the image above, the top cell shows my softmax predictions, but when I index into it (to get the preds for the first input), I don't get exactly the same numbers (as shown in the cell below).

I want to use integer array indexing to calculate the negative log likelihood, but since the preds are not the same, the NLL comes out as `inf`.

The grad_fn changes since indexing is a differentiable operation.

You are getting the same values, but the printing uses another format (e.g. -98.1084 instead of -9.8108e+01) since the range of the slice is smaller and wouldn’t benefit from the “scientific” format.

That’s not true: the -inf value is also in the original sm_pred tensor, it is just not displayed.
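A quick way to check both points is to compare the slice against the known values and to test for infinities directly rather than relying on the printed output. This is only a minimal sketch: the tensor below is a made-up stand-in for `sm_pred`, and its values are assumptions chosen for illustration.

```python
import torch

# Hypothetical stand-in for sm_pred: a wide value range plus one -inf entry
sm_pred = torch.tensor([[-98.1084, -0.5, -1234.5],
                        [-1e-5, -4.2e4, float("-inf")]])

# Indexing returns exactly the values stored in the first row
first_row = sm_pred[0]
same_values = torch.equal(first_row, torch.tensor([-98.1084, -0.5, -1234.5]))

# Check for infinities explicitly instead of reading them off the printout
has_inf = torch.isinf(sm_pred).any().item()
first_row_has_inf = torch.isinf(first_row).any().item()

# Optional: disable scientific notation so both prints use the same format
torch.set_printoptions(sci_mode=False)
print(sm_pred)
print(first_row)
print(same_values, has_inf, first_row_has_inf)
```

Running the same `torch.isinf` check on the real `sm_pred` would show whether the `inf` in the NLL comes from the tensor itself rather than from the indexing.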


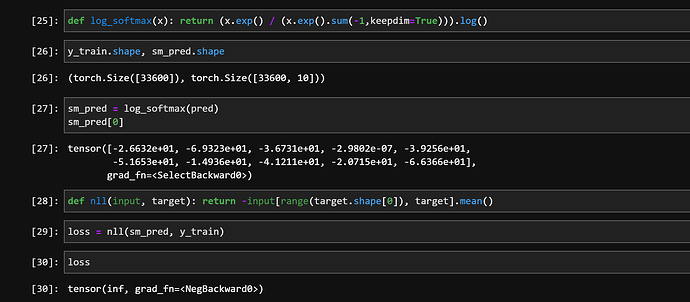

I am re-implementing a notebook from 2019. This is the log_softmax function and the NLL function. I am just using the exact same code from the original notebook, but my preds are still not the same. Has any functionality used in these functions changed during these years?

I’m sure a lot of things were moved around between 2019 and now, but I don’t know the exact changes to the operations you are using.

However, your current manual log_softmax implementation is not numerically stable, and I would recommend using F.log_softmax, which might be able to avoid the invalid outputs, assuming the input does not contain any invalid values (it did before).

Yeah, I understand. But I am implementing it from scratch just to deepen my understanding of the PyTorch library. In the end, I use the F.log_softmax function for all my projects.

I understand the idea, but in this case it would be your responsibility to check the numerical stability as your current implementation can create invalid outputs more easily:

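```python
import torch
import torch.nn.functional as F

def log_softmax(x):
    # Naive version: exp() overflows to inf for large inputs
    return (x.exp() / (x.exp().sum(-1, keepdim=True))).log()

x = torch.tensor([[-100., 100.]])

out = log_softmax(x)
print(out)
# tensor([[-inf, nan]])

out = F.log_softmax(x, dim=1)
print(out)
# tensor([[-200., 0.]])
```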
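If the goal is to keep a from-scratch version, one common way to avoid the overflow is to subtract the row-wise maximum before exponentiating (the log-sum-exp trick). The sketch below is one possible approach, not the code from the original notebook; the names `stable_log_softmax` and `nll` and the target label are assumptions made for illustration.

```python
import torch
import torch.nn.functional as F

def stable_log_softmax(x):
    # Shift by the row-wise max so the largest exponent is exp(0) = 1,
    # which keeps exp() from overflowing to inf
    shifted = x - x.max(dim=-1, keepdim=True).values
    # log_softmax(x) = shifted - log(sum(exp(shifted)))
    return shifted - shifted.exp().sum(dim=-1, keepdim=True).log()

def nll(log_probs, targets):
    # Integer array indexing: pick the log-prob of the target class in each row
    rows = torch.arange(targets.shape[0])
    return -log_probs[rows, targets].mean()

x = torch.tensor([[-100., 100.]])
targets = torch.tensor([0])  # hypothetical label for the single sample

out = stable_log_softmax(x)
loss = nll(out, targets)

print(out)
print(loss)
print(torch.allclose(out, F.log_softmax(x, dim=-1)))
```

With the same input that produced `-inf` and `nan` in the naive version, this variant should stay finite and agree with `F.log_softmax`, so the NLL computed through integer array indexing is finite as well.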