I am trying to train an image classifier, but the model always overfits.

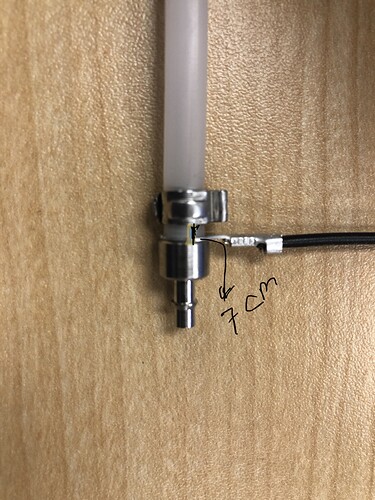

**Goal:** Defect classifier.

Any part with a distance less than or greater than 7 cm is defective. Number of images I used: 405 perfect and 385 defective.
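
For reference when reading the accuracy numbers further down, the class balance implied by these counts can be worked out directly. This is a minimal sketch using only the two counts stated above; the baseline figure assumes the validation split has roughly the same class proportions as the full set, which the post does not state.

```python
# Class counts taken from the post.
counts = {"perfect": 405, "defected": 385}

total = sum(counts.values())
shares = {label: n / total for label, n in counts.items()}

# Accuracy of a trivial model that always predicts the larger class.
# Assumption: the evaluation data has the same class proportions as the full set.
majority_label = max(counts, key=counts.get)
baseline_accuracy = counts[majority_label] / total

for label, share in shares.items():
    print(f"{label}: {counts[label]} images ({share:.1%})")
print(f"majority-class baseline accuracy: {baseline_accuracy:.3f}")
```

The two classes are close to balanced, so plain accuracy is a reasonable first metric here.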

Here is my code:

    import torch
    import torch.nn as nn
    import torchvision

    num_classes = 2

    # Load an ImageNet-pretrained ResNet-18.
    resnet18 = torchvision.models.resnet18(pretrained=True)

    # Freeze every parameter whose name does not contain "bn",
    # so only the bn1/bn2 batch-norm layers of the backbone stay trainable.
    for name, param in resnet18.named_parameters():
        if "bn" not in name:
            param.requires_grad = False

    # Replace the classifier head; the new layers are trainable by default.
    resnet18.fc = nn.Sequential(
        nn.Linear(resnet18.fc.in_features, 512),
        nn.ReLU(),
        nn.Dropout(),
        nn.Linear(512, num_classes),
    )

    # Move the model to the GPU once the new head is attached.
    resnet18.cuda()

    optimizer = torch.optim.SGD(resnet18.parameters(), lr=0.001)

    def train(model, optimizer, loss_fn, train_loader, val_loader, epochs=20):
        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
        for epoch in range(epochs):
            training_loss = 0.0
            valid_loss = 0.0

            # Training pass
            model.train()
            for inputs, targets in train_loader:
                optimizer.zero_grad()
                inputs, targets = inputs.to(device), targets.to(device)
                output = model(inputs)
                loss = loss_fn(output, targets)
                loss.backward()
                optimizer.step()
                # Weight the batch-mean loss by the batch size
                training_loss += loss.item() * inputs.size(0)
            training_loss /= len(train_loader.dataset)

            # Validation pass: gradients are not needed here
            model.eval()
            num_correct = 0
            num_examples = 0
            with torch.no_grad():
                for inputs, targets in val_loader:
                    inputs, targets = inputs.to(device), targets.to(device)
                    output = model(inputs)
                    loss = loss_fn(output, targets)
                    valid_loss += loss.item() * inputs.size(0)
                    # argmax over the logits picks the same class as argmax over softmax
                    correct = torch.eq(output.argmax(dim=1), targets)
                    num_correct += correct.sum().item()
                    num_examples += correct.shape[0]
            valid_loss /= len(val_loader.dataset)

            print('Epoch: {}, Training Loss: {:.2f}, Validation Loss: {:.2f}, accuracy = {:.2f}'.format(
                epoch, training_loss, valid_loss, num_correct / num_examples))

    train(resnet18.cuda(), optimizer, torch.nn.CrossEntropyLoss(), dl_train, dl_val, epochs=20)

Here are the statistics:

    Epoch: 0, Training Loss: 0.70, Validation Loss: 0.67, accuracy = 0.58
    Epoch: 1, Training Loss: 0.67, Validation Loss: 0.65, accuracy = 0.69
    Epoch: 2, Training Loss: 0.66, Validation Loss: 0.64, accuracy = 0.66
    Epoch: 3, Training Loss: 0.64, Validation Loss: 0.61, accuracy = 0.78
    Epoch: 4, Training Loss: 0.63, Validation Loss: 0.59, accuracy = 0.80
    Epoch: 5, Training Loss: 0.61, Validation Loss: 0.57, accuracy = 0.75
    Epoch: 6, Training Loss: 0.60, Validation Loss: 0.56, accuracy = 0.73
    Epoch: 7, Training Loss: 0.58, Validation Loss: 0.55, accuracy = 0.79
    Epoch: 8, Training Loss: 0.55, Validation Loss: 0.54, accuracy = 0.80
    Epoch: 9, Training Loss: 0.55, Validation Loss: 0.53, accuracy = 0.78
    Epoch: 10, Training Loss: 0.54, Validation Loss: 0.50, accuracy = 0.82
    Epoch: 11, Training Loss: 0.52, Validation Loss: 0.48, accuracy = 0.82
    Epoch: 12, Training Loss: 0.50, Validation Loss: 0.46, accuracy = 0.85
    Epoch: 13, Training Loss: 0.49, Validation Loss: 0.42, accuracy = 0.90
    Epoch: 14, Training Loss: 0.47, Validation Loss: 0.43, accuracy = 0.87
    Epoch: 15, Training Loss: 0.48, Validation Loss: 0.40, accuracy = 0.87
    Epoch: 16, Training Loss: 0.46, Validation Loss: 0.40, accuracy = 0.89
    Epoch: 17, Training Loss: 0.44, Validation Loss: 0.39, accuracy = 0.85
    Epoch: 18, Training Loss: 0.42, Validation Loss: 0.34, accuracy = 0.89
    Epoch: 19, Training Loss: 0.42, Validation Loss: 0.35, accuracy = 0.85
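
To make the log easier to inspect, the printed lines can be parsed and the gap between validation and training loss computed per epoch. The following is a minimal sketch, assuming the exact line format printed by `train`. `parse_log` and `PATTERN` are hypothetical names introduced for illustration, and only the first and last log lines are pasted in to keep it short.

```python
import re

# First and last lines of the log, copied verbatim; paste the others as needed.
log = """\
Epoch: 0, Training Loss: 0.70, Validation Loss: 0.67, accuracy = 0.58
Epoch: 19, Training Loss: 0.42, Validation Loss: 0.35, accuracy = 0.85
"""

# Matches the format string used by the print call in train().
PATTERN = re.compile(
    r"Epoch: (\d+), Training Loss: ([\d.]+), "
    r"Validation Loss: ([\d.]+), accuracy = ([\d.]+)"
)


def parse_log(text):
    """Return one dict per log line with the epoch, both losses and accuracy."""
    rows = []
    for match in PATTERN.finditer(text):
        epoch, train_loss, val_loss, accuracy = match.groups()
        rows.append({
            "epoch": int(epoch),
            "train_loss": float(train_loss),
            "val_loss": float(val_loss),
            "accuracy": float(accuracy),
        })
    return rows


rows = parse_log(log)
for row in rows:
    # A positive gap means the validation loss is above the training loss.
    gap = row["val_loss"] - row["train_loss"]
    print(f"epoch {row['epoch']:2d}: val - train = {gap:+.2f}, "
          f"accuracy = {row['accuracy']:.2f}")
```

The sign and trend of the gap across epochs is what to look at: a validation loss that climbs while the training loss keeps falling is the usual sign of overfitting.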